Mitigating the Impacts of Climate Change: How EU HPC Centres of Excellence Are Meeting the Challenge

“The past seven years are on track to be the seven warmest on record,” according to the World Meteorological Organization. Furthermore, the Earth is already experiencing the extreme weather consequences of a warmer planet: in 2021 alone, these included record snow in Madrid, record flooding in Germany and record wildfires in Greece. EU HPC Centres of Excellence (CoEs) help to address current societal challenges like the Covid-19 pandemic, but you might wonder: what can the EU HPC CoEs do about climate change? For some CoEs, the answer is fairly obvious. However, just as with Covid-19, the contributions of other CoEs may surprise you!

Given that rates of extreme weather events are already increasing, what can EU HPC CoEs do to help today? The Centre of Excellence in Simulation of Weather and Climate in Europe (ESiWACE) is optimizing weather and climate simulations for the latest HPC systems to be fast and accurate enough to predict specific extreme weather events. These increasingly detailed climate models have the capacity to help policy makers make more informed decisions by “forecasting” each decision’s simulated long-term consequences, ultimately saving lives. Beyond this software development, ESiWACE also supports the spread of these more powerful simulations through training events, large-scale use-case collaborations, and direct software support opportunities for related projects.

Even setting aside extreme weather and long-term consequences, though, climate change has other negative impacts on daily life. For example, the World Health Organization states that air pollution increases rates of “stroke, heart disease, lung cancer, and both chronic and acute respiratory diseases, including asthma.” The HPC and Big Data Technologies for Global Systems Centre of Excellence (HiDALGO) exists to provide the computational and data analytic environment needed to tackle global problems on this scale. Their Urban Air Pollution Pilot, for example, can forecast air pollution levels at a resolution of down to two meters based on traffic patterns and 3D geographical information about a city. Armed with this information and the ability to virtually test mitigations, policy makers are empowered to make more informed and effective decisions, just as with HiDALGO’s Covid-19 modelling.

What does MAterials design at the eXascale have to do with climate change? Among other things, MaX is dramatically speeding up the search for materials that make more efficient, safer, and smaller lithium-ion batteries, a field of study that has had little success despite decades of searching. The otherwise labour-intensive process of finding new candidate materials moves far faster when conducted computationally on HPC systems. Using HPC also ensures that human researchers can focus their experiments on only the most promising material candidates.

Continuing with the theme of materials discovery, did you know that it is possible to “capture” CO2 from the atmosphere? We already have the technology to take this greenhouse gas out of our air and put it back into materials that keep it from further warming the planet. These materials could even be a new source of fuel, almost like a man-made, renewable oil. The reason this isn’t yet part of the solution to climate change is that the process is too slow. In answer, the Novel Materials Discovery Centre of Excellence (NoMaD CoE) is working on finding catalysts to speed up carbon capture. Their recent success story about a publication in Nature discusses how they have used HPC and AI to identify the “genes” of materials that could make efficient carbon-capture catalysts. In our race against the limited time we have to prevent the worst impacts of climate change, the kind of HPC-facilitated efficiency boost experienced by MaX and NoMaD could be critical.

Once one considers the need for efficiency, it becomes clear what the Centre of Excellence for engineering applications, EXCELLERAT, might be able to offer. Like all of the EU HPC CoEs, EXCELLERAT is working to prepare software to run on the next generation of supercomputers. This preparation is vital because these computers will use a mixture of processor types and be organized in a variety of architectures. Although this variety makes the machines themselves more flexible and powerful, it also demands increased flexibility from the software that runs on them. For example, the software will need to dynamically change how work is distributed among processors depending on how many, and what kind, a specific supercomputer has. Without this ability, the software will run at the same speed no matter how big, fast, or powerful the computer is: as if it only knows how to work with a team of 5 despite having a team of 20. Hence, EXCELLERAT is preparing engineering simulation software to work efficiently on any given machine. This kind of simulation software makes it possible to design new airplanes more rapidly, optimising for characteristics such as lower drag and better fuel efficiency, less noise pollution, and easier recycling of materials once the plane is retired.
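The idea of matching the work distribution to the hardware can be illustrated with a minimal sketch. It assumes, purely for illustration, that each processor's relative throughput is already known (the function name `distribute_work` and the throughput figures are hypothetical, not EXCELLERAT's method) and splits a fixed number of work items in proportion to it:

```python
def distribute_work(n_items: int, throughputs: list[float]) -> list[int]:
    """Split n_items among processors in proportion to their relative throughput.

    Hypothetical illustration: throughputs are assumed known, e.g. measured
    during a short benchmark run. Uses largest-remainder rounding so that the
    counts always add up to n_items.
    """
    if n_items < 0 or not throughputs or any(t <= 0 for t in throughputs):
        raise ValueError("need n_items >= 0 and positive throughputs")
    total = sum(throughputs)
    ideal = [n_items * t / total for t in throughputs]  # fractional shares
    counts = [int(share) for share in ideal]             # round down first
    leftover = n_items - sum(counts)
    # Give the remaining items to the processors with the largest remainders.
    by_remainder = sorted(range(len(ideal)), key=lambda i: ideal[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


# Two CPU sockets and two accelerators, with made-up relative speeds.
print(distribute_work(100, [1.0, 1.0, 4.0, 4.0]))  # [10, 10, 40, 40]
```

On a machine with a different mix of processors, the same call with different throughputs gives a different split; a genuinely dynamic scheme would re-measure throughputs during the run and redistribute accordingly.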

Another CoE using HPC efficiency to make our world more sustainable is the Centre of Excellence for Combustion (CoEC). Focused exclusively on combustion simulation, they are working to discover new non-carbon or low-carbon fuels and more sustainable ways of burning them. Until now, the primary barrier to this kind of research has been the computing limitations of HPC systems, which could not support realistically detailed simulations. Only with the capacity of the latest and future machines will researchers finally be able to run simulations accurate enough for practical advances.

Beyond the pursuit of more sustainable combustion, the Energy Oriented Centre of Excellence (EoCoE) is boosting the efficiency of entirely different energy sources. In the realm of Wind for Energy, their simulations designed for the latest HPC systems have increased the size of simulated wind farms from 5 to 40 square kilometres, which allows researchers and industry to far better understand the impact of land terrain and wind turbine placement. They are also working beyond established wind energy technology to help design an entirely new kind of wind turbine.

In work related to solar energy, the EoCoE Materials for Energy group is finding new materials to improve the efficiency of solar cells, and is separately working on materials to harvest energy from the mixing of salt and fresh water in estuaries. Meanwhile, the Water for Energy group is improving the modelling of groundwater movement to enable more efficient positioning of geothermal wells, and the Fusion for Energy group is working to improve the accuracy of models that predict fusion energy output.

EoCoE is also developing simulations to support Meteorology for Energy, including the ability to predict wind and solar power capacity in Europe. Unlike our normal daily forecast, energy forecasts need to calculate the impact of fog or cloud thickness on solar cells, and of wind fluctuations caused by extreme temperature shifts or storms on wind turbines. Without this more advanced form of weather forecasting, it is infeasible for these renewable but variable energy sources to make up a large share of the power supplied to our fluctuation-sensitive grids. Before we are able to rely on wind and solar power, it will be essential to predict renewable energy output in time to make changes or supplement it with alternative energy sources, especially in light of the previously mentioned increase in extreme weather events.

Suffice it to say that climate change poses a variety of enormous challenges. The above describes only some of the work EU HPC CoEs are already doing and none of what they may be able to do in the future! For instance, HiDALGO also has a migration modelling program currently designed to help policy makers divert resources most effectively to migrations caused by conflict. However, similar principles could theoretically be employed in combination with weather modelling like that done by ESiWACE to create a climate migration model. Where expertise meets collaboration, the possibilities are endless! Make sure to follow the links above and our social media handles below to stay up to date on EU HPC CoE activities.

FocusCoE at EuroHPC Summit Week 2022

With the support of the FocusCoE project, almost all European HPC Centres of Excellence (CoEs) participated once again in the EuroHPC Summit Week (EHPCSW) this year in Paris, France: the first in-person EHPCSW since the 2019 event in Poland. Hosted by the French HPC agency Grand équipement national de calcul intensif (GENCI), the conference was organised by the Partnership for Advanced Computing in Europe (PRACE), the European Technology Platform for High-Performance Computing (ETP4HPC), the EuroHPC Joint Undertaking (EuroHPC JU), and the European Commission (EC). As usual, this year’s event gathered the main European HPC stakeholders, from technology suppliers and HPC infrastructures to scientific and industrial HPC users in Europe.

At the workshop on the European HPC ecosystem on Tuesday 22 March at 14:45, where the diversity of the ecosystem was presented around the Infrastructure, Applications, and Technology pillars, project coordinator Dr. Guy Lonsdale from Scapos talked about FocusCoE and the CoEs’ common goal.

Later that day, from 16:30 to 18:00, the FocusCoE project hosted a session titled “European HPC CoEs: perspectives for a healthy HPC application eco-system and Exascale” involving most of the EU CoEs. The session discussed the key role of CoEs in the EuroHPC application pillar, focussing on their impact on building a vibrant, healthy HPC application eco-system and on perspectives for Exascale applications. As described by Dr. Andreas Wierse on behalf of EXCELLERAT, “The development is continuous. To prepare companies to make good use of this technology, it’s important to start early. Our task is to ensure continuity from using small systems up to the Exascale, regardless of whether the user comes from a big company or from an SME”.

Attendees from HPC-related academia and industry also showed keen interest in the agenda, filling the hall to standing room only. In light of the call for new EU HPC Centres of Excellence and the increasing return to in-person events like EHPCSW, the effort to prepare the EU for Exascale appears to have a bright future.

FocusCoE Hosts Intel OneAPI Workshop for the EU HPC CoEs

On March 2, 2022, FocusCoE hosted Intel for a workshop introducing the oneAPI development environment. In all, over 40 researchers representing the EU HPC Centres of Excellence (CoEs) attended the single-day workshop to gain an overview of oneAPI. Throughout the day, eight presenters from Intel covered the oneAPI vision, design, and toolkits, a use case with GROMACS (which is already used by some of the EU HPC CoEs), and specific tools for migration and debugging.

Launched in 2019, Intel’s oneAPI cross-industry, open, standards-based unified programming model is being designed to deliver a common developer experience across accelerator architectures. By saving the time otherwise spent designing for specific accelerators, oneAPI is intended to enable faster application performance, more productivity, and greater innovation. As summarized on Intel’s oneAPI website, “Apply your skills to the next innovation, and not to rewriting software for the next hardware platform.” Given the work that EU HPC CoEs are currently doing to optimise codes for Exascale HPC systems, any tools that make this process faster and more efficient can only boost the CoEs’ capacity for innovation and their preparedness for future heterogeneous systems.

The oneAPI industry initiative also encourages collaboration on the oneAPI specification and compatible oneAPI implementations. To that end, Intel is investing time and expertise in events like this workshop to give researchers the knowledge they need not only to use oneAPI but also to help improve it. The presenters also make themselves available after the workshop to answer questions from attendees on an ongoing basis. Throughout our event, participants could ask questions and get real-time answers, as well as offers of further support, from software architects, technical consulting engineers, and the researcher who presented the use case. Lastly, the full video and slides from the presentations are available below for any CoEs who were unable to attend or would like a second look at the detailed presentations.

NAFEMS 2021 World Congress

In the last week of October, nearly 950 participants from industry and academia gathered for the 2021 World Congress (NWC21) of NAFEMS, the International Association for the Engineering Modelling, Analysis and Simulation Community. The Congress was originally planned as a hybrid event, but all programming unfortunately had to be moved online. This year’s Congress was also held in conjunction with the 5th International SPDM (Simulation Process & Data Management) Conference, the NAFEMS Multiphysics Simulation Conference, and a dedicated Automotive CAE Symposium.

Unsurprisingly, the online and truly globally accessible conference also attracted a record-breaking number of abstract submissions: over 550. Among those accepted, the EXCELLERAT and POP Centres of Excellence gave five 20-minute presentations, ranging from improving airplane simulations to improving the HPC experience of new users. From Monday to Thursday, representatives from EXCELLERAT, POP, and FocusCoE also presented more general videos and materials on current projects at their 3D interactive virtual booth. This year, we welcomed over 100 visitors to the booth, and the completely new NAFEMS World of Engineering Simulation 3D experience had up to 600 conference participants strolling the virtual exhibition hall at any given time.

Beginning on Tuesday the 26th, Ricard Borrell presented Airplane Simulations on Heterogeneous Pre-Exascale Architectures on behalf of EXCELLERAT CoE. He used the example of improved airplane aerodynamics simulations to discuss how their Alya code is preparing researchers to fully utilise the next generation of Exascale HPC systems and their heterogeneous hardware architectures. Without optimisations like those made to Alya, current HPC codes would not be able to run in parallel on different types of processors to the extent that new Exascale systems will require. Thus, they would use only a fraction of the speed and processing power available at Exascale.

Continuing in this theme, Amgad Dessoky presented an overview of how the EXCELLERAT Centre of Excellence is Paving the Way for the Development to Exascale Multiphysics Simulations including the 6 computational codes they are optimizing for Exascale: Nek5000, Alya, AVBP, TPLS, FEniCS, and Coda. Using automotive, aerospace, energy, and manufacturing industrial sector use cases, he described the common challenges for developers and potential users of HPC Exascale applications. Participants were also invited to discuss how EXCELLERAT can best support the needs and required competencies of the Multiphysics simulation community going forward.

One of these required competencies is the ability to understand and improve the performance bottlenecks of parallel codes. In answer, Federico Panichi presented a talk titled Improving the Performance of Engineering Codes on behalf of the Performance Optimisation and Productivity (POP) Centre of Excellence. The set of hierarchical metrics forming the POP performance assessment methodology cuts out the time-consuming trial-and-error process of code optimisation by identifying issues such as memory bottlenecks, communication inefficiencies, and load imbalances. Using optimised engineering codes as examples, he demonstrated how POP’s free-of-charge services could enable any EU or UK academic or commercial organisation to speed up time to solution, solve larger and more challenging problems, or reduce compute costs.
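The top of a hierarchical efficiency breakdown of this kind can be sketched in a few lines. This sketch assumes per-process "useful" computation times (time outside communication calls) are already available, for example from a trace; the function name and timing values are illustrative. At the top level, parallel efficiency is the product of load balance (average over maximum useful time) and communication efficiency (maximum useful time over total runtime):

```python
def pop_efficiencies(useful_times: list[float], runtime: float) -> dict[str, float]:
    """Top-level POP-style efficiency metrics.

    useful_times: per-process time spent in useful computation (hypothetical
                  values here; in practice taken from a trace).
    runtime:      total elapsed time of the parallel region.
    """
    avg_useful = sum(useful_times) / len(useful_times)
    max_useful = max(useful_times)
    load_balance = avg_useful / max_useful        # 1.0 = perfectly balanced
    communication_eff = max_useful / runtime      # 1.0 = no time lost to communication
    return {
        "load_balance": load_balance,
        "communication_efficiency": communication_eff,
        "parallel_efficiency": load_balance * communication_eff,
    }


metrics = pop_efficiencies([8.0, 6.0, 7.0, 5.0], runtime=10.0)
print(metrics)
```

Here a parallel efficiency of about 0.65 splits into a load-balance factor of 0.8125 and a communication factor of 0.8, which points the developer at load imbalance as the larger loss before any trial-and-error tuning.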

On Wednesday the 27th, presentations continued with Christian Gscheidle representing EXCELLERAT and A Flexible and Efficient In-situ Data Analysis Framework for CFD Simulations. Because Computational Fluid Dynamics (CFD) simulations increasingly produce far more data than can be saved in real time, researchers often see only the final analysis results and lose the intermediate data. It also means that they must wait until the full simulation has run before being able to make any improvements. In contrast, he presented the EXCELLERAT tool that uses machine learning to perform in-situ analyses of data produced during large-scale simulations in real time, so that researchers can see intermediate results and early trends. In an automotive use case involving an HVAC duct, the tool reduced compute time because researchers were able to abort simulations showing unwanted behaviour.
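The EXCELLERAT tool itself uses machine learning; as a much simpler stand-in, the sketch below only shows the general shape of in-situ monitoring with early abort. It watches one scalar per time step (for example a hypothetical monitored pressure drop) and flags the run when the value becomes non-finite or jumps far outside its recent behaviour. The class name, window and threshold are illustrative assumptions:

```python
import math
from collections import deque


class InSituMonitor:
    """Flag a run when a monitored scalar departs sharply from its recent history.

    A simple rolling z-score check, used here only as a stand-in for a learned model.
    """

    def __init__(self, window: int = 50, threshold: float = 6.0) -> None:
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def should_abort(self, value: float) -> bool:
        if not math.isfinite(value):          # NaN or inf: the run has diverged
            return True
        if len(self.history) == self.history.maxlen:
            mean = sum(self.history) / len(self.history)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.history) / len(self.history))
            if std > 0 and abs(value - mean) / std > self.threshold:
                return True
        self.history.append(value)
        return False


def first_abort_step(values, monitor: InSituMonitor):
    """Return the index of the first step that triggers an abort, or None."""
    for step, value in enumerate(values):
        if monitor.should_abort(value):
            return step
    return None


# A quietly oscillating signal followed by a sudden jump at step 100.
signal = [1.0 + 0.01 * math.sin(i) for i in range(100)] + [5.0]
print(first_abort_step(signal, InSituMonitor()))  # 100
```

Because the check runs as each value arrives, the decision to stop is available at step 100 rather than after the full simulation has been written to disk.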

Wrapping up the presentations, Janik Schüssler presented Creating Connections: Enabling High Performance Computing for Industry through a Data Exchange & Workflow Platform on behalf of EXCELLERAT. Continuing in the theme of the previous presentations, this tool addresses the needs and competencies of current and potential HPC users, albeit in a slightly different way. As discussed in the talk, where other tools or trainings work to develop HPC competencies in the user, this prototype platform would bring HPC to the user both in terms of competencies and location. The user would be able to remotely access clusters (currently both HLRS Hawk and Vulcan) from anywhere using an authorised device. Additionally, they would be able to run simulations in the web front end without any command line interactions, which can have a steep learning curve for new users. Ultimately, the savings in logistical coordination, travel, and training time would drastically lower the entry barrier to HPC use.

If you’re interested in following how EXCELLERAT, POP, and all the European HPC Centres of Excellence are preparing us for Exascale and improving the HPC user experience, sign up for our newsletters:

Focus CoE Newsletter (for coverage of all European HPC CoEs)

Excellerat Success Story: Bringing industrial end-users to Exascale computing: An industrial level combustion design tool on 128K cores

CoE involved: EXCELLERAT

Success story highlights:

- Exascale

- Industry sector: Aerospace

- Key codes used: AVBP

Organisations & Codes Involved:

CERFACS is a theoretical and applied research centre, specialised in modelling and numerical simulation. Through its facilities and expertise in High Performance Computing (HPC), CERFACS deals with major scientific and technical research problems of public and industrial interest.

GENCI (Grand Équipement National de Calcul Intensif) is the French High-Performance Computing (HPC) National Agency in charge of promoting and acquiring HPC, Artificial Intelligence (AI) capabilities, and massive data storage resources.

Cellule de Veille Technologique GENCI (CVT) is an interest group focused on technology watch in High Performance Computing (HPC), bringing together French public research organisations such as CEA and INRIA, among others. It offers early access to novel architectures and technical support for preparing codes for the near future of HPC.

AVBP is a parallel Computational Fluid Dynamics (CFD) code that solves the three-dimensional compressible Navier-Stokes equations on unstructured and hybrid grids. Its main area of application is the modelling of unsteady reacting flows in combustor configurations and turbomachines. The prediction of unsteady turbulent flows is based on the Large Eddy Simulation (LES) approach, which has emerged as a promising technique for problems associated with time-dependent phenomena and coherent eddy structures.

CHALLENGE:

Some physical processes, like soot formation, are so CPU-intensive and non-deterministic that their predictive modelling is out of reach today, limiting our insights to ad hoc correlations and preliminary assumptions. Moving these runs to the Exascale level will allow simulations that are orders of magnitude longer, achieving the statistical convergence required for a design tool.
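Why much longer runs are needed can be made concrete with a standard estimate. Assuming approximately independent samples (correlated LES samples would need an effective sample size instead), the statistical error of a time-averaged quantity with standard deviation σ, sampled N times, scales as

$$\varepsilon \approx \frac{\sigma}{\sqrt{N}}, \qquad \frac{\varepsilon_2}{\varepsilon_1} = \sqrt{\frac{N_1}{N_2}}$$

so reducing the error by a factor of 10 requires roughly 100 times as many independent samples, i.e. a simulation about two orders of magnitude longer.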

The complexity at the code level of unlocking node-level and system-level performance is challenging, and requires code porting, optimisation and algorithm refactoring on various architectures on the way to Exascale performance.

SOLUTION:

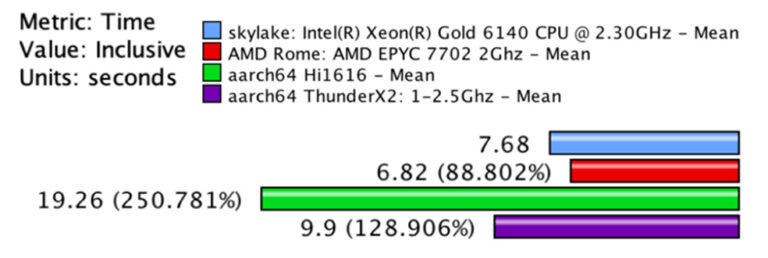

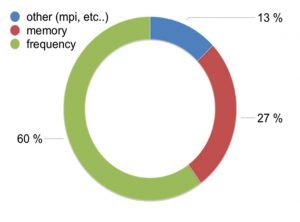

In order to prepare the AVBP code for architectures that were not available at the start of the EXCELLERAT project, CERFACS teamed up with the CVT from GENCI, Arm, AMD and the EPI project to port, benchmark and (when possible) optimise the AVBP code for Arm and AMD architectures. This collaboration ensures early access to these new architectures and first-hand information to prepare our codes for the widespread availability of systems equipped with these processors. The AVBP developers got access to the IRENE AMD system of PRACE at TGCC, with support from AMD and Atos, which allowed them to characterise the application on this architecture and create a predictive model of how the different features of the processor (frequency, bandwidth) could affect the performance of the code. They were also able to port the code to several flavours of Arm processors, isolating compiler dependencies, and to identify performance bottlenecks to be investigated before large systems become available in Europe.
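The article does not describe the form of the CERFACS predictive model. As a hedged illustration of how processor frequency and memory bandwidth can enter such a model, the sketch below uses the standard roofline estimate instead: attainable throughput is the lesser of the compute peak (cores × frequency × FLOPs per cycle) and memory bandwidth × arithmetic intensity. All hardware figures are hypothetical:

```python
def roofline_gflops(cores: int, freq_ghz: float, flops_per_cycle: int,
                    bandwidth_gb_s: float, intensity_flop_per_byte: float) -> float:
    """Attainable GFLOP/s under the roofline model (a stand-in, not AVBP's model)."""
    compute_peak = cores * freq_ghz * flops_per_cycle            # GFLOP/s
    memory_ceiling = bandwidth_gb_s * intensity_flop_per_byte    # GB/s * FLOP/byte = GFLOP/s
    return min(compute_peak, memory_ceiling)


# Hypothetical node and a low-intensity (memory-bound) CFD kernel.
base = roofline_gflops(64, 2.0, 16, bandwidth_gb_s=200.0, intensity_flop_per_byte=0.5)
faster_clock = roofline_gflops(64, 2.5, 16, bandwidth_gb_s=200.0, intensity_flop_per_byte=0.5)
more_bandwidth = roofline_gflops(64, 2.0, 16, bandwidth_gb_s=300.0, intensity_flop_per_byte=0.5)
print(base, faster_clock, more_bandwidth)  # 100.0 100.0 150.0
```

For a memory-bound kernel, this model predicts no gain from a higher clock but a proportional gain from more bandwidth, which is the kind of question a characterisation like this helps answer before large systems are available.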

Business impact:

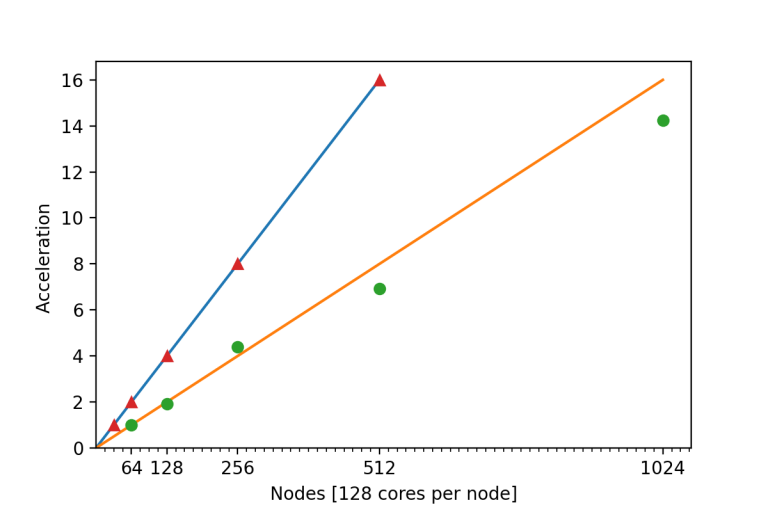

The AVBP code was ported and optimised for the TGCC/GENCI/PRACE system Joliot-CURIE ROME, with excellent strong and weak scaling performance up to 128,000 cores and 32,000 cores respectively. These optimisations directly benefited four PRACE projects on the same system in the following call for proposals.
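Strong and weak scaling are usually summarised as parallel efficiencies relative to a reference run. A minimal sketch, assuming the standard definitions (strong scaling: fixed total problem size; weak scaling: fixed problem size per core); the function names and timings below are made up and are not AVBP measurements:

```python
def strong_scaling_efficiency(t_ref: float, n_ref: int, t: float, n: int) -> float:
    """Fixed total problem: ideally, time drops in proportion to the core count."""
    return (t_ref * n_ref) / (t * n)


def weak_scaling_efficiency(t_ref: float, t: float) -> float:
    """Fixed work per core: ideally, time stays constant as cores are added."""
    return t_ref / t


# Hypothetical timings (seconds per iteration).
print(strong_scaling_efficiency(t_ref=100.0, n_ref=1_000, t=16.0, n=8_000))  # 0.78125
print(weak_scaling_efficiency(t_ref=10.0, t=12.5))                            # 0.8
```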

Besides AMD processors, the EPI project and GENCI’s CVT, as well as EPCC (EXCELLERAT’s partner), provided access to Arm-based clusters based, respectively, on Marvell ThunderX2 (the Inti prototype hosted and operated by CEA) and Hi1616 (early silicon from Huawei) architectures. This access provided important feedback on code portability with the Arm and GCC compilers, on single-processor performance, and on strong scaling performance up to 3,072 cores.

Results from this collaboration have been included on the Arm community blog [1]. A white paper on this collaboration is underway with GENCI and CORIA CNRS.

Benefits for further research:

- Code ready for widespread access to the AMD Rome architecture

- Strong and weak scaling measurements up to 128,000 cores

- Initial optimisations for Arm architectures

Excellerat Success Story: Enabling High Performance Computing for Industry through a Data Exchange & Workflow Portal

CoE involved: EXCELLERAT

Success story highlights:

- Keywords:
  - Data Transfer
  - Data Management
  - Data Reduction
  - Automatisation, Simplification
  - Dynamic Load Balancing
  - Dynamic Mode Decomposition
  - Data Analytics
  - Combustor Design

- Industry sector: Aeronautics

- Key codes used: Alya

Organisations & Codes Involved:

As an IT service provider, SSC-Services GmbH develops individual concepts for cooperation between companies and customer-oriented solutions for all aspects of digital transformation. Since 1998, the company, based in Böblingen (Germany), has been offering solutions for the technical connection and cooperation of large companies and their suppliers or development partners. The company’s roots lie in the management and exchange of data of all types and sizes.

RWTH Aachen University is the largest technical university in Germany. The Institute of Aerodynamics of RWTH Aachen University has extensive expertise in the simulation of turbulent flows, aeroacoustics and high-performance computing. For more than 18 years, the institute has successfully performed large-eddy simulations, combined with advanced analysis of the large-scale simulation data, for various applications.

Barcelona Supercomputing Center (BSC) is the national supercomputing centre in Spain. BSC specialises in High Performance Computing (HPC) and manages MareNostrum IV, one of the most powerful supercomputers in Europe. BSC serves the international scientific community and industries that require HPC resources. The Computing Applications for Science and Engineering (CASE) Department of BSC is involved in this work, providing the use case and the simulation code for this demonstrator.

The code used for this application is Alya, the high-performance computational mechanics code from BSC, designed to solve complex coupled multi-physics / multi-scale / multi-domain problems from the engineering realm. Alya was specially designed for massively parallel supercomputers, and its parallelisation embraces four levels of the computer hierarchy. 1) A substructuring technique with MPI as the message-passing library is used for distributed-memory supercomputers. 2) At the node level, both loop and task parallelism are exploited using OpenMP as an alternative to MPI. Dynamic load balancing techniques have also been introduced to better exploit computational resources at the node level. 3) At the CPU level, some kernels are designed to enable vectorisation. 4) Finally, accelerators such as GPUs are exploited through OpenACC pragmas or with CUDA to further enhance the performance of the code on heterogeneous computers. Alya is one of only two CFD codes in the Unified European Applications Benchmark Suite (UEABS) as well as in the Accelerator Benchmark Suite of PRACE.

Technical Challenge:

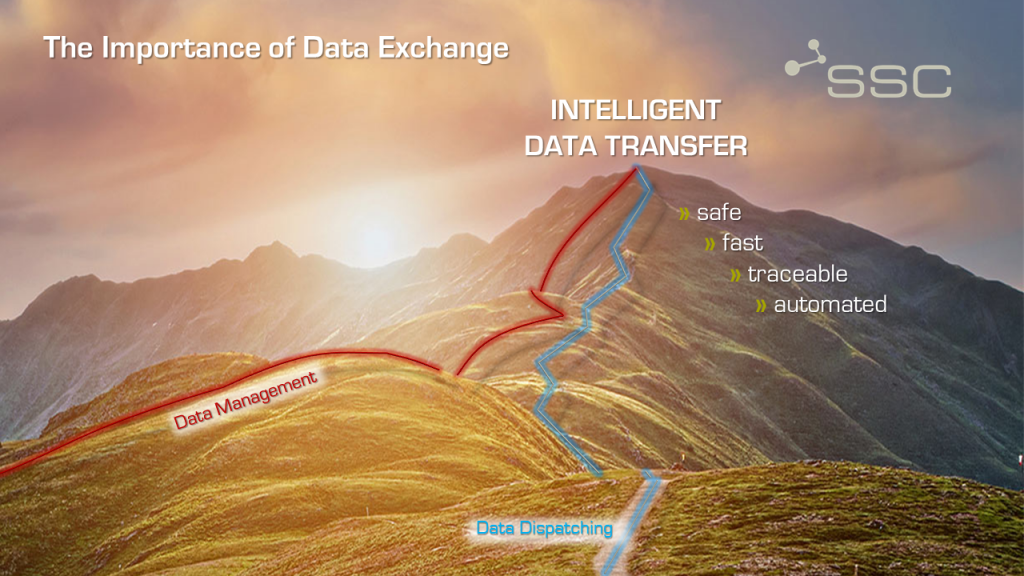

SSC is developing a secure data exchange and transfer platform as part of the EXCELLERAT project to facilitate the use of high-performance computing (HPC) for industry and to make data transfer more efficient. Today, organisations and smaller industry partners face various problems in dealing with HPC calculations, HPC in general, or even access to HPC resources. In many cases, the calculations are complex and the potential users do not have the necessary expertise to fully exploit HPC technologies without support. The developed data platform will be able to simplify or, at best, eliminate these obstacles.

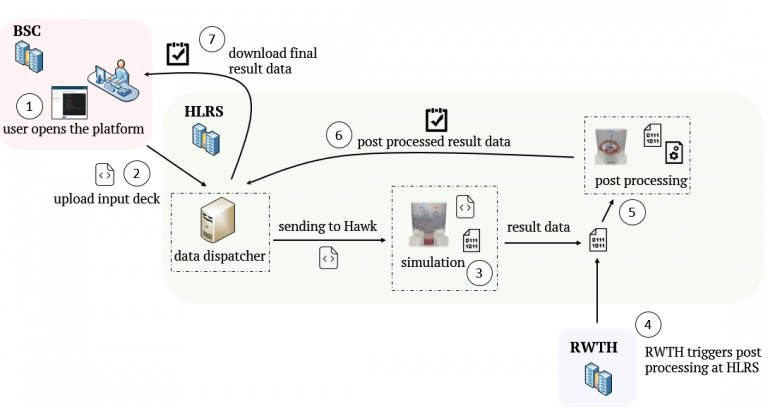

The data roundtrip starts with a user at the Barcelona Supercomputing Center who wants to simulate the Alya files. The user uploads the corresponding files through the data exchange and workflow platform and selects Hawk at HLRS as the simulation target. After the simulation has run, RWTH Aachen starts the post-processing at HLRS. Finally, the user downloads the post-processed data through the platform.

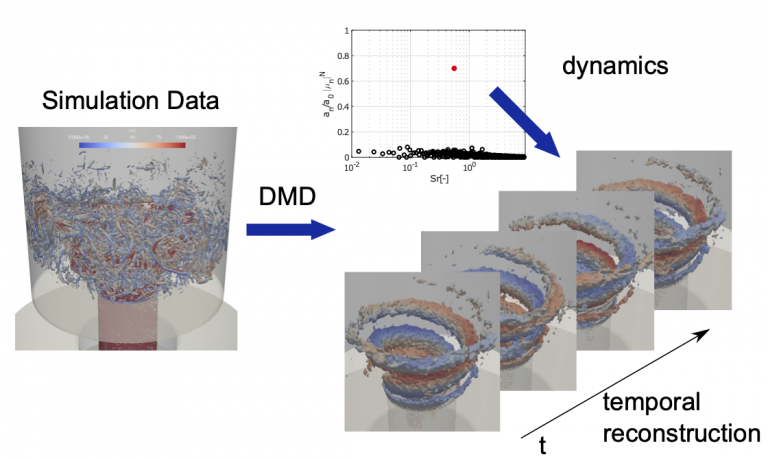

With the increasing availability of computational resources, high-resolution numerical simulations have become an indispensable tool in fundamental academic research as well as engineering product design. A key aspect of the engineering workflow is the reliable and efficient analysis of the rapidly growing high-fidelity flow field data. RWTH develops a modal decomposition toolkit to extract the energetically and dynamically important features, or modes, from the high-dimensional simulation data generated by the code Alya. These modes enable a physical interpretation of the flow in terms of spatio-temporal coherent structures, which are responsible for the bulk of energy and momentum transfer in the flow. Modal decomposition techniques can be used not only for diagnostic purposes, i.e. to extract dominant coherent structures, but also to create a reduced dynamic model with only a small number of degrees of freedom that approximates and anticipates the flow field. The modal decomposition will be executed on the same architecture as the main simulation. Besides providing better physical insights, this will reduce the amount of data that needs to be transferred back to the user.
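One of the modal decomposition techniques named in this story, proper orthogonal decomposition (POD), can be sketched with a singular value decomposition of the snapshot matrix. This is a minimal serial NumPy sketch on synthetic data, not RWTH's parallel toolkit; each column of `snapshots` is assumed to hold one flattened flow field, and the function name `pod` is illustrative:

```python
import numpy as np


def pod(snapshots: np.ndarray, n_modes: int):
    """Proper orthogonal decomposition via the SVD.

    snapshots: array of shape (n_points, n_snapshots), one flattened field per column.
    Returns the temporal mean, the leading spatial modes, the fraction of
    fluctuation energy each mode captures, and the modes' temporal coefficients.
    """
    mean = snapshots.mean(axis=1, keepdims=True)
    fluctuations = snapshots - mean
    U, s, Vh = np.linalg.svd(fluctuations, full_matrices=False)
    energy_fraction = s**2 / np.sum(s**2)
    modes = U[:, :n_modes]
    coefficients = s[:n_modes, None] * Vh[:n_modes]
    return mean, modes, energy_fraction[:n_modes], coefficients


# Synthetic field built from two coherent structures.
x = np.linspace(0.0, 2.0 * np.pi, 200)
t = np.linspace(0.0, 10.0, 80)
field = np.outer(np.sin(x), np.cos(t)) + 0.5 * np.outer(np.cos(2 * x), np.sin(3 * t))

mean, modes, energy, coeffs = pod(field, n_modes=2)
reconstruction = mean + modes @ coeffs
print(energy.sum(), np.abs(reconstruction - field).max())
```

Two modes capture essentially all of the fluctuation energy here, so the reduced model (mean plus two modes and their coefficients) reproduces the field; real turbulent data needs many more modes, and the energy fractions show how many are worth keeping.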

Scientific Challenge:

Highly accurate, turbulence scale resolving simulations, i.e. large eddy simulations and direct numerical simulations, have become indispensable for scientific and industrial applications. Due to the multi-scale character of the flow field with locally mixed periodic and stochastic flow features, the identification of coherent flow phenomena leading to an excitation of, e.g., structural modes is not straightforward. A sophisticated approach to detect dynamic phenomena in the simulation data is a reduced-order analysis based on dynamic mode decomposition (DMD) or proper orthogonal decomposition (POD).

In this collaborative framework, DMD is used to study unsteady effects and flow dynamics of a swirl-stabilised combustor from high-fidelity large-eddy simulations. The burner consists of a plenum, fuel injection, a mixing tube and a combustion chamber. Air is distributed from the plenum into a radial swirler and an axial jet through a hollow cone. Fuel is injected into a plenum inside the burner through two ports that contain 16 injection holes of 1.6 mm diameter, located on the annular ring between the cone and the swirler inlets. The fuel injected from the small holes at high velocity is mixed with the swirled air and the axial jet along a mixing tube 60 mm long with a diameter of D = 34 mm. At the end of the mixing tube, the mixture expands over a step change with a diameter ratio of 3.1 into a cylindrical combustion chamber. The burner operates at a Reynolds number of Re = 75,000, with air preheated to T_air = 453 K and hydrogen supplied at T_H2 = 320 K. The numerical simulations have been conducted on a hybrid unstructured mesh of prisms, tetrahedra and pyramids, locally refined in the regions of interest, with a total of 63 million cells.
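The operating point can be related to the geometry with the usual dimensionless definitions. Since the text does not say so, this assumes that the Reynolds number is based on the bulk velocity U_b in the mixing tube and the mixing-tube diameter D, with ν the kinematic viscosity, and that the Strouhal number Sr uses the same reference scales, with f the frequency of interest:

$$\mathrm{Re} = \frac{U_b D}{\nu} = 75{,}000, \qquad \mathrm{Sr} = \frac{f D}{U_b}$$

Reading the step change as the ratio of chamber diameter to mixing-tube diameter, the combustion chamber diameter and the area expansion ratio follow directly:

$$D_{cc} = 3.1\,D = 3.1 \times 34\ \text{mm} \approx 105\ \text{mm}, \qquad \frac{A_{cc}}{A} = \left(\frac{D_{cc}}{D}\right)^2 = 3.1^2 \approx 9.6$$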

SOLUTION:

The developed Data Exchange & Workflow Portal will be able to simplify or even eliminate these obstacles. First activities have already started. The new platform enables users to easily access the two HLRS clusters, Hawk and Vulcan, from any authorised device and to run their simulations remotely. The portal provides relevant HPC processes for the end users, such as uploading input decks, scheduling workflows, or running HPC jobs.

In order to perform data analytics, i.e. modal decomposition, on the large amounts of data that arise from Exascale simulations, a modal decomposition toolkit has been developed. An efficient and scalable parallelisation concept based on MPI and LAPACK/ScaLAPACK has been used to perform modal decompositions in parallel on large data volumes. Since DMD and POD are data-driven decomposition techniques, the time-resolved data has to be read for the whole time interval to be analysed. To handle the large amount of input and output, the software tool has been optimised to read and write the time-resolved snapshot data efficiently, in parallel in both time and space. Since the computational fluid dynamics community uses different solution data formats, a flexible modular interface has been developed to easily add the data formats of other simulation codes.

The flow field of the investigated combustor exhibits a self-excited flow oscillation known as a precessing vortex core (PVC) at a dimensionless Strouhal number of Sr = 0.55, which can lead to inefficient fuel consumption, high levels of noise and eventually damage to the combustion hardware. To analyse the dynamics of the PVC, DMD is used to extract the large-scale coherent motion from the turbulent flow field, which is characterised by a wide range of spatial and temporal scales, as shown in Figure 2 (left). The instantaneous flow field of the turbulent flame is visualised by an iso-surface of the Q-criterion coloured by the absolute velocity. The DMD analysis is performed on the three-dimensional velocity and pressure field using 2000 snapshots. The resulting DMD spectrum, showing the amplitude of each mode as a function of the dimensionless frequency, is given in Figure 2 (top). One dominant mode, marked by a red dot, is clearly visible at Sr = 0.55, matching the dimensionless frequency of the PVC. The temporal reconstruction of the extracted DMD mode, showing the extracted PVC vortex, is illustrated in Figure 2 (right). It shows the iso-surface of the Q-criterion coloured by the radial velocity.
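The DMD step can be illustrated with the widely used "exact DMD" algorithm on synthetic data. The sketch below (serial NumPy, not the parallel RWTH toolkit; the function name `exact_dmd` is illustrative) builds a travelling wave with a known frequency of 2.0 in arbitrary time units and recovers it from the eigenvalues of the reduced linear operator. Multiplying a recovered frequency f by an assumed reference length D and dividing by a reference velocity U would give the Strouhal number Sr = fD/U:

```python
import numpy as np


def exact_dmd(snapshots: np.ndarray, dt: float, rank: int):
    """Exact DMD of equally spaced snapshots (shape: n_points x n_snapshots).

    Returns mode frequencies (cycles per unit time), mode amplitudes,
    the DMD modes and the discrete-time eigenvalues.
    """
    X, Y = snapshots[:, :-1], snapshots[:, 1:]          # Y is X advanced by one step
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U_r, s_r, V_r = U[:, :rank], s[:rank], Vh[:rank].conj().T
    A_tilde = (U_r.conj().T @ Y @ V_r) / s_r             # projected operator times diag(1/s_r)
    eigvals, W = np.linalg.eig(A_tilde)
    modes = ((Y @ V_r) / s_r) @ W                        # exact DMD modes
    frequencies = np.angle(eigvals) / (2.0 * np.pi * dt)
    amplitudes = np.linalg.lstsq(modes, X[:, 0], rcond=None)[0]
    return frequencies, np.abs(amplitudes), modes, eigvals


# Synthetic travelling wave with a known frequency f0 (arbitrary units).
f0, dt = 2.0, 0.05
x = np.linspace(0.0, 2.0 * np.pi, 128)
t = np.arange(200) * dt
snapshots = np.cos(2.0 * np.pi * f0 * t[None, :] - x[:, None])

freqs, amps, modes, eigvals = exact_dmd(snapshots, dt, rank=2)
print(np.abs(freqs))  # both entries close to 2.0
```

Real turbulent data needs a much larger rank, and plotting the returned amplitudes against (non-dimensionalised) frequency gives a spectrum of the kind described for Figure 2 (top).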

Scientific impact of this result:

The Data Exchange & Workflow Portal is a mature solution that gives end users seamless and secure access to high-performance computing resources. What makes it innovative is that it combines know-how in secure data exchange with an HPC platform. This matters because such a combination of know-how provision and secure data exchange between HPC centres and SMEs is unique. The web interface in particular is very easy to use, and many tasks are automated, which simplifies working with HPC as a whole and lowers the entry barrier for engineers new to HPC.

In addition, the data reduction technology enables smarter, faster file transfers. Transfers become much faster when the same data sets are sent repeatedly: if a file is already known to the system, it does not need to be transferred again, and only the changed parts need to be exchanged.
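The article does not describe how the portal detects already-known data. One common approach, assumed here purely for illustration, is block-level deduplication: the file is cut into blocks, each block is identified by a hash, and the sender transmits an ordered manifest of hashes plus only the blocks the receiver has not seen. All function names below are hypothetical.

```python
import hashlib


def split_blocks(data: bytes, size: int) -> list[bytes]:
    """Cut a byte string into fixed-size blocks (the last may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def digest(block: bytes) -> str:
    return hashlib.sha256(block).hexdigest()


def prepare_transfer(data: bytes, receiver_has: set[str], size: int):
    """Sender: ordered manifest of block hashes plus only the unknown blocks."""
    blocks = split_blocks(data, size)
    manifest = [digest(b) for b in blocks]
    missing = {h: b for h, b in zip(manifest, blocks) if h not in receiver_has}
    return manifest, missing


def apply_transfer(manifest: list[str], missing: dict[str, bytes],
                   store: dict[str, bytes]) -> bytes:
    """Receiver: store the new blocks, then reassemble the file in order."""
    store.update(missing)
    return b"".join(store[h] for h in manifest)


store: dict[str, bytes] = {}           # receiver-side block store
v1 = b"AAAABBBBCCCCDDDD"
m1, sent1 = prepare_transfer(v1, set(store), size=4)
assert apply_transfer(m1, sent1, store) == v1

v2 = b"AAAABBBBXXXXDDDD"               # same file with one block changed
m2, sent2 = prepare_transfer(v2, set(store), size=4)
assert apply_transfer(m2, sent2, store) == v2
print(len(sent1), len(sent2))          # 4 1
```

Fixed-size blocks keep the sketch short, but an insertion near the start of a file shifts every later block and defeats the deduplication; rsync's rolling checksums and content-defined chunking are the usual remedies.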

The developed data analytics (modal decomposition) toolkit provides an efficient and user-friendly way to analyse simulation data and extract energetically and dynamically important features, or modes, from complex, high-dimensional flows. To serve a broad user community with different backgrounds and levels of HPC expertise, a server/client structure has been implemented that allows an efficient workflow. In this structure, the actual modal decomposition is performed by the server, which runs in parallel on the HPC cluster and is connected via TCP to the graphical user interface (client) on the user's local machine. To visualise the extracted modes and reconstructed flow fields efficiently without writing large amounts of data to disk, the modal decomposition server can be connected to a ParaView server/client configuration via Catalyst, enabling in-situ visualisation.
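A server/client split needs some way to exchange requests and results over a TCP byte stream. The protocol between the GUI and the modal decomposition server is not specified in the article; the sketch below assumes a simple length-prefixed JSON framing, and the message fields (`command`, `n_modes`, `fields`) are hypothetical.

```python
import json
import socket
import struct


def send_msg(sock: socket.socket, payload: dict) -> None:
    """Send one JSON message prefixed by its 4-byte big-endian length."""
    body = json.dumps(payload).encode("utf-8")
    sock.sendall(struct.pack("!I", len(body)) + body)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """A stream socket may deliver partial data, so read until n bytes arrive."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf += chunk
    return buf


def recv_msg(sock: socket.socket) -> dict:
    """Read the length header, then exactly that many bytes of JSON."""
    (length,) = struct.unpack("!I", _recv_exact(sock, 4))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


# Demo: a connected socket pair stands in for the GUI client and HPC server
client, server = socket.socketpair()
send_msg(client, {"command": "dmd", "n_modes": 10, "fields": ["u", "v", "w", "p"]})
request = recv_msg(server)                      # server side
send_msg(server, {"status": "ok", "n_modes": request["n_modes"]})
reply = recv_msg(client)                        # client side
client.close()
server.close()
print(reply)  # {'status': 'ok', 'n_modes': 10}
```

`socket.socketpair()` is used only so the example runs in one process (on Unix it creates a local, non-TCP pair). In a deployment, the server would listen with `socket.create_server((host, port))` on the cluster and the GUI would connect with `socket.create_connection((host, port))`; the framing functions stay the same.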

Finally, this demonstrator shows an integrated HPC-based solution that can be used for burner design and optimisation using high-fidelity simulations and data analytics through an interactive workflow portal with an efficient data exchange and data transfer strategy.

Benefits for further research:

- Higher HPC customer retention due to a less complex HPC environment

- Reduced HPC complexity thanks to the web front end

- Shorter training phases for inexperienced users and reduced support effort for HPC centres

- Calculations can be started from anywhere with a secure connection

- Time and cost savings due to a high degree of automation that streamlines the process chain

- Efficient and user-friendly post-processing/data analytics

CoEs at Teratec Forum 2021 and ISC21

With the support of FocusCoE, a number of HPC CoEs will give short presentations at the virtual PRACE booth at two HPC events taking place towards the end of this month: Teratec Forum 2021 and ISC 2021. See the schedule below for details. Please reserve the slots in your calendars; registration details will be provided on the PRACE website soon!

“We are happy to see that FocusCoE was able to help the HPC CoEs to have a significant presence at this year’s editions of ISC and Teratec Forum, two major HPC events, enabled through our good synergies with PRACE”, says Guy Lonsdale, FocusCoE coordinator.

Teratec Forum 2021 schedule

| Date / Event | Time slot (CEST) | Title | Speaker | Organisation |
|---|---|---|---|---|
| Tue 22 June | 11:00 – 11:15 | EoCoE-II: Towards exascale for Energy | Edouard Audit, EoCoE-II coordinator | CEA (France) |
| Tue 22 June | 14:30 – 14:45 | POP CoE: Free Performance Assessments for the HPC Community | Bernd Mohr | Jülich Supercomputing Centre |
| Thu 24 June | 13:45 – 14:00 | EXCELLERAT – paving the way for the evolution towards Exascale | Amgad Dessoky / Sophia Honisch | HLRS |

ISC 2021 schedule

| Date / Event | Time slot (CEST) | Title | Speaker | Organisation |
|---|---|---|---|---|
| Thu 24 June | 13:45 – 14:00 | EXCELLERAT – paving the way for the evolution towards Exascale | Amgad Dessoky / Sophia Honisch | HLRS |
| Fri 25 June | 11:00 – 11:15 | The Center of Excellence for Exascale in Solid Earth (ChEESE) | Alice-Agnes Gabriel | Geophysik, University of Munich |
| Fri 25 June | 15:30 – 15:45 | EoCoE-II: Towards exascale for Energy | Edouard Audit, EoCoE-II coordinator | CEA (France) |
| Tue 29 June | 11:00 – 11:15 | Towards a maximum utilization of synergies of HPC Competences in Europe | Bastian Koller | HLRS |
| Wed 30 June | 10:45 – 11:00 | CoE | Dr.-Ing. Andreas Lintermann | Jülich Supercomputing Centre, Forschungszentrum Jülich GmbH |
| Thu 1 July | 11:00 – 11:15 | POP CoE: Free Performance Assessments for the HPC Community | Bernd Mohr | Jülich Supercomputing Centre |
| Thu 1 July | 14:30 – 14:45 | TREX: an innovative view of HPC usage applied to Quantum Monte Carlo simulations | Anthony Scemama (1), William Jalby (2), Cedric Valensi (2), Pablo de Oliveira Castro (2) | (1) Laboratoire de Chimie et Physique Quantiques, CNRS-Université Paul Sabatier, Toulouse, France (2) Université de Versailles St-Quentin-en-Yvelines, Université Paris Saclay, France |

Please register for the short presentations through the PRACE event pages:

| PRACE Virtual booth at Teratec Forum 2021 | PRACE Virtual booth at ISC2021 |

| prace-ri.eu/event/teratec-forum-2021/ | prace-ri.eu/event/praceisc-2021/ |

List of innovations by the CoEs, spotted by the EU Innovation Radar

| Title: Biobb, biomolecular modelling building blocks |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF MANCHESTER – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| FUNDACIO INSTITUT DE RECERCA BIOMEDICA (IRB BARCELONA) – SPAIN |

| Title: GROMACS, a versatile package to perform molecular dynamics |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: HADDOCK, a versatile information-driven flexible docking approach for the modelling of biomolecular complexes |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| UNIVERSITEIT UTRECHT – NETHERLANDS |

| Title: PMX, a versatile (bio-) molecular structure manipulation package |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| Title: Faster Than Real Time (FTRT) environment for high-resolution simulations of earthquake generated tsunamis |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| UNIVERSIDAD DE MALAGA – SPAIN |

| TECHNISCHE UNIVERSITAET MUENCHEN – GERMANY |

| ISTITUTO NAZIONALE DI GEOFISICA E VULCANOLOGIA – ITALY |

| Title: Probabilistic Seismic Hazard Assessment (PSHA) |

| Market maturity: Exploring |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| LUDWIG-MAXIMILIANS-UNIVERSITAET MUENCHEN – GERMANY |

| TECHNISCHE UNIVERSITAET MUENCHEN – GERMANY |

| ISTITUTO NAZIONALE DI GEOFISICA E VULCANOLOGIA – ITALY |

| Title: Urgent Computing services for the impact assessment in the immediate aftermath of an earthquake |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| BULL SAS – FRANCE |

| Title: Alya, HemeLB, HemoCell, OpenBF, Palabos-Vertebroplasty simulator, Palabos – Flow Diverter Simulator, BAC, HTMD, Playmolecule, Virtual Assay, CT2S, Insigneo Bone Tissue Suite |

| Market maturity: Exploring |

| Project: CompBioMed |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| UNIVERSITY COLLEGE LONDON – UNITED KINGDOM |

| ACELLERA LABS SL – SPAIN |

| Title: E-CAM software repository |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE – SWITZERLAND |

| UNIVERSITY COLLEGE DUBLIN, NATIONAL UNIVERSITY OF IRELAND, DUBLIN – IRELAND |

| UNITED KINGDOM RESEARCH AND INNOVATION – UNITED KINGDOM |

| Title: E-CAM Training offer on effective use of HPC simulations in quantum chemistry |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE – SWITZERLAND |

| UNIVERSITY COLLEGE DUBLIN, NATIONAL UNIVERSITY OF IRELAND, DUBLIN – IRELAND |

| FREIE UNIVERSITAET BERLIN – GERMANY |

| Title: Improved Simulation Software Packages for Molecular Dynamics |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| SCIENCE AND TECHNOLOGY FACILITIES COUNCIL – UNITED KINGDOM |

| TECHNISCHE UNIVERSITAET WIEN – AUSTRIA |

| UNIVERSITEIT VAN AMSTERDAM – NETHERLANDS |

| Title: Improved software modules for meso- and multi-scale modelling |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| FREIE UNIVERSITAET BERLIN – GERMANY |

| Title: Code auditing, optimization and performance assessment services for energy-oriented HPC simulations |

| Market maturity: Exploring |

| Project: EoCoE |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Consultancy Services for using HPC Simulations for Energy related applications |

| Market maturity: Exploring |

| Project: EoCoE |

| Innovation Topic: Excellent Science |

| COMMISSARIAT A L ENERGIE ATOMIQUE ET AUX ENERGIES ALTERNATIVES – FRANCE |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: New coupled earth system model |

| Market maturity: Tech Ready |

| Project: ESiWACE |

| Innovation Topic: Excellent Science |

| BULL SAS – FRANCE |

| MET OFFICE – UNITED KINGDOM |

| EUROPEAN CENTRE FOR MEDIUM-RANGE WEATHER FORECASTS – UNITED KINGDOM |

| Title: AMR capability and Accelerated Computing in AVBP code |

| Market maturity: Exploring |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| CERFACS CENTRE EUROPEEN DE RECHERCHE ET DE FORMATION AVANCEE EN CALCUL SCIENTIFIQUE SOCIETE CIVILE – FRANCE |

| Title: AMR capability in Alya code to enable advanced simulation services for engineering |

| Market maturity: Tech Ready |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: Data Exchange & Workflow Portal: secure, fast and traceable online data transfer between data generators and HPC centers |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: FPGA Acceleration for Exascale Applications based on ALYA and AVBP codes |

| Market maturity: Tech Ready |

| Market creation potential: Noteworthy |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: Open source mode decomposition toolkit for exascale data analysis |

| Market maturity: Tech Ready |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| RHEINISCH-WESTFAELISCHE TECHNISCHE HOCHSCHULE AACHEN – GERMANY |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| SSC SERVICES GMBH – GERMANY |

| Title: Use of GASPI to improve performance of CODA |

| Market maturity: Tech Ready |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: GPU acceleration in TPLS to exploit the GPU-based architectures for Exascale |

| Market maturity: Tech Ready |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: In-Situ Analysis of CFD Simulations |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Interactive in situ visualization in VR |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| UNIVERSITY OF STUTTGART – GERMANY |

| Title: Machine Learning Methods for Computational Fluid Dynamics (CFD) Data |

| Market maturity: Tech Ready |

| Market creation potential: Noteworthy |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Parallel I/O in TPLS using PETSc library |

| Market maturity: Tech Ready |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| RHEINISCH-WESTFAELISCHE TECHNISCHE HOCHSCHULE AACHEN – GERMANY |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: Highly scalable Material Science Simulation Codes |

| Market maturity: Exploring |

| Project: MaX |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| E4 COMPUTER ENGINEERING SPA – ITALY |

| Title: Quantum Simulation as a Service |

| Market maturity: Exploring |

| Market creation potential: Noteworthy |

| Project: MaX |

| Innovation Topic: Excellent Science |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| CINECA CONSORZIO INTERUNIVERSITARIO – ITALY |

| Title: Simulation Code Optimisation and Scaling |

| Market maturity: Exploring |

| Project: MaX |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| Title: Novel Materials Discovery (NOMAD) Repository |

| Market maturity: Business Ready |

| Project: NoMaD |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| HUMBOLDT-UNIVERSITAET ZU BERLIN – GERMANY |

| BAYERISCHE AKADEMIE DER WISSENSCHAFTEN – GERMANY |

| Title: NOMAD Encyclopedia Service: allows users to see, compare, explore, and understand computed materials data |

| Market maturity: Tech Ready |

| Project: NoMaD |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| HUMBOLDT-UNIVERSITAET ZU BERLIN – GERMANY |

| Title: An ICT platform prototype for systematically tracing public sentiment, its evolution towards a policy, its components and arguments linked to them. |

| Market maturity: Tech Ready |

| Project: NOMAD |

| Innovation Topic: Smart & Sustainable Society |

| ATHENS TECHNOLOGY CENTER ANONYMI BIOMICHANIKI EMPORIKI KAI TECHNIKI ETAIREIA EFARMOGON YPSILIS TECHNOLOGIAS – GREECE |

| NATIONAL CENTER FOR SCIENTIFIC RESEARCH “DEMOKRITOS” – GREECE |

| CRITICAL PUBLICS LTD – UNITED KINGDOM |

| Title: Customer-Specific Performance Analysis for Parallel Codes |

| Market maturity: Exploring |

| Project: POP |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| UNIVERSITAET STUTTGART – GERMANY |

| Title: Repository of powerful tools (codes, pattern/best-practice descriptions, experimental results) for the HPC community |

| Market maturity: Exploring |

| Project: POP |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| TERATEC – FRANCE |

Video Of The Week: EXCELLERAT Use Case Simulations

Watch The Presentations Of The First CoE Joint Technical Workshop

Watch the recordings of the presentations from the first technical CoE workshop. The virtual event was organised by three HPC Centres of Excellence: ChEESE, EXCELLERAT and HiDALGO. The workshop agenda was structured into these four sessions:

Session 1: Load balancing

Session 2: In situ and remote visualisation

Session 3: Co-Design

Session 4: GPU Porting

You can also download a PDF version of each recorded presentation. The workshop took place on January 27–29, 2021.

Session 1: Load balancing

Title: Introduction by chairperson

Speaker: Ricard Borell (BSC)

Title: Load balancing strategies used in AVBP

Speaker: Gabriel Staffelbach (CERFACS)

Title: Addressing load balancing challenges due to fluctuating performance and non-uniform workload in SeisSol and ExaHyPE

Speaker: Michael Bader (TUM)

Title: On Discrete Load Balancing with Diffusion Type Algorithms

Speaker: Robert Elsäßer (PLUS)

Session 2: In situ and remote visualisation

Title: Introduction by chairperson

Speaker: Lorenzo Zanon & Anna Mack (HLRS)

Title: An introduction to the use of in-situ analysis in HPC

Speaker: Miguel Zavala (KTH)

Title: In situ visualisation service in Prace6IP

Speaker: Simone Bnà (CINECA)

Title: Web-based Visualisation of air pollution simulation with COVISE

Speaker: Anna Mack (HLRS)

Title: Virtual Twins, Smart Cities and Smart Citizens

Speaker: Leyla Kern, Uwe Wössner, Fabian Dembski (HLRS)

Session 3: Co-Design

Title: Introduction by chairperson, and Excellerat’s Co-Design Methodology

Speaker: Gavin Pringle (EPCC)

Title: Accelerating codes on reconfigurable architectures

Speaker: Nick Brown (EPCC)

Title: Benchmarking of Current Architectures for Improvements

Speaker: Nikela Papadopoulou (ICCS)

Title: Example Co-design Approach with the SeisSol and Specfem3D Practical cases

Speaker: Georges-Emmanuel Moulard (ATOS)

Title: Exploitation of Exascale Systems for Open-Source Computational Fluid Dynamics by Mainstream Industry

Speaker: Ivan Spisso (CINECA)

Session 4: GPU Porting

Title: Introduction

Speaker: Giorgio Amati (CINECA)

Title: GPU Porting and strategies by ChEESE

Speaker: Piero Lanucara (CINECA)

Title: GPU porting by third party library

Speaker: Simone Bnà (CINECA)