Optimization of Earth System Models on the path to the new generation of Exascale high-performance computing systems

A Use Case by

Short description

In recent years, our understanding of climate prediction has grown and deepened significantly, facilitated by improvements to global Earth System Models (ESMs). These models aim to represent future climate and weather ever more realistically, reducing uncertainties in these chaotic systems and explicitly calculating and representing features that could not previously be resolved at coarser resolutions.

A new generation of exascale supercomputers and massive parallelization are needed to calculate small-scale processes and features with high-resolution climate and weather models. However, the overhead introduced by this massive parallelization will be dramatic, and new high-performance computing (HPC) techniques will be required to rise to the challenge. These techniques will enable scientists to make efficient use of upcoming exascale machines, to set up ultra-high-resolution experiment configurations of ESMs, and to run the corresponding simulations. Such configurations will be used to predict climate change over the next decades and to study extreme events like hurricanes.

Results & Achievements

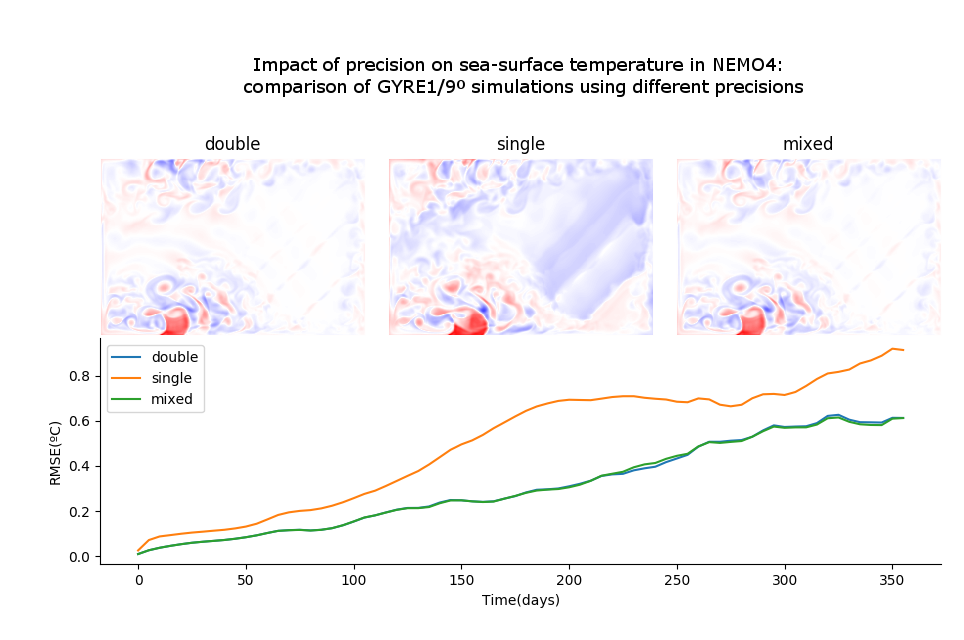

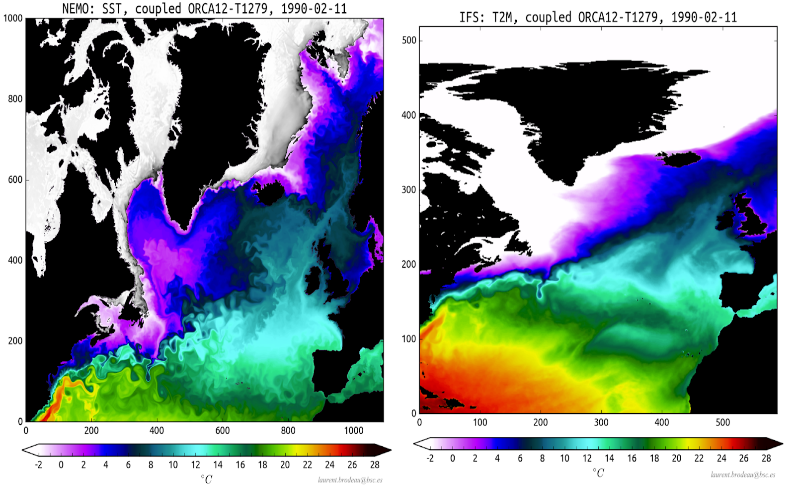

The main components (OpenIFS and NEMO) of the new EC-Earth version in development are being tested on MareNostrum IV at the new ultra-high horizontal resolution of 10 km, using up to 2048 nodes (98,304 cores) for the NEMO component and up to 1024 nodes (49,152 cores) for the OpenIFS component.

Different optimizations included in these components, developed within the ESiWACE and ESiWACE2 projects, have been tested to evaluate the computational efficiency achieved. For example, the OpenIFS version with the new integrated parallel I/O can write hundreds of gigabytes of output while the execution time increases by only 2% compared to a run without I/O. This is much better than the previous version, which produced an overhead close to 50%. Moreover, this approach will allow the same I/O server to be used for both components, facilitating more complex online computations and the use of a common file format (netCDF).

Preliminary results with the new mixed-precision version integrated in NEMO show an improvement of almost 40% in execution time, without any loss of accuracy in the simulation results.
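The percentages above are simple ratios of run times. The following Python sketch shows one way such figures can be computed. The function names and all timings are hypothetical placeholders chosen to reproduce the quoted percentages; they are not measurements from OpenIFS or NEMO. Only the node and core counts are taken from the text.

```python
# Illustrative arithmetic only. The run times used below are hypothetical
# placeholders, not measurements from EC-Earth, OpenIFS, or NEMO.


def cores_per_node(total_cores: int, nodes: int) -> int:
    """Cores per node implied by a (cores, nodes) pair."""
    if total_cores % nodes != 0:
        raise ValueError("core count is not a multiple of node count")
    return total_cores // nodes


def relative_overhead(t_with_io: float, t_without_io: float) -> float:
    """Fractional increase in run time caused by switching output on."""
    return (t_with_io - t_without_io) / t_without_io


def time_reduction(t_before: float, t_after: float) -> float:
    """Fractional reduction in run time after an optimization."""
    return (t_before - t_after) / t_before


if __name__ == "__main__":
    # Node and core counts quoted in the text.
    print(cores_per_node(98_304, 2048))  # 48
    print(cores_per_node(49_152, 1024))  # 48

    # Hypothetical run times in seconds.
    t_no_io = 1000.0
    print(f"{relative_overhead(1020.0, t_no_io):.0%}")  # 2%
    print(f"{relative_overhead(1500.0, t_no_io):.0%}")  # 50%
    print(f"{time_reduction(1000.0, 600.0):.0%}")       # 40%
```

Both configurations imply the same 48 cores per node, which is a quick consistency check on the quoted node and core counts.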

Objectives

EC-Earth is a global Earth System Model used in 11 countries by up to 24 meteorological or academic institutions to produce reliable climate predictions and climate projections. It is composed of different components, the most important being the atmospheric model OpenIFS and the ocean model NEMO.

EC-Earth is one of the ESMs that suffer from a lack of scalability at higher resolutions, and it urgently needs improvements in capability and capacity on the path to exascale. Our main goal is to achieve good scalability of EC-Earth at horizontal resolutions of up to 10 km with extreme parallelization. To achieve this, the following objectives are being pursued:

(1) Computational profiling analysis of EC-Earth, analysing the most severe bottlenecks of the main components when extreme parallelization is used.

(2) Exploiting high-end architectures efficiently, reducing the energy consumption of the model and reaching a minimum level of efficiency so that it is ready for the new hardware. For this purpose, different HPC techniques are being applied, for example the integration of fully parallel input and output (I/O), or a reduction in the precision of some model variables that maintains the accuracy of the results while improving the final execution time of the model.

(3) Evaluating whether massively parallel execution and the newly implemented methods could affect the quality of the simulations or impair reproducibility.
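To illustrate the kind of check implied by reducing the precision of some variables, here is a toy Python sketch that accumulates the same values in double precision and in emulated single precision, then compares both against an accurately rounded reference. This is not the method used in NEMO or EC-Earth: the helper names, the test data, and the tolerance are assumptions made purely for illustration.

```python
import math
import struct


def to_single(x: float) -> float:
    """Round a double-precision Python float to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def accumulate(values, single: bool) -> float:
    """Sum values, optionally rounding every intermediate result to single precision."""
    total = 0.0
    for v in values:
        if single:
            total = to_single(total + to_single(v))
        else:
            total += v
    return total


def relative_error(approx: float, reference: float) -> float:
    """Relative deviation of approx from a non-zero reference value."""
    return abs(approx - reference) / abs(reference)


def precision_is_acceptable(values, tolerance: float) -> bool:
    """True if a single-precision sum stays within tolerance of the reference sum."""
    reference = math.fsum(values)  # accurately rounded double-precision sum
    return relative_error(accumulate(values, single=True), reference) <= tolerance


if __name__ == "__main__":
    data = [0.1] * 100_000  # hypothetical stand-in for a model variable
    reference = math.fsum(data)
    print("double error:", relative_error(accumulate(data, single=False), reference))
    print("single error:", relative_error(accumulate(data, single=True), reference))
    # The tolerance is an arbitrary illustrative threshold.
    print("single precision acceptable:", precision_is_acceptable(data, 1e-6))
```

In this toy case the single-precision sum drifts much further from the reference than the double-precision sum, which is why a long accumulation like this one would be a poor candidate for reduced precision, whereas variables whose error stays within the chosen tolerance could be demoted safely.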

Use Case Owner

Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS)

Mario Acosta: mario.acosta@bsc.es

Kim Serradell: kim.serradell@bsc.es