
Mitigating the Impacts of Climate Change: How EU HPC Centres of Excellence Are Meeting the Challenge

“The past seven years are on track to be the seven warmest on record,” according to the World Meteorological Organization. Furthermore, the earth is already experiencing the extreme weather consequences of a warmer planet, in the form of record snow in Madrid, record flooding in Germany and record wildfires in Greece in 2021 alone. EU HPC Centres of Excellence (CoEs) help to address current societal challenges like the Covid-19 pandemic, but you might wonder: what can the EU HPC CoEs do about climate change? For some CoEs, the answer is fairly obvious. However, just as with Covid-19, the contributions of other CoEs may surprise you!

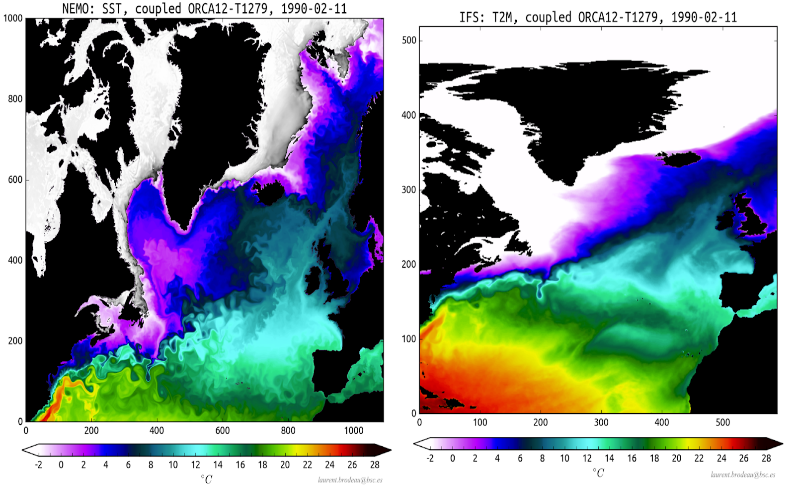

Given that rates of extreme weather events are already increasing, what can EU HPC CoEs do to help today? The Centre of Excellence in Simulation of Weather and Climate in Europe (ESiWACE) is optimizing weather and climate simulations for the latest HPC systems so that they are fast and accurate enough to predict specific extreme weather events. These increasingly detailed climate models can help policy makers make more informed decisions by “forecasting” the simulated long-term consequences of each decision, ultimately saving lives. Beyond this software development, ESiWACE also supports the wider uptake of these more powerful simulations through training events, large-scale use case collaborations, and direct software support for related projects.

Even setting aside extreme weather and long-term consequences, climate change has other negative impacts on daily life. For example, the World Health Organization states that air pollution increases rates of “stroke, heart disease, lung cancer, and both chronic and acute respiratory diseases, including asthma.” The HPC and Big Data Technologies for Global Systems Centre of Excellence (HiDALGO) exists to provide the computational and data analytics environment needed to tackle global problems on this scale. Its Urban Air Pollution Pilot, for example, can forecast air pollution levels at a resolution of down to two meters, based on traffic patterns and 3D geographical information about a city. Armed with this information and the ability to test mitigations virtually, policy makers are empowered to make more informed and effective decisions, just as with HiDALGO’s Covid-19 modelling.

What does MAterials design at the eXascale (MaX) have to do with climate change? Among other things, MaX is dramatically speeding up the search for materials that make lithium-ion batteries more efficient, safer, and smaller: a field of study that has had little success despite decades of searching. The otherwise largely manual process of finding new candidate materials moves far faster when conducted computationally on HPC systems. Using HPC also lets human researchers focus their experiments on only the most promising material candidates.

Continuing with the theme of materials discovery, did you know that it is possible to “capture” CO2 from the atmosphere? We already have the technology to take this greenhouse gas out of our air and store it in materials that keep it from further warming the planet. These materials could even become a new source of fuel, almost like a man-made, renewable oil. The reason this isn’t yet part of the solution to climate change is that the process is too slow. In response, the Novel Materials Discovery Centre of Excellence (NoMaD CoE) is working on finding catalysts to speed up carbon capture. Their recent success story about a publication in Nature describes how they have used HPC and AI to identify the “genes” of materials that could make efficient carbon-capture catalysts. In our race against the limited time we have to prevent the worst impacts of climate change, the kind of HPC-facilitated efficiency boost experienced by MaX and NoMaD could be critical.

Once one considers the need for efficiency, it becomes clear what the Centre of Excellence for engineering applications EXCELLERAT might be able to offer. Like all of the EU HPC CoEs, EXCELLERAT is working to prepare software to run on the next generation of supercomputers. This preparation is vital because these computers will use a mixture of processor types organized in a variety of architectures. Although this variety makes the machines themselves more flexible and powerful, it also demands increased flexibility from the software that runs on them. For example, the software will need to be able to change dynamically how work is distributed among processors, depending on how many processors, and of what kind, a specific supercomputer has. Without this ability, the software will run at the same speed no matter how big, fast, or powerful the computer is, as if it only knows how to work with a team of 5 despite having a team of 20. Hence, EXCELLERAT is preparing engineering simulation software to work efficiently on any given machine. This kind of simulation software makes it possible to design new airplanes more rapidly for characteristics such as a shape with less drag and better fuel efficiency, less noise pollution, and easier recycling of materials once the plane is too old to use.
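To make the “team of 5 versus team of 20” idea more concrete, here is a minimal Python sketch of one generic way to adapt work distribution to the hardware: a fixed number of work units is split across processors in proportion to integer weights (standing in for relative throughput), with largest-remainder rounding so the shares always add up to the total. The function name `distribute_work`, the processor names and the weights are hypothetical illustrations, not EXCELLERAT’s actual method.

```python
from typing import Dict


def distribute_work(total_units: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Split total_units across processors in proportion to integer weights.

    Hypothetical helper for illustration: each weight stands for the relative
    throughput of one processor. Largest-remainder rounding guarantees that
    the shares sum exactly to total_units.
    """
    total_weight = sum(weights.values())
    if total_units < 0 or total_weight <= 0 or any(w < 0 for w in weights.values()):
        raise ValueError("need non-negative units and weights, with a positive total weight")

    shares: Dict[str, int] = {}
    remainders: Dict[str, int] = {}
    for name, weight in weights.items():
        # Exact integer floor of the ideal share, plus what was left over.
        shares[name], remainders[name] = divmod(total_units * weight, total_weight)

    # Units lost to flooring go to the processors with the largest remainders
    # (ties keep the original insertion order, because sorted() is stable).
    leftover = total_units - sum(shares.values())
    for name in sorted(weights, key=lambda n: remainders[n], reverse=True)[:leftover]:
        shares[name] += 1
    return shares


if __name__ == "__main__":
    # The same job on a small and a large machine (hypothetical sizes):
    small = distribute_work(100, {f"cpu{i}": 1 for i in range(5)})
    large = distribute_work(100, {f"cpu{i}": 1 for i in range(20)})
    print(max(small.values()), max(large.values()))  # 20 5
    # A heterogeneous node where the GPU is assumed to be 4x faster than the CPU:
    print(distribute_work(100, {"cpu": 1, "gpu": 4}))  # {'cpu': 20, 'gpu': 80}
```

With 20 equal processors each one handles 5 units instead of 20, which is the speed-up the analogy describes. A genuinely dynamic scheme would recompute the weights at run time (for example, from measured timings) rather than fixing them in advance.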

Another CoE using HPC efficiency to make our world more sustainable is the Centre of Excellence for Combustion (CoEC). Focused exclusively on combustion simulation, they are working to discover new non-carbon or low-carbon fuels and more sustainable ways of burning them. Until now, the primary barrier to this kind of research has been the computing limitations of HPC systems, which could not support realistically detailed simulations. Only with the capacity of the latest and future machines will researchers finally be able to run simulations accurate enough for practical advances.

Beyond the pursuit of more sustainable combustion, the Energy Oriented Centre of Excellence (EoCoE) is boosting the efficiency of entirely different energy sources. In the realm of Wind for Energy, its simulations designed for the latest HPC systems have increased the size of simulated wind farms from 5 to 40 square kilometres, which allows researchers and industry to understand far better the impact of land terrain and wind turbine placement. EoCoE is also working beyond established wind energy technology to help design an entirely new kind of wind turbine.

Turning to solar energy, the EoCoE Materials for Energy group is finding new materials to improve the efficiency of solar cells, and is separately working on materials to harvest energy from the mixing of salt and fresh water in estuaries. Meanwhile, the Water for Energy group is improving the modelling of groundwater movement to enable more efficient positioning of geothermal wells, and the Fusion for Energy group is working to improve the accuracy of models that predict fusion energy output.

EoCoE is also developing simulations to support Meteorology for Energy, including the ability to predict wind and solar power capacity in Europe. Unlike our normal daily forecast, energy forecasts need to calculate the impact of fog or cloud thickness on solar cells, and of wind fluctuations caused by extreme temperature shifts or storms on wind turbines. Without this more advanced form of weather forecasting, it is not feasible for these renewable but variable energy sources to make up a large share of the power supplied to our fluctuation-sensitive grids. Before we can rely on wind and solar power, it will be essential to predict renewable energy output in time to make adjustments or to supplement it with alternative energy sources, especially in light of the previously mentioned increase in extreme weather events.

Suffice it to say that climate change poses a variety of enormous challenges. The above describes only some of the work EU HPC CoEs are already doing and none of what they may be able to do in the future! For instance, HiDALGO also has a migration modelling program currently designed to help policy makers divert resources most effectively to migrations caused by conflict. However, similar principles could theoretically be employed in combination with weather modelling like that done by ESiWACE to create a climate migration model. Where expertise meets collaboration, the possibilities are endless! Make sure to follow the links above and our social media handles below to stay up to date on EU HPC CoE activities.

FocusCoE at EuroHPC Summit Week 2022

With the support of the FocusCoE project, almost all European HPC Centres of Excellence (CoEs) once again participated in the EuroHPC Summit Week (EHPCSW), held this year in Paris, France: the first in-person EHPCSW since the 2019 event in Poland. Hosted by the French HPC agency Grand équipement national de calcul intensif (GENCI), the conference was organised by the Partnership for Advanced Computing in Europe (PRACE), the European Technology Platform for High-Performance Computing (ETP4HPC), the EuroHPC Joint Undertaking (EuroHPC JU), and the European Commission (EC). As usual, this year’s event gathered the main European HPC stakeholders, from technology suppliers and HPC infrastructures to scientific and industrial HPC users in Europe.

At the workshop on the European HPC ecosystem on Tuesday 22 March at 14:45, where the diversity of the ecosystem was presented around the Infrastructure, Applications, and Technology pillars, project coordinator Dr. Guy Lonsdale from Scapos talked about FocusCoE and the CoEs’ common goal.

Later that day, from 16:30 to 18:00, the FocusCoE project hosted a session titled “European HPC CoEs: perspectives for a healthy HPC application eco-system and Exascale”, involving most of the EU CoEs. The session discussed the key role of CoEs in the EuroHPC application pillar, focussing on their impact on building a vibrant, healthy HPC application eco-system and on perspectives for Exascale applications. As Dr. Andreas Wierse described on behalf of EXCELLERAT, “The development is continuous. To prepare companies to make good use of this technology, it’s important to start early. Our task is to ensure continuity from using small systems up to the Exascale, regardless of whether the user comes from a big company or from an SME”.

Attendees from HPC-related academia and industry also showed keen interest in the agenda, filling the hall to standing room only. Given the call for new EU HPC Centres of Excellence and the growing return to in-person events like EHPCSW, this strong interest in preparing the EU for Exascale bodes well for the future.

FocusCoE Hosts Intel oneAPI Workshop for the EU HPC CoEs

On March 2, 2022, FocusCoE hosted Intel for a workshop introducing the oneAPI development environment. In all, over 40 researchers representing the EU HPC Centres of Excellence (CoEs) attended the single-day workshop to gain an overview of oneAPI. Throughout the day, eight presenters from Intel covered the oneAPI vision, design, and toolkits, a use case with GROMACS (which is already used by some of the EU HPC CoEs), and specific tools for migration and debugging.

Launched in 2019, Intel’s oneAPI, a cross-industry, open, standards-based unified programming model, is being designed to deliver a common developer experience across accelerator architectures. By reducing the time spent designing for specific accelerators, oneAPI is intended to enable faster application performance, greater productivity, and more innovation. As summarized on Intel’s oneAPI website, “Apply your skills to the next innovation, and not to rewriting software for the next hardware platform.” Given the work that EU HPC CoEs are currently doing to optimise codes for Exascale HPC systems, any tools that make this process faster and more efficient can only boost the CoEs’ capacity for innovation and their preparedness for future heterogeneous systems.

The oneAPI industry initiative also encourages collaboration on the oneAPI specification and compatible oneAPI implementations. To that end, Intel is investing time and expertise in events like this workshop to give researchers the knowledge they need not only to use oneAPI but also to help improve it. The presenters also remain available after the workshop to answer attendees’ questions on an ongoing basis. Throughout our event, participants could ask questions and get real-time answers, as well as offers of further support, from software architects, technical consulting engineers, and the researcher who presented the use case. Lastly, the full video and slides from the presentations are available below for any CoEs who were unable to attend or would like a second look at the detailed presentations.

CoEs at Teratec Forum 2021 and ISC21

With the support of FocusCoE, a number of HPC CoEs will give short presentations at the virtual PRACE booth at two HPC-related events taking place towards the end of this month: Teratec Forum 2021 and ISC 2021. See the schedule below for more details. Please reserve the slots in your calendars; registration details will be provided on the PRACE website soon!

“We are happy to see that FocusCoE was able to help the HPC CoEs to have a significant presence at this year’s editions of ISC and Teratec Forum, two major HPC events, enabled through our good synergies with PRACE”, says Guy Lonsdale, FocusCoE coordinator.

Teratec Forum 2021 schedule

| Date | Time slot (CEST) | Title | Speaker | Organisation |
|---|---|---|---|---|
| Tue 22 June | 11:00 – 11:15 | EoCoE-II: Towards exascale for Energy | Edouard Audit, EoCoE-II coordinator | CEA (France) |
| Tue 22 June | 14:30 – 14:45 | POP CoE: Free Performance Assessments for the HPC Community | Bernd Mohr | Jülich Supercomputing Centre |
| Thu 24 June | 13:45 – 14:00 | EXCELLERAT – paving the way for the evolution towards Exascale | Amgad Dessoky / Sophia Honisch | HLRS |

ISC 2021 schedule

| Date | Time slot (CEST) | Title | Speaker | Organisation |
|---|---|---|---|---|
| Thu 24 June | 13:45 – 14:00 | EXCELLERAT – paving the way for the evolution towards Exascale | Amgad Dessoky / Sophia Honisch | HLRS |
| Fri 25 June | 11:00 – 11:15 | The Center of Excellence for Exascale in Solid Earth (ChEESE) | Alice-Agnes Gabriel | Geophysik, University of Munich |
| Fri 25 June | 15:30 – 15:45 | EoCoE-II: Towards exascale for Energy | Edouard Audit, EoCoE-II coordinator | CEA (France) |
| Tue 29 June | 11:00 – 11:15 | Towards a maximum utilization of synergies of HPC Competences in Europe | Bastian Koller, HLRS | HLRS |
| Wed 30 June | 10:45 – 11:00 | CoE | Dr.-Ing. Andreas Lintermann | Jülich Supercomputing Centre, Forschungszentrum Jülich GmbH |
| Thu 1 July | 11:00 – 11:15 | POP CoE: Free Performance Assessments for the HPC Community | Bernd Mohr | Jülich Supercomputing Centre |
| Thu 1 July | 14:30 – 14:45 | TREX: an innovative view of HPC usage applied to Quantum Monte Carlo simulations | Anthony Scemama (1), William Jalby (2), Cedric Valensi (2), Pablo de Oliveira Castro (2) | (1) Laboratoire de Chimie et Physique Quantiques, CNRS-Université Paul Sabatier, Toulouse, France (2) Université de Versailles St-Quentin-en-Yvelines, Université Paris Saclay, France |

Please register for the short presentations through the PRACE event pages:

| PRACE virtual booth at Teratec Forum 2021 | PRACE virtual booth at ISC 2021 |
|---|---|
| prace-ri.eu/event/teratec-forum-2021/ | prace-ri.eu/event/praceisc-2021/ |

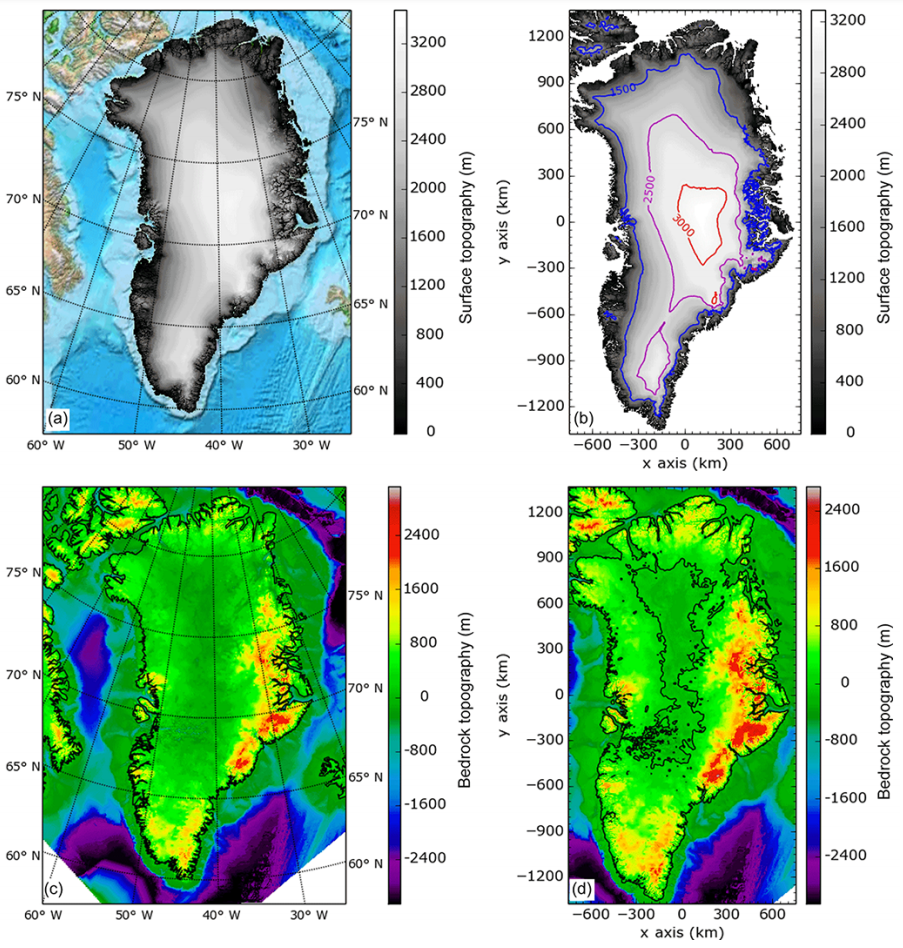

OBLIMAP ice sheet model coupler parallelization and optimization

Collaborating Institutions

KNMI

Atos

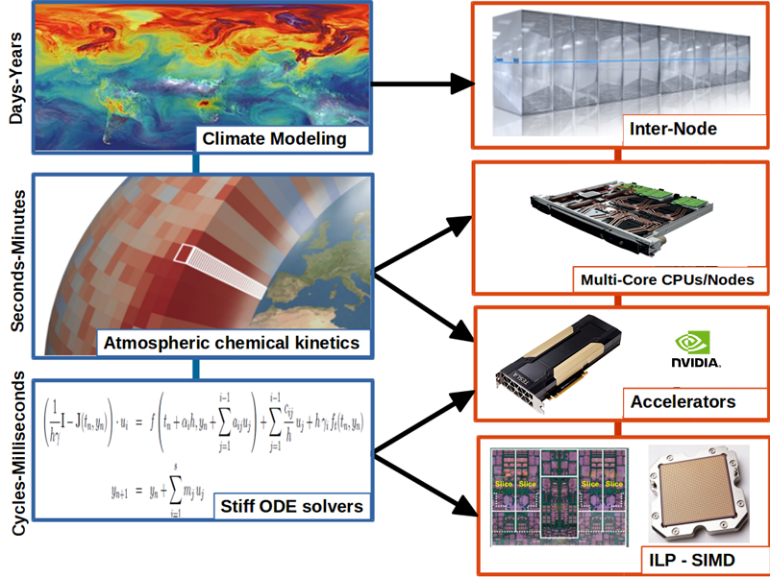

GPU Optimizations for Atmospheric Chemical Kinetics

Technologies

EMAC (ECHAM/MESSy) code

CUDA

MPI

NVIDIA GPU accelerator

Atos BullSequana XH2000 supercomputer

List of CoE innovations spotted by the EU Innovation Radar

| Title: Biobb, biomolecular modelling building blocks |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF MANCHESTER – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| FUNDACIO INSTITUT DE RECERCA BIOMEDICA (IRB BARCELONA) – SPAIN |

| Title: GROMACS, a versatile package to perform molecular dynamics |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: HADDOCK, a versatile information-driven flexible docking approach for the modelling of biomolecular complexes |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| UNIVERSITEIT UTRECHT – NETHERLANDS |

| Title: PMX, a versatile (bio)molecular structure manipulation package |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| Title: Faster Than Real Time (FTRT) environment for high-resolution simulations of earthquake generated tsunamis |

| Market maturity: Tech ready |

| Market creation potential: High |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| UNIVERSIDAD DE MALAGA – SPAIN |

| TECHNISCHE UNIVERSITAET MUENCHEN – GERMANY |

| ISTITUTO NAZIONALE DI GEOFISICA E VULCANOLOGIA – ITALY |

| Title: Probabilistic Seismic Hazard Assessment (PSHA) |

| Market maturity: Exploring |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| LUDWIG-MAXIMILIANS-UNIVERSITAET MUENCHEN – GERMANY |

| TECHNISCHE UNIVERSITAET MUENCHEN – GERMANY |

| ISTITUTO NAZIONALE DI GEOFISICA E VULCANOLOGIA – ITALY |

| Title: Urgent Computing services for the impact assessment in the immediate aftermath of an earthquake |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| BULL SAS – FRANCE |

| Title: Alya, HemeLB, HemoCell, OpenBF, Palabos-Vertebroplasty simulator, Palabos – Flow Diverter Simulator, BAC, HTMD, Playmolecule, Virtual Assay, CT2S, Insigneo Bone Tissue Suite |

| Market maturity: Exploring |

| Project: CompBioMed |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| UNIVERSITY COLLEGE LONDON – UNITED KINGDOM |

| ACELLERA LABS SL – SPAIN |

| Title: E-CAM software repository |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE – SWITZERLAND |

| UNIVERSITY COLLEGE DUBLIN, NATIONAL UNIVERSITY OF IRELAND, DUBLIN – IRELAND |

| UNITED KINGDOM RESEARCH AND INNOVATION – UNITED KINGDOM |

| Title: E-CAM Training offer on effective use of HPC simulations in quantum chemistry |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE – SWITZERLAND |

| UNIVERSITY COLLEGE DUBLIN, NATIONAL UNIVERSITY OF IRELAND, DUBLIN – IRELAND |

| FREIE UNIVERSITAET BERLIN – GERMANY |

| Title: Improved Simulation Software Packages for Molecular Dynamics |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| SCIENCE AND TECHNOLOGY FACILITIES COUNCIL – UNITED KINGDOM |

| TECHNISCHE UNIVERSITAET WIEN – AUSTRIA |

| UNIVERSITEIT VAN AMSTERDAM – NETHERLANDS |

| Title: Improved software modules for meso- and multi-scale modelling |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| FREIE UNIVERSITAET BERLIN – GERMANY |

| Title: Code auditing, optimization and performance assessment services for energy-oriented HPC simulations |

| Market maturity: Exploring |

| Project: EoCoE |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Consultancy Services for using HPC Simulations for Energy related applications |

| Market maturity: Exploring |

| Project: EoCoE |

| Innovation Topic: Excellent Science |

| COMMISSARIAT A L ENERGIE ATOMIQUE ET AUX ENERGIES ALTERNATIVES – FRANCE |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: New coupled earth system model |

| Market maturity: Tech Ready |

| Project: ESiWACE |

| Innovation Topic: Excellent Science |

| BULL SAS – FRANCE |

| MET OFFICE – UNITED KINGDOM |

| EUROPEAN CENTRE FOR MEDIUM-RANGE WEATHER FORECASTS – UNITED KINGDOM |

| Title: AMR capability and Accelerated Computing in AVBP code |

| Market maturity: Exploring |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| CERFACS CENTRE EUROPEEN DE RECHERCHE ET DE FORMATION AVANCEE EN CALCUL SCIENTIFIQUE SOCIETE CIVILE – FRANCE |

| Title: AMR capability in Alya code to enable advanced simulation services for engineering |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: Data Exchange & Workflow Portal: secure, fast and traceable online data transfer between data generators and HPC centers |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: FPGA Acceleration for Exascale Applications based on ALYA and AVBP codes |

| Market maturity: Tech Ready |

| Market creation potential: Noteworthy |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: Open source mode decomposition toolkit for exascale data analysis |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| RHEINISCH-WESTFAELISCHE TECHNISCHE HOCHSCHULE AACHEN – GERMANY |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| SSC SERVICES GMBH – GERMANY |

| Title: Use of GASPI to improve performance of CODA |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: GPU acceleration in TPLS to exploit the GPU-based architectures for Exascale |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: In-Situ Analysis of CFD Simulations |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Interactive in situ visualization in VR |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| UNIVERSITY OF STUTTGART – GERMANY |

| Title: Machine Learning Methods for Computational Fluid Dynamics (CFD) Data |

| Market maturity: Tech Ready |

| Market creation potential: Noteworthy |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Parallel I/O in TPLS using PETSc library |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| RHEINISCH-WESTFAELISCHE TECHNISCHE HOCHSCHULE AACHEN – GERMANY |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: Highly scalable Material Science Simulation Codes |

| Market maturity: Exploring |

| Project: MaX |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| E4 COMPUTER ENGINEERING SPA – ITALY |

| Title: Quantum Simulation as a Service |

| Market maturity: Exploring |

| Market creation potential: Noteworthy |

| Project: MaX |

| Innovation Topic: Excellent Science |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| CINECA CONSORZIO INTERUNIVERSITARIO – ITALY |

| Title: Simulation Code Optimisation and Scaling |

| Market maturity: Exploring |

| Project: MaX |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| Title: Novel Materials Discovery (NOMAD) Repository |

| Market maturity: Business Ready |

| Project: NoMaD |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| HUMBOLDT-UNIVERSITAET ZU BERLIN – GERMANY |

| BAYERISCHE AKADEMIE DER WISSENSCHAFTEN – GERMANY |

| Title: NOMAD Encyclopedia Service: allows users to see, compare, explore, and understand computed materials data |

| Market maturity: Tech Ready |

| Project: NoMaD |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| HUMBOLDT-UNIVERSITAET ZU BERLIN – GERMANY |

| Title: An ICT platform prototype for systematically tracing public sentiment, its evolution towards a policy, its components and arguments linked to them. |

| Market maturity: Tech Ready |

| Project: NOMAD |

| Innovation Topic: Smart & Sustainable Society |

| ATHENS TECHNOLOGY CENTER ANONYMI BIOMICHANIKI EMPORIKI KAI TECHNIKI ETAIREIA EFARMOGON YPSILIS TECHNOLOGIAS – GREECE |

| NATIONAL CENTER FOR SCIENTIFIC RESEARCH “DEMOKRITOS” – GREECE |

| CRITICAL PUBLICS LTD – UNITED KINGDOM |

| Title: Customer-Specific Performance Analysis for Parallel Codes |

| Market maturity: Exploring |

| Project: POP |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| UNIVERSITAET STUTTGART – GERMANY |

| Title: Repository of powerful tools (codes, pattern/best-practice descriptions, experimental results) for the HPC community |

| Market maturity: Exploring |

| Project: POP |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| TERATEC – FRANCE |

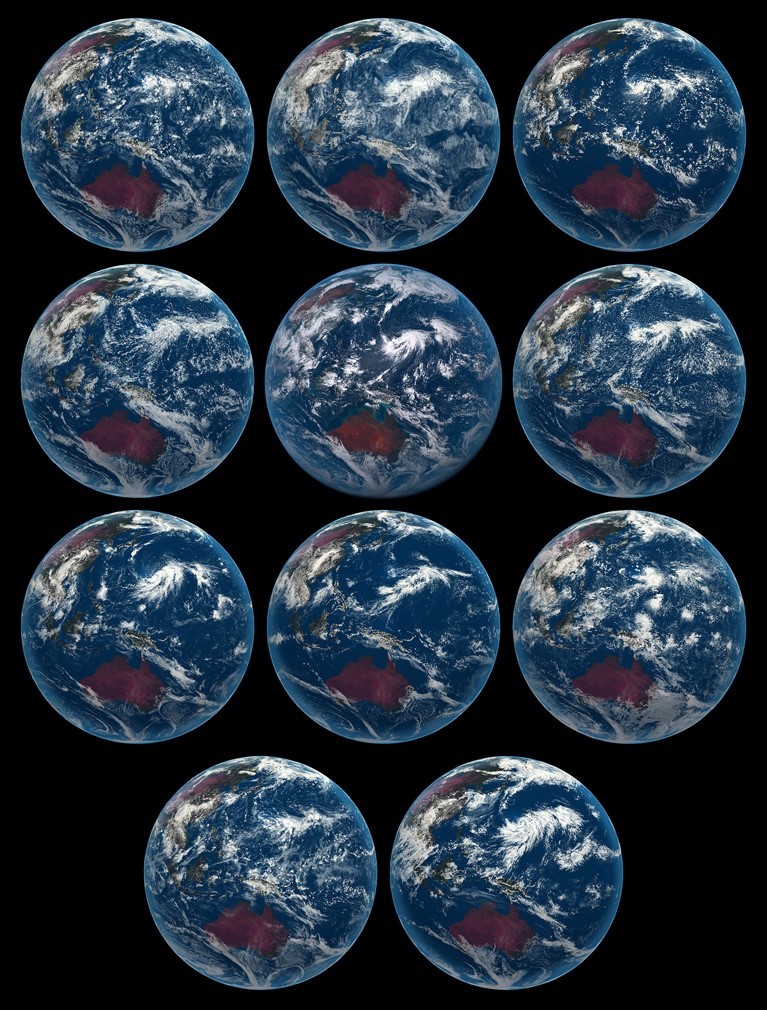

DYAMOND intercomparison project for storm-resolving global weather and climate models

Technologies

CDO, ParaView, Jupyter, server-side processing

Collaborating Institutions

DKRZ, MPI-M (and many others)

Optimization of Earth System Models on the path to the new generation of Exascale high-performance computing systems

Use Case Owner

Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS)

Mario Acosta: mario.acosta@bsc.es

Kim Serradell: kim.serradell@bsc.es

ESiWACE Newsletter 01/2021 published