POP and PerMed Centers of Excellence are Getting Cell-Level Simulations Ready for Exascale

Success story # Highlights:

- Industry sector: Computational biology

- Key codes used: PhysiCell

- Keywords:

  - Memory management

  - Cell-cell interaction simulation

  - OpenMP

  - Good practice

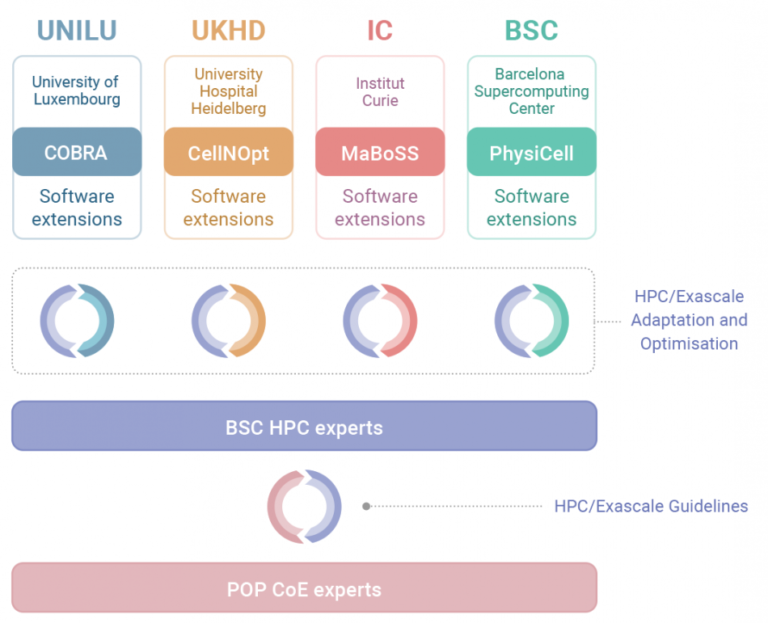

Organisations & Codes Involved:

Technical Challenge:

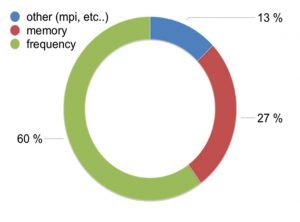

One of PerMedCoE’s use cases is the study of tumour evolution based on single-cell omics and imaging using PhysiCell. In order to simulate such large-scale problems that replicate real-world tumours, High Performance Computing (HPC) is essential. However, memory usage presents a challenge in HPC architectures and is one of the obstacles to optimizing simulations for running at Exascale.

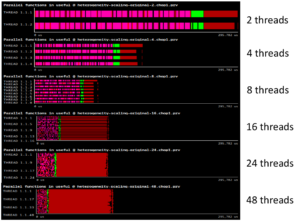

With a high number of threads computing in parallel, performance can be degraded because of concurrent memory allocations and deallocations. We observe that as the number of threads goes up, runtime is not reduced as expected. This is not a memory-bound problem, but a memory management one.

Solution:

Following the POP methodology and using the BSC tools (Extrae, Paraver, and BasicAnalysis), we determine that a specific implementation of overloaded C++ operators causes a high number of concurrent memory allocations and deallocations. This forces the memory management library to synchronize between threads and “steal” CPU cycles from the running application.

The proposal from POP is to avoid the overloading of operators that need allocation and deallocation of data structures. However, achieving this implies a major code change. To demonstrate the potential of the suggestion without doing the major code change, we suggest using an external library to improve the memory management: Jemalloc.
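The allocation pattern described above can be illustrated with a minimal sketch. This is not PhysiCell's actual code: the counter `g_allocations` and the functions `accumulate_allocating` and `accumulate_in_place` are hypothetical names introduced here, and both operators assume vectors of equal length. The sketch contrasts a binary `operator+`, which builds a new heap-backed result on every call, with an in-place `operator+=`, which reuses the storage of its left operand.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical counter of result vectors created by operator+.
// Illustration only: it is not thread-safe and not part of PhysiCell.
std::size_t g_allocations = 0;

// Allocating style: every call constructs a new vector for the result.
std::vector<double> operator+(const std::vector<double>& a,
                              const std::vector<double>& b) {
    std::vector<double> result(a.size());  // heap allocation on every call
    ++g_allocations;
    for (std::size_t i = 0; i < a.size(); ++i) {
        result[i] = a[i] + b[i];
    }
    return result;
}

// In-place style: the left operand's storage is reused, nothing is allocated.
std::vector<double>& operator+=(std::vector<double>& a,
                                const std::vector<double>& b) {
    for (std::size_t i = 0; i < a.size(); ++i) {
        a[i] += b[i];
    }
    return a;
}

// Adds `step` to a zero vector n times; one temporary is created per iteration.
std::vector<double> accumulate_allocating(const std::vector<double>& step, int n) {
    std::vector<double> total(step.size(), 0.0);
    for (int k = 0; k < n; ++k) {
        total = total + step;
    }
    return total;
}

// Same computation without temporaries inside the loop.
std::vector<double> accumulate_in_place(const std::vector<double>& step, int n) {
    std::vector<double> total(step.size(), 0.0);
    for (int k = 0; k < n; ++k) {
        total += step;
    }
    return total;
}
```

Both functions return the same values, but the first one requests one result vector per loop iteration. When many OpenMP threads run such loops at the same time, those requests reach the allocator concurrently, which is the situation the analysis above identifies.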

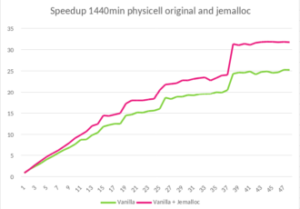

Jemalloc is “a general purpose malloc implementation that emphasizes fragmentation avoidance and scalable concurrency support” [1]. It can be integrated easily into any code by preloading the library, for example with LD_PRELOAD on Linux. After applying this solution to PhysiCell, we execute the same experiment and obtain a 1.45x speedup when using 48 OpenMP threads.

[1] Quoted from the Jemalloc website, http://jemalloc.net

Business impact:

As the only transversal CoE, one of POP’s objectives is to advise and help the other HPC CoEs prepare their codes for the Exascale. This collaboration between POP and PerMed is a good example of the potential of these kinds of partnerships between CoEs.

POP provides the performance analysis expertise and tools, the experience of hundreds of codes analysed, and the best practices gathered from those analyses. PerMedCoE brings state-of-the-art use cases and real-world problems to be solved. Together, they improve the performance and efficiency of cell-level agent-based simulation software: in this case PhysiCell.

One of the goals of PerMedCoE is the scaling-up of four different tools that address different types of simulations in personalized medicine and that were coded in different languages (C++, R, Python, Julia). These tools are being re-factored to scale up to Exascale. In this scaling-up, audits such as the ones POP can offer are essential to evaluate past developments and guide future ones.

Currently, only one of PerMed’s tools is able to use several nodes to run a single simulation, whereas the rest of the tools can only use all of the processors of a single node. Our scaling-up strategy is a heterogeneous one: we are implementing MPI in some tools, re-factoring others into languages, such as Julia, that ease the use of HPC clusters, and even targeting “many-task computing” paradigms, as in the case of model fitting.

The main motivation to have HPC versions of these simulation tools is to be able to simulate bigger, more complex cell-level agent-based models. Current models can reach up to 10^6 cells, but it has been shown that most of the problems addressed in computational biology (carcinogenesis, cell line growth, COVID-19 infection) usually involve from 10^9 to 10^12 cells. In addition, most of these current simulations consider an over-simplified environment that is nowhere close to a real-life scenario. We aim to have complex multi-scale simulation frameworks that target these bigger, more complex simulations.

Benefits:

- Speedup of 1.45x on runtime with 48 threads in PhysiCell execution

- A good practice for High Performance C++ applications exported

- This behaviour in memory management systems will be detected more easily in future analyses

Excellerat Success Story: Bringing industrial end-users to Exascale computing: An industrial level combustion design tool on 128K cores

CoE involved:

Success story # Highlights:

- Exascale

- Industry sector: Aerospace

- Key codes used: AVBP

Organisations & Codes Involved:

CERFACS is a theoretical and applied research centre, specialised in modelling and numerical simulation. Through its facilities and expertise in High Performance Computing (HPC), CERFACS deals with major scientific and technical research problems of public and industrial interest.

GENCI (Grand Equipement National du Calcul Intensif) is the French High-Performance Computing (HPC) National Agency in charge of promoting and acquiring HPC, Artificial Intelligence (AI) capabilities, and massive data storage resources.

Cellule de Veille technologique GENCI (CVT) is an interest group focused on technology watch in High Performance Computing (HPC), bringing together French public research organisations such as CEA and INRIA, among others. It offers first-time access to novel architectures and access to technical support for preparing codes for the near future of HPC.

AVBP is a parallel Computational Fluid Dynamics (CFD) code that solves the three-dimensional compressible Navier-Stokes equations on unstructured and hybrid grids. Its main area of application is the modelling of unsteady reacting flows in combustor configurations and turbomachines. The prediction of unsteady turbulent flows is based on the Large Eddy Simulation (LES) approach, which has emerged as a promising technique for problems associated with time-dependent phenomena and coherent eddy structures.

CHALLENGE:

Some physical processes, like soot formation, are so CPU-intensive and non-deterministic that their predictive modelling is out of reach today, limiting our insights to ad hoc correlations and preliminary assumptions. Moving these runs to Exascale level will allow simulations that are longer by orders of magnitude, achieving the statistical convergence required for a design tool.

Unlocking node-level and system-level performance is challenging at the code level and requires code porting, optimisation and algorithm refactoring on various architectures on the way to Exascale performance.

SOLUTION:

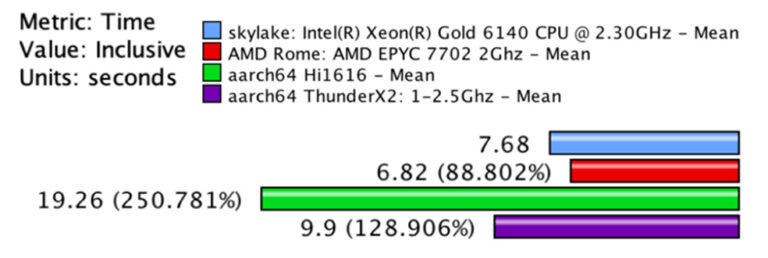

In order to prepare the AVBP code for architectures that were not available at the start of the EXCELLERAT project, CERFACS teamed up with the CVT from GENCI, Arm, AMD and the EPI project to port, benchmark and (when possible) optimise the AVBP code for Arm and AMD architectures. This collaboration ensures early access to these new architectures and first-hand information to prepare our codes for the widespread availability of systems equipped with these processors. The AVBP developers got access to the IRENE AMD system of PRACE at TGCC with support from AMD and Atos, which allowed them to characterise the application on this architecture and create a predictive model of how the different features of the processor (frequency, bandwidth) could affect the performance of the code. They were also able to port the code to several flavours of Arm processors, singling out compiler dependencies and identifying performance bottlenecks to be investigated before large systems become available in Europe.

Business impact:

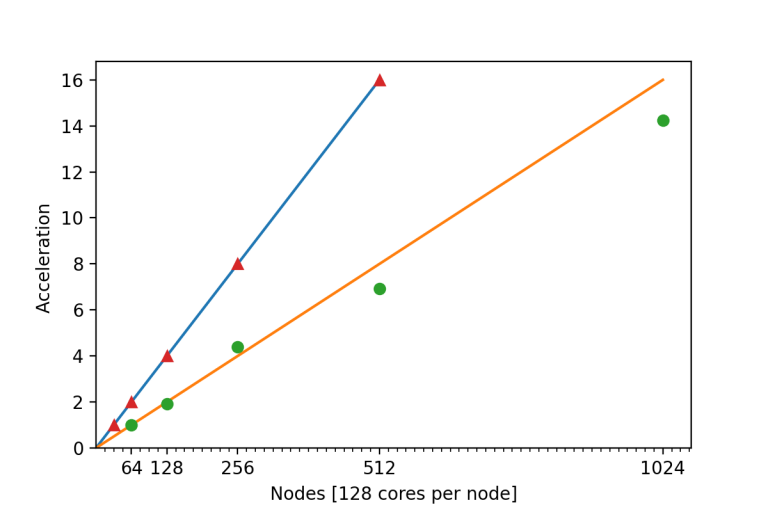

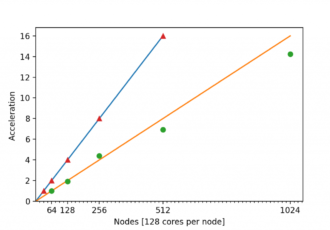

The AVBP code was ported and optimised for the TGCC/GENCI/PRACE system Joliot-CURIE ROME, with excellent strong and weak scaling performance up to 128,000 cores and 32,000 cores respectively. These optimisations directly impacted four PRACE projects on the same system in the following call for proposals.

Besides AMD processors, the EPI project and GENCI’s CVT, as well as EPCC (an EXCELLERAT partner), provided access to Arm-based clusters built on the Marvell ThunderX2 (Inti prototype hosted and operated by CEA) and Hi1616 (early silicon from Huawei) architectures respectively. This access provided important feedback on code portability using the Arm and gcc compilers, and on single-processor and strong scaling performance up to 3072 cores.

Results from this collaboration have been included on the Arm community blog [1]. A white paper on this collaboration is underway with GENCI and CORIA CNRS.

Benefits for further research:

- Code ready for the widespread availability of the Rome architecture

- Strong and weak scaling measurements up to 128,000 cores

- Initial optimisations for Arm architectures

Excellerat Success Story: Enabling High Performance Computing for Industry through a Data Exchange & Workflow Portal

CoE involved:

Success story # Highlights:

- Keywords:

  - Data Transfer

  - Data Management

  - Data Reduction

  - Automatisation, Simplification

  - Dynamic Load Balancing

  - Dynamic Mode Decomposition

  - Data Analytics

  - Combustor design

- Industry sector: Aeronautics

- Key codes used: Alya

Organisations & Codes Involved:

As an IT service provider, SSC-Services GmbH develops individual concepts for cooperation between companies and customer-oriented solutions for all aspects of digital transformation. Since 1998, the company, based in Böblingen (Germany), has been offering solutions for the technical connection and cooperation of large companies and their suppliers or development partners. The corporate roots lie in the management and exchange of data of all types and sizes.

RWTH Aachen University is the largest technical university in Germany. The Institute of Aerodynamics of RWTH Aachen University possesses extensive expertise in the simulation of turbulent flows, aeroacoustics and high-performance computing. For more than 18 years, large-eddy simulations with advanced analysis of the large-scale simulation data have been successfully performed for various applications.

Barcelona Supercomputing Center (BSC) is the national supercomputing centre in Spain. BSC specialises in High Performance Computing (HPC) and manages MareNostrum IV, one of the most powerful supercomputers in Europe. BSC is at the service of the international scientific community and of industry that requires HPC resources. The Computing Applications for Science and Engineering (CASE) Department from BSC is involved in this application providing the application case and the simulation code for this demonstrator.

The code used for this application is Alya, the high-performance computational mechanics code from BSC, designed to solve complex coupled multi-physics / multi-scale / multi-domain problems from the engineering realm. Alya was specially designed for massively parallel supercomputers, and the parallelization embraces four levels of the computer hierarchy. 1) A substructuring technique with MPI as the message passing library is used for distributed memory supercomputers. 2) At the node level, both loop and task parallelisms are considered using OpenMP as an alternative to MPI. Dynamic load balance techniques have been introduced as well to better exploit computational resources at the node level. 3) At the CPU level, some kernels are also designed to enable vectorization. 4) Finally, accelerators like GPUs are also exploited through OpenACC pragmas or with CUDA to further enhance the performance of the code on heterogeneous computers. Alya is one of only two CFD codes in the Unified European Applications Benchmark Suite (UEABS) as well as in the Accelerator benchmark suite of PRACE.

Technical Challenge:

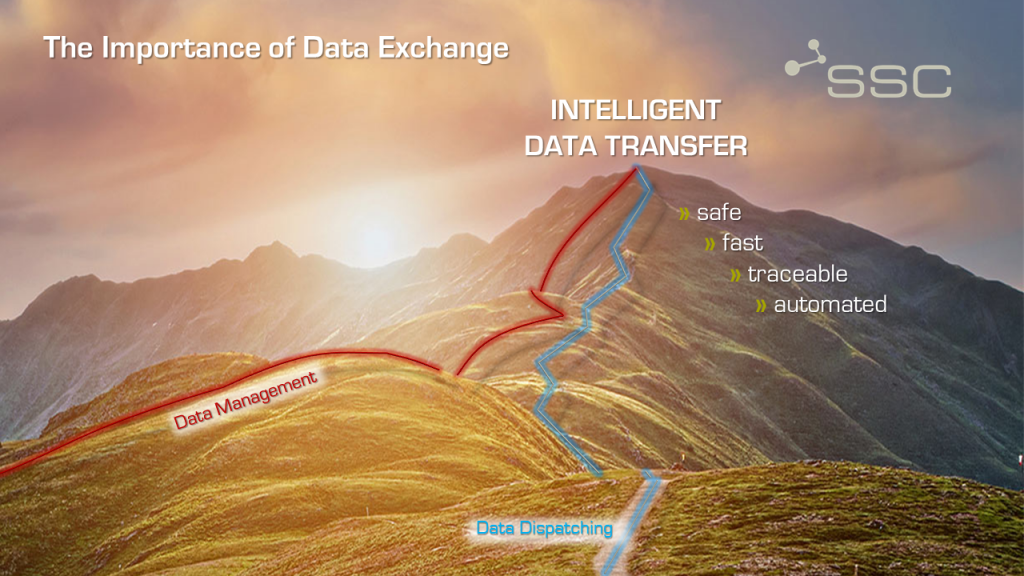

SSC is developing a secure data exchange and transfer platform as part of the EXCELLERAT project to facilitate the use of high-performance computing (HPC) for industry and to make data transfer more efficient. Today, organisations and smaller industry partners face various problems in dealing with HPC calculations, HPC in general, or even access to HPC resources. In many cases, the calculations are complex and the potential users do not have the necessary expertise to fully exploit HPC technologies without support. The developed data platform will be able to simplify or, at best, eliminate these obstacles.

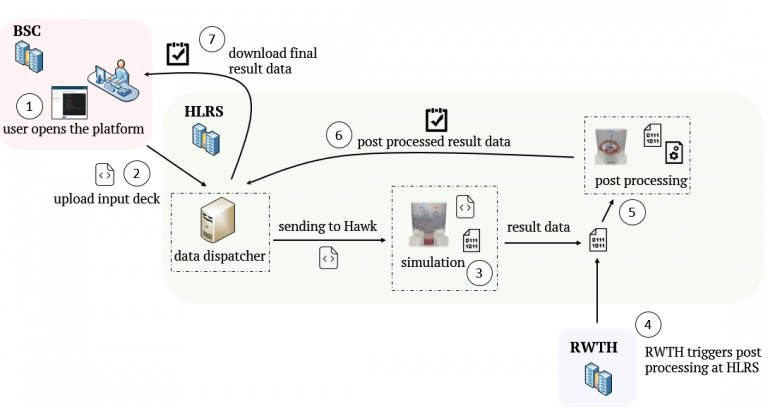

The data roundtrip starts with a user at the Barcelona Supercomputing Center who wants to run a simulation with Alya. The user uploads the corresponding files through the data exchange and workflow platform and selects Hawk at HLRS as the simulation target. After the simulation has run, RWTH Aachen starts the post-processing at HLRS. Finally, the user downloads the post-processed data through the platform.

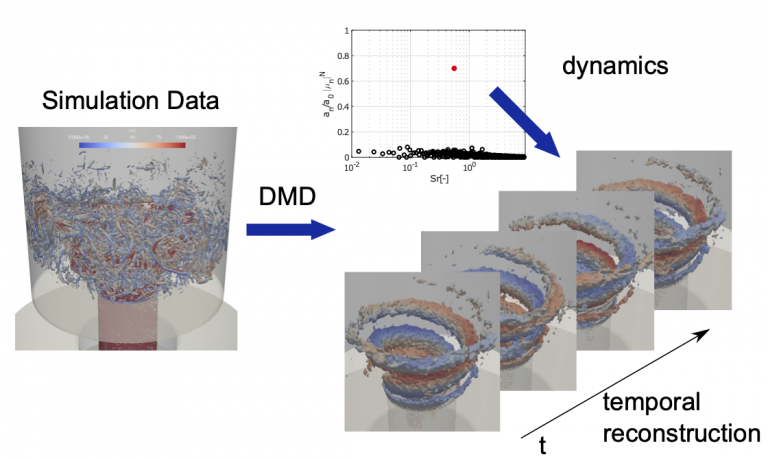

With the increasing availability of computational resources, high-resolution numerical simulations have become an indispensable tool in fundamental academic research as well as engineering product design. A key aspect of the engineering workflow is the reliable and efficient analysis of the rapidly growing high-fidelity flow field data. RWTH develops a modal decomposition toolkit to extract the energetically and dynamically important features, or modes, from the high-dimensional simulation data generated by the code Alya. These modes enable a physical interpretation of the flow in terms of spatio-temporal coherent structures, which are responsible for the bulk of energy and momentum transfer in the flow. Modal decomposition techniques can be used not only for diagnostic purposes, i.e. to extract dominant coherent structures, but also to create a reduced dynamic model with only a small number of degrees of freedom that approximates and anticipates the flow field. The modal decomposition will be executed on the same architecture as the main simulation. Besides providing better physical insights, this will reduce the amount of data that needs to be transferred back to the user.

Scientific Challenge:

Highly accurate, turbulence scale resolving simulations, i.e. large eddy simulations and direct numerical simulations, have become indispensable for scientific and industrial applications. Due to the multi-scale character of the flow field with locally mixed periodic and stochastic flow features, the identification of coherent flow phenomena leading to an excitation of, e.g., structural modes is not straightforward. A sophisticated approach to detect dynamic phenomena in the simulation data is a reduced-order analysis based on dynamic mode decomposition (DMD) or proper orthogonal decomposition (POD).
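For reference, the standard (exact) DMD formulation from the literature can be summarised as follows. This is a textbook sketch and not necessarily the variant implemented in the toolkit described here; the symbols $x_k$, $\Delta t$, $r$, $L$ and $U_\mathrm{ref}$ are notation introduced for this summary. Given $m$ snapshots $x_1, \dots, x_m$ sampled at a uniform time step $\Delta t$, DMD seeks a linear operator $A$ that best maps each snapshot to the next:

$$
X = [\,x_1 \; x_2 \; \cdots \; x_{m-1}\,], \qquad X' = [\,x_2 \; x_3 \; \cdots \; x_m\,], \qquad X' \approx A X
$$

With a rank-$r$ singular value decomposition of $X$, the operator is projected onto the leading $r$ POD modes and diagonalised, which yields the DMD eigenvalues $\Lambda = \mathrm{diag}(\lambda_j)$ and modes $\Phi$:

$$
X \approx U_r \Sigma_r V_r^{*}, \qquad \tilde{A} = U_r^{*} X' V_r \Sigma_r^{-1}, \qquad \tilde{A} W = W \Lambda, \qquad \Phi = X' V_r \Sigma_r^{-1} W
$$

Each eigenvalue gives the frequency and growth rate of its mode, and the frequency can be made dimensionless as a Strouhal number with a reference length $L$ and a reference velocity $U_\mathrm{ref}$ (which quantities are used as references is an assumption to be fixed per case):

$$
f_j = \frac{\arg(\lambda_j)}{2\pi\,\Delta t}, \qquad \sigma_j = \frac{\ln\lvert\lambda_j\rvert}{\Delta t}, \qquad \mathrm{Sr}_j = \frac{f_j\, L}{U_\mathrm{ref}}
$$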

In this collaborative framework, DMD is used to study unsteady effects and flow dynamics of a swirl-stabilised combustor from high-fidelity large-eddy simulations. The burner consists of plenum, fuel injection, mixing tube and combustion chamber. Air is distributed from the plenum into a radial swirler and an axial jet through a hollow cone. Fuel is injected into a plenum inside the burner through two ports that contain 16 injection holes of 1.6 mm diameter located on the annular ring between the cone and the swirler inlets. The fuel injected from the small holes at high velocity is mixed with the swirled air and the axial jet along a mixing tube of 60 mm length with a diameter of D = 34 mm. At the end of the mixing tube, the mixture expands over a step change with a diameter ratio of 3.1 into a cylindrical combustion chamber. The burner operates at Reynolds number Re = 75,000 with pre-heated air at T_air = 453 K and hydrogen supplied at T_H2 = 320 K. The numerical simulations have been conducted on a hybrid unstructured mesh including prisms, tetrahedrons and pyramids, locally refined in the regions of interest, with a total of 63 million cells.

SOLUTION:

The developed Data Exchange & Workflow Portal will be able to simplify or even eliminate these obstacles. First activities have already started. The new platform enables users to easily access the two HLRS clusters, Hawk and Vulcan, from any authorised device and to run their simulations remotely. The portal provides relevant HPC processes for the end users, such as uploading input decks, scheduling workflows, or running HPC jobs.

In order to be able to perform data analytics, i.e. modal decomposition, of the large amounts of data that arise from Exascale simulations, a modal decomposition toolkit has been developed. An efficient and scalable parallelisation concept based on MPI and LAPACK/ScaLAPACK has been used to perform modal decompositions in parallel on large data volumes. Since DMD and POD are data-driven decomposition techniques, the time-resolved data has to be read for the whole time interval to be analysed. To handle the large amount of input and output, the software tool has been optimised to read and write the time-resolved snapshot data in parallel in both time and space. Since different solution data formats are utilised by the computational fluid dynamics community, a flexible modular interface has been developed to easily add data formats of other simulation codes.

The flow field of the investigated combustor exhibits a self-excited flow oscillation known as a precessing vortex core (PVC) at a dimensionless Strouhal Number of Sr=0.55, which can lead to inefficient fuel consumption, high level of noise and eventually combustion hardware damage. To analyse the dynamics of the PVC, DMD is used to extract the large-scale coherent motion from the turbulent flow field characterised by a manifold of different spatial and temporal scales shown in Figure 2 (left). The instantaneous flow field of the turbulent flame is visualised by an iso-surface of the Q-criterion coloured by the absolute velocity. The DMD analysis is performed on the three-dimensional velocity and pressure field using 2000 snapshots. The resulting spectrum of the DMD, showing the amplitude of each mode as a function of the dimensionless frequency is given in Figure 2 (top). One dominant mode, marked by a red dot, at Sr=0.55 matching the dimensionless frequency of the PVC is clearly visible. The temporal reconstruction of the extracted DMD mode showing the extracted PVC vortex is illustrated in Figure 2 (right). It shows the iso-surface of the Q-criterion coloured by the radial velocity.

Scientific impact of this result:

The Data Exchange & Workflow Portal is a mature solution that gives end users seamless and secure access to high-performance computing resources. Its innovation lies in combining know-how about secure data exchange with an HPC platform; this combination of know-how provision and secure data exchange between HPC and SMEs is unique. In particular, the web interface is very easy to use and many tasks are automated, which simplifies the whole HPC environment and makes entry easier for HPC engineers.

In addition, the data reduction technology ensures a more intelligent, faster transfer of files. Transfer speed increases considerably when the same data sets are transferred over and over: if a file is already known to the system, there is no need to transfer it again, and only the changed parts need to be exchanged.
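One common way to exchange only the changed parts is to split a file into chunks and send only those chunks whose fingerprint the receiving side has not seen before. The sketch below illustrates that idea under stated assumptions; it is not the portal's actual implementation. The function name `chunks_to_transfer` is hypothetical, chunks have a fixed size, and `std::hash` stands in for the cryptographic hash that a real system would need, since `std::hash` gives no protection against collisions.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <unordered_set>
#include <vector>

// Splits `data` into fixed-size chunks and returns the indices of the chunks
// whose hash is not yet in `known_hashes`, i.e. the only chunks to be sent.
// Hashes of newly seen chunks are added to `known_hashes`.
std::vector<std::size_t> chunks_to_transfer(
        const std::string& data,
        std::size_t chunk_size,
        std::unordered_set<std::size_t>& known_hashes) {
    std::vector<std::size_t> to_send;
    if (chunk_size == 0) {
        return to_send;  // nothing sensible to do with empty chunks
    }
    const std::hash<std::string> hasher;
    std::size_t index = 0;
    for (std::size_t offset = 0; offset < data.size(); offset += chunk_size) {
        const std::string chunk = data.substr(offset, chunk_size);
        // insert() reports whether the hash was new to the set.
        if (known_hashes.insert(hasher(chunk)).second) {
            to_send.push_back(index);
        }
        ++index;
    }
    return to_send;
}
```

With an initially empty set of known hashes, a first upload sends every distinct chunk. Uploading a modified version of the same data afterwards sends only the chunks that differ, and uploading an identical copy sends nothing.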

The developed data analytics, i.e. modal decomposition, toolkit provides an efficient and user-friendly way to analyse simulation data and extract energetically and dynamically important features, or modes, from complex, high-dimensional flows. To address a broad user community having different backgrounds and expertise in HPC applications, a server/client structure has been implemented allowing an efficient workflow. Using this structure, the actual modal decomposition is done on the server running in parallel on the HPC cluster which is connected via TCP with the graphical user interface (client) running on the local machine. To efficiently visualise the extracted modes and reconstructed flow fields without writing large amounts of data to disk, the modal decomposition server can be connected to a ParaView server/client configuration via Catalyst enabling in-situ visualisation.

Finally, this demonstrator shows an integrated HPC-based solution that can be used for burner design and optimisation using high-fidelity simulations and data analytics through an interactive workflow portal with an efficient data exchange and data transfer strategy.

Benefits for further research:

- Higher HPC customer retention due to less complex HPC environment

- Reduction of HPC complexity due to web frontend

- Shorter training phases for inexperienced users and reduced support effort for HPC centres

- Calculations can be started from anywhere with a secure connection

- Time and cost savings due to a high degree of automation that streamlines the process chain

- Efficient and user-friendly post-processing / data analytics

Submit your proposal to the second FF4EuroHPC Open Call to benefit from the use of advanced HPC services

The FF4EuroHPC project started at the beginning of September 2020. As part of the EuroHPC Joint Undertaking, under the auspices of the European Commission’s Horizon 2020 programme, a three-year grant of EUR 9.9 million has been awarded to start a new European consortium, called FF4EuroHPC.

The key concept behind FF4EuroHPC is to demonstrate to European SMEs ways to optimize their performance with the use of advanced HPC (High Performance Computing) services (e.g., modelling & simulation, data analytics, machine-learning and AI, and possibly combinations thereof) and thereby take advantage of these innovative ICT solutions for business benefit.

Two open calls are being organised by the project, with the aim of creating two tranches of application experiments within diverse industry sectors. The first Open Call was successful; 68 high-quality submissions involving 202 partners from 25 European countries (plus Canada) were received, and 16 experiments were selected for funding in an evaluation process involving 37 external reviewers.

The open call will target experiments of the highest quality, involving innovative, agile SMEs with work plans built around innovation objectives arising from the use of advanced HPC services. Experiments are at the heart of the project and will be the main outputs of FF4EuroHPC, which is a successor of the Fortissimo and Fortissimo2 projects.

Proposals are sought that address business challenges from European SMEs covering varied application domains, preference being given to engineering and manufacturing, or sectors able to demonstrate fast economic growth or particular economic impact for Europe. Priority will be given to consortia centred on SMEs that are new to the use of advanced HPC services.

The second Open Call opened on 22nd June 2021 and will close on 29th September 2021. The indicative total funding budget is EUR 5 million.

>> More information is provided on the project webpage www.ff4eurohpc.eu

CoEs at Teratec Forum 2021 and ISC21

With the support of FocusCoE, a number of HPC CoEs will give short presentations at the virtual PRACE booth at the following two HPC-related events, which will take place towards the end of this month: Teratec Forum 2021 and ISC2021. See the schedule below for more details. Please reserve the slots in your calendars; registration details will be provided on the PRACE website soon!

“We are happy to see that FocusCoE was able to help the HPC CoEs to have a significant presence at this year’s editions of ISC and Teratec Forum, two major HPC events, enabled through our good synergies with PRACE”, says Guy Lonsdale, FocusCoE coordinator.

Teratec Forum 2021 schedule

| Date / Event | Time slot (CEST) | Title | Speaker | Organisation |
| --- | --- | --- | --- | --- |
| Tue 22 June | 11:00 – 11:15 | EoCoE-II: Towards exascale for Energy | Edouard Audit, EoCoE-II coordinator | CEA (France) |
| | 14:30 – 14:45 | POP CoE: Free Performance Assessments for the HPC Community | Bernd Mohr | Jülich Supercomputing Centre |
| Thu 24 June | 13:45 – 14:00 | EXCELLERAT – paving the way for the evolution towards Exascale | Amgad Dessoky / Sophia Honisch | HLRS |

ISC 2021 schedule

| Date / Event | Time slot (CEST) | Title | Speaker | Organisation |
| --- | --- | --- | --- | --- |
| Thu 24 June | 13:45 – 14:00 | EXCELLERAT – paving the way for the evolution towards Exascale | Amgad Dessoky / Sophia Honisch | HLRS |
| Fri 25 June | 11:00 – 11:15 | The Center of Excellence for Exascale in Solid Earth (ChEESE) | Alice-Agnes Gabriel | Geophysik, University of Munich |
| | 15:30 – 15:45 | EoCoE-II: Towards exascale for Energy | Edouard Audit, EoCoE-II coordinator | CEA (France) |
| Tue 29 June | 11:00 – 11:15 | Towards a maximum utilization of synergies of HPC Competences in Europe | Bastian Koller, HLRS | HLRS |
| Wed 30 June | 10:45 – 11:00 | CoE | Dr.-Ing. Andreas Lintermann | Jülich Supercomputing Centre, Forschungszentrum Jülich GmbH |
| Thu 1 July | 11:00 – 11:15 | POP CoE: Free Performance Assessments for the HPC Community | Bernd Mohr | Jülich Supercomputing Centre |
| | 14:30 – 14:45 | TREX: an innovative view of HPC usage applied to Quantum Monte Carlo simulations | Anthony Scemama (1), William Jalby (2), Cedric Valensi (2), Pablo de Oliveira Castro (2) | (1) Laboratoire de Chimie et Physique Quantiques, CNRS-Université Paul Sabatier, Toulouse, France (2) Université de Versailles St-Quentin-en-Yvelines, Université Paris Saclay, France |

Please register for the short presentations through the PRACE event pages:

| PRACE Virtual booth at Teratec Forum 2021 | PRACE Virtual booth at ISC2021 |
| --- | --- |
| prace-ri.eu/event/teratec-forum-2021/ | prace-ri.eu/event/praceisc-2021/ |

GPU Hackathons in Europe 2021

Many of the recently installed as well as the upcoming supercomputers will be GPU-accelerated.

Throughout this year there will be multiple GPU Hackathons taking place in Europe, many of them organized by institutions that are also involved as partners in one or more European Centres of Excellence for High-Performance Computing. For the time being, all of the Hackathons will be virtual:

- Helmholtz GPU Hackathon 2021 in March

- EPCC GPU Hackathon 2021 in April

- IDRIS GPU Hackathon 2021 in May

- CINECA GPU Hackathon in June

- CSCS GPU Hackathon 2021 in September

- ENCCS GPU Hackathon 2021 in December

The hackathons are four-day, intensive, hands-on events designed to help computational scientists port their applications to GPUs using libraries, OpenACC, CUDA and other tools by pairing participants with dedicated mentors experienced in GPU programming and development.

Representing distinguished scholars and pre-eminent institutions around the world, these teams of mentors and attendees work together to realize performance gains and speed-ups by taking advantage of parallel programming on GPUs.

Applications can be submitted at www.gpuhackathons.org.

Latest CoE News

EoCoE: Performance Portability on HPC Accelerator Architectures with Modern Techniques: The ParFlow Blueprint

Rapidly changing heterogeneous supercomputer architectures pose a great challenge to many scientific

FocusCoE organizes two workshops at EHPCSW21

With the support of the FocusCoE project, a number of the HPC CoE participated in the first online edition of the EuroHPC Summit Week 2021 (EHPCSW21) in the context of

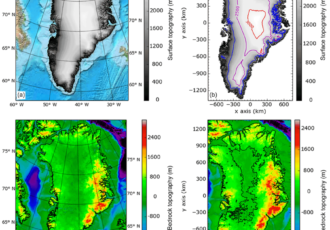

ESiWACE: OBLIMAP ice sheet model coupler parallelization and optimization

The goal of this use case is to reduce the memory footprint of the code by improving its distribution over parallel tasks, and to resolve the I/O bottleneck by implementing parallel reading and writing.

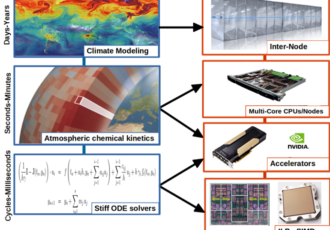

ESiWACE: GPU Optimizations for Atmospheric Chemical Kinetics

The goal of this use case is to reduce the memory footprint of the EMAC code in the GPU device, thereby allowing more MPI tasks to be run concurrently on the same hardware.

List of Innovations by CoEs spotted by Innovation Radar

A list of innovations by the HPC Centres of Excellence, as spotted by the EU innovation radar

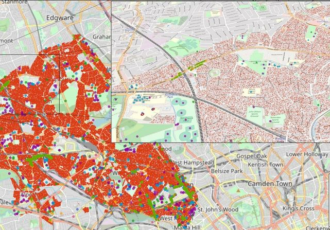

HiDALGO: Assisting decision makers to solve Global Challenges with HPC applications – Covid-19 modelling

The success story describes how HiDALGO supports the UK National Health Service with tools to detect, predict and even prevent the virus spread behaviour in the current pandemic situation.

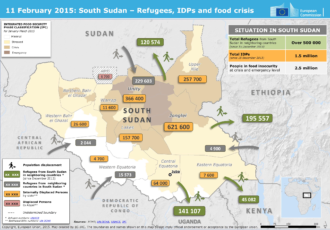

HiDALGO: Assisting decision makers to solve Global Challenges with HPC applications – Migration issues

The success story shows how HiDALGO supports NGOs with providing more accurate estimations on people flows and even destinations to send the appropriate amount of help to the right place.

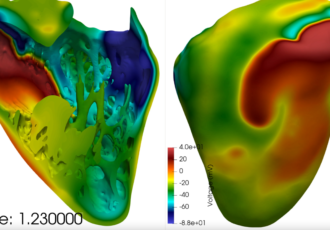

CompBioMed: In silico trials for effects of COVID-19 drugs on heart

The use case assesses the impact of certain COVID-19 drugs on a human heart population

Four HPC CoEs present at the digital Forum Teratec 2020

09 October, 2020

Four HPC CoEs (ChEESE, EoCoE, ESiWACE, POP) will be present at the first digital edition of the Forum Teratec 2020 to present their results in a two-day virtual exhibition on October 13-14. Do not miss the opportunity to have a look at their virtual booths!

Due to the Covid-19 pandemic, Forum Teratec 2020 goes virtual and offers all HPC-related initiatives the possibility to present their results online. ChEESE, EoCoE, ESiWACE and POP will also show their results via the internet.

The Teratec Forum 2020 brings together the best international experts in HPC, Simulation and Big Data for its next edition taking place on 13-14 October 2020. During these two days, a virtual exhibition environment will bring together a large number of technology companies presenting their latest innovations in the fields of Simulation, HPC, Big Data and AI. The digital platform will allow exhibitors and participants to share B2B meeting plans as well as product and service offers presented thanks to powerful communication tools.