Excellerat Success Story: Enabling High Performance Computing for Industry through a Data Exchange & Workflow Portal

CoE involved:

Success story highlights:

- Keywords:
  - Data Transfer
  - Data Management
  - Data Reduction
  - Automatisation, Simplification
  - Dynamic Load Balancing
  - Dynamic Mode Decomposition
  - Data Analytics
  - Combustor Design

- Industry sector: Aeronautics

- Key codes used: Alya

Organisations & Codes Involved:

As an IT service provider, SSC-Services GmbH develops individual concepts for cooperation between companies and customer-oriented solutions for all aspects of digital transformation. Since 1998, the company, based in Böblingen (Germany), has been offering solutions for the technical connection and cooperation of large companies and their suppliers or development partners. The corporate roots lie in the management and exchange of data of all types and sizes.

RWTH Aachen University is the largest technical university in Germany. The Institute of Aerodynamics of RWTH Aachen University has extensive expertise in the simulation of turbulent flows, aeroacoustics and high-performance computing. For more than 18 years, it has successfully performed large-eddy simulations with advanced analysis of the large-scale simulation data for various applications.

Barcelona Supercomputing Center (BSC) is the national supercomputing centre in Spain. BSC specialises in High Performance Computing (HPC) and manages MareNostrum IV, one of the most powerful supercomputers in Europe. BSC is at the service of the international scientific community and of industry that requires HPC resources. The Computing Applications for Science and Engineering (CASE) Department of BSC contributes the application case and the simulation code for this demonstrator.

The code used for this application is Alya, the high-performance computational mechanics code from BSC, designed to solve complex coupled multi-physics, multi-scale and multi-domain problems from the engineering realm. Alya was designed specifically for massively parallel supercomputers, and its parallelisation spans four levels of the computer hierarchy. 1) A substructuring technique with MPI as the message-passing library is used for distributed-memory supercomputers. 2) At the node level, both loop and task parallelism are supported using OpenMP as an alternative to MPI. Dynamic load balancing techniques have also been introduced to better exploit computational resources at the node level. 3) At the CPU level, some kernels are designed to enable vectorisation. 4) Finally, accelerators such as GPUs are exploited through OpenACC pragmas or CUDA to further enhance the performance of the code on heterogeneous computers. Alya is one of only two CFD codes in the Unified European Applications Benchmark Suite (UEABS) and is also part of the accelerator benchmark suite of PRACE.

Technical Challenge:

SSC is developing a secure data exchange and transfer platform as part of the EXCELLERAT project to facilitate the use of high-performance computing (HPC) for industry and to make data transfer more efficient. Today, organisations and smaller industry partners face various problems in dealing with HPC calculations, HPC in general, or even access to HPC resources. In many cases, the calculations are complex and the potential users do not have the necessary expertise to fully exploit HPC technologies without support. The developed data platform will be able to simplify or, at best, eliminate these obstacles.

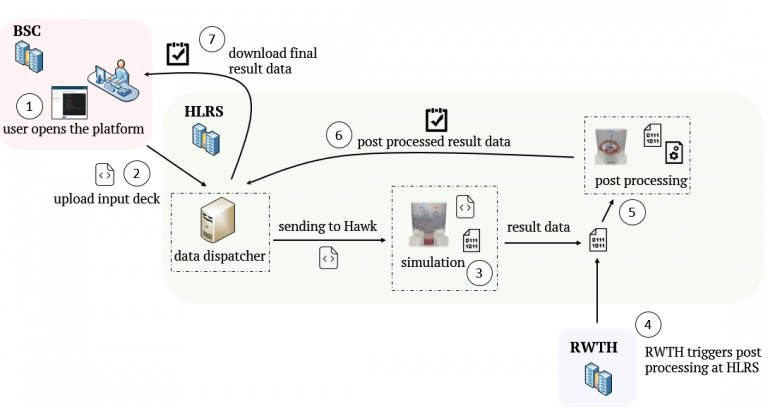

The data roundtrip starts with a user at the Barcelona Supercomputing Center who wants to run a simulation from a set of Alya files. The user uploads the corresponding files through the data exchange and workflow platform and selects Hawk at HLRS as the simulation target. Once the simulation has finished, RWTH Aachen starts the post-processing at HLRS. Finally, the user downloads the post-processed data through the platform.

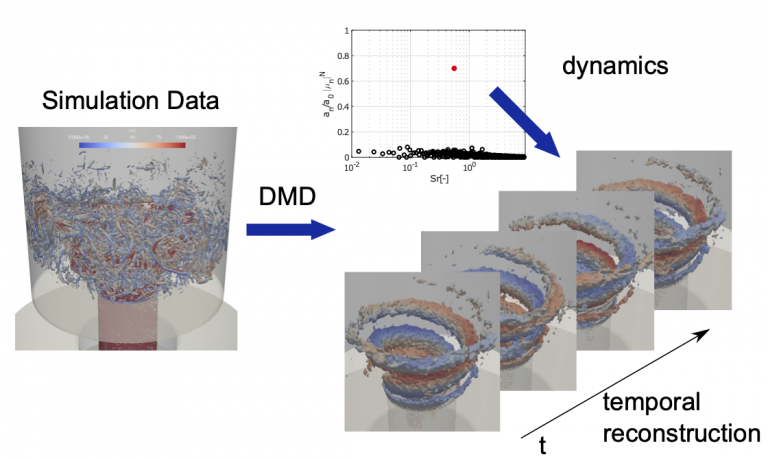

With the increasing availability of computational resources, high-resolution numerical simulations have become an indispensable tool in fundamental academic research as well as engineering product design. A key aspect of the engineering workflow is the reliable and efficient analysis of the rapidly growing high-fidelity flow field data. RWTH develops a modal decomposition toolkit to extract the energetically and dynamically important features, or modes, from the high-dimensional simulation data generated by the code Alya. These modes enable a physical interpretation of the flow in terms of spatio-temporal coherent structures, which are responsible for the bulk of energy and momentum transfer in the flow. Modal decomposition techniques can be used not only for diagnostic purposes, i.e. to extract dominant coherent structures, but also to create a reduced dynamic model with only a small number of degrees of freedom that approximates and anticipates the flow field. The modal decomposition will be executed on the same architecture as the main simulation. Besides providing better physical insights, this will reduce the amount of data that needs to be transferred back to the user.

Scientific Challenge:

Highly accurate, turbulence scale resolving simulations, i.e. large eddy simulations and direct numerical simulations, have become indispensable for scientific and industrial applications. Due to the multi-scale character of the flow field with locally mixed periodic and stochastic flow features, the identification of coherent flow phenomena leading to an excitation of, e.g., structural modes is not straightforward. A sophisticated approach to detect dynamic phenomena in the simulation data is a reduced-order analysis based on dynamic mode decomposition (DMD) or proper orthogonal decomposition (POD).
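For orientation, the textbook formulation of DMD can be summarised as follows. This is a generic sketch, assuming snapshots $x_1, \dots, x_m$ sampled at a constant interval $\Delta t$; the symbols are introduced here for illustration only, and the source does not state which DMD variant is used in practice.

$$
X = [\,x_1, \dots, x_{m-1}\,], \qquad Y = [\,x_2, \dots, x_m\,], \qquad A = Y X^{+}, \qquad A \phi_j = \lambda_j \phi_j
$$

$$
f_j = \frac{\arg(\lambda_j)}{2 \pi \Delta t}, \qquad \sigma_j = \frac{\ln \lvert \lambda_j \rvert}{\Delta t}
$$

Here $X^{+}$ is the pseudo-inverse of $X$, $\phi_j$ are the DMD modes, and each eigenvalue $\lambda_j$ yields a frequency $f_j$ and a growth rate $\sigma_j$. POD, by contrast, is obtained from the singular value decomposition of the snapshot matrix and ranks modes by energy content rather than by frequency.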

In this collaborative framework, DMD is used to study unsteady effects and flow dynamics of a swirl-stabilised combustor from high-fidelity large-eddy simulations. The burner consists of a plenum, fuel injection, a mixing tube and a combustion chamber. Air is distributed from the plenum into a radial swirler and an axial jet through a hollow cone. Fuel is injected into a plenum inside the burner through two ports that contain 16 injection holes of 1.6 mm diameter located on the annular ring between the cone and the swirler inlets. The fuel injected from the small holes at high velocity is mixed with the swirled air and the axial jet along a mixing tube of 60 mm length with a diameter of D = 34 mm. At the end of the mixing tube, the mixture expands over a step change with a diameter ratio of 3.1 into a cylindrical combustion chamber. The burner operates at a Reynolds number of Re = 75,000 with pre-heated air at T_air = 453 K and hydrogen supplied at T_H2 = 320 K. The numerical simulations have been conducted on a hybrid unstructured mesh consisting of prisms, tetrahedra and pyramids, locally refined in the regions of interest, with a total of 63 million cells.

SOLUTION:

The developed Data Exchange & Workflow Portal will be able to simplify or even eliminate the obstacles described above. First activities have already started. The new platform enables users to easily access the two HLRS clusters, Hawk and Vulcan, from any authorised device and to run their simulations remotely. The portal provides the relevant HPC processes to end users, such as uploading input decks, scheduling workflows, or running HPC jobs.

To perform data analytics, i.e. modal decomposition, on the large amounts of data that arise from exascale simulations, a modal decomposition toolkit has been developed. An efficient and scalable parallelisation concept based on MPI and LAPACK/ScaLAPACK is used to perform modal decompositions in parallel on large data volumes. Since DMD and POD are data-driven decomposition techniques, the time-resolved data has to be read for the whole time interval to be analysed. To handle the large amount of input and output, the software tool has been optimised to read and write the time-resolved snapshot data efficiently in parallel in both time and space. Since the computational fluid dynamics community uses different solution data formats, a flexible modular interface has been developed so that data formats of other simulation codes can easily be added.
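To illustrate what reading snapshot data in parallel in time and space can look like, the following minimal sketch maps each process of a hypothetical (time x space) process grid to the block of snapshots and the block of grid points it would read. The function names, the contiguous block layout and the 4 x 8 grid are assumptions made for this example; the source does not describe the toolkit's actual partitioning scheme.

```python
def block_range(n_items, n_parts, part):
    """Half-open range [start, stop) of block `part` when `n_items`
    are split into `n_parts` contiguous, nearly equal blocks."""
    base, extra = divmod(n_items, n_parts)
    start = part * base + min(part, extra)
    stop = start + base + (1 if part < extra else 0)
    return start, stop


def read_plan(rank, n_time_groups, n_space_groups, n_snapshots, n_points):
    """Map a rank to the (snapshot range, point range) it reads.

    Ranks are laid out row-major on a hypothetical
    n_time_groups x n_space_groups process grid.
    """
    if not 0 <= rank < n_time_groups * n_space_groups:
        raise ValueError("rank lies outside the process grid")
    time_index, space_index = divmod(rank, n_space_groups)
    return (
        block_range(n_snapshots, n_time_groups, time_index),
        block_range(n_points, n_space_groups, space_index),
    )


if __name__ == "__main__":
    # Illustrative sizes: 2000 snapshots and 63 million cells, as quoted
    # for the combustor case; the 4 x 8 process grid is an assumption.
    for rank in (0, 31):
        print(rank, read_plan(rank, 4, 8, 2000, 63_000_000))
```

With such a layout, every process reads a distinct slab of the space-time data set, so the input is spread over all processes instead of being funnelled through a single reader.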

The flow field of the investigated combustor exhibits a self-excited flow oscillation known as a precessing vortex core (PVC) at a Strouhal number of Sr = 0.55, which can lead to inefficient fuel consumption, high noise levels and eventually damage to the combustion hardware. To analyse the dynamics of the PVC, DMD is used to extract the large-scale coherent motion from the turbulent flow field, which is characterised by a manifold of different spatial and temporal scales, as shown in Figure 2 (left). The instantaneous flow field of the turbulent flame is visualised by an iso-surface of the Q-criterion coloured by the absolute velocity. The DMD analysis is performed on the three-dimensional velocity and pressure fields using 2000 snapshots. The resulting DMD spectrum, showing the amplitude of each mode as a function of the dimensionless frequency, is given in Figure 2 (top). One dominant mode at Sr = 0.55, marked by a red dot and matching the dimensionless frequency of the PVC, is clearly visible. The temporal reconstruction of this DMD mode, showing the extracted PVC vortex, is illustrated in Figure 2 (right). It shows the iso-surface of the Q-criterion coloured by the radial velocity.
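The way DMD recovers a dominant frequency from snapshots can be shown on a toy problem. The sketch below is not the toolkit's implementation: it assumes a synthetic two-component signal oscillating at a dimensionless frequency of 0.55 (chosen to echo the PVC value, not taken from the simulation data), fits the linear operator by least squares, and reads frequency and growth rate from its eigenvalues. All names are hypothetical. A production code would instead use an SVD-based, rank-truncated formulation on distributed data.

```python
import cmath
import math


def toy_dmd(snapshots, dt):
    """Fit x[k+1] ~ A x[k] for two-component snapshots by least squares
    and return (frequencies, growth_rates) from the eigenvalues of A."""
    X, Y = snapshots[:-1], snapshots[1:]
    pairs = list(zip(X, Y))
    # G = X X^T and C = Y X^T, accumulated entry by entry (both 2x2).
    g11 = sum(x[0] * x[0] for x in X)
    g12 = sum(x[0] * x[1] for x in X)
    g22 = sum(x[1] * x[1] for x in X)
    c11 = sum(y[0] * x[0] for x, y in pairs)
    c12 = sum(y[0] * x[1] for x, y in pairs)
    c21 = sum(y[1] * x[0] for x, y in pairs)
    c22 = sum(y[1] * x[1] for x, y in pairs)
    det_g = g11 * g22 - g12 * g12
    # A = C G^{-1}, using the closed-form inverse of a symmetric 2x2 matrix.
    a11 = (c11 * g22 - c12 * g12) / det_g
    a12 = (c12 * g11 - c11 * g12) / det_g
    a21 = (c21 * g22 - c22 * g12) / det_g
    a22 = (c22 * g11 - c21 * g12) / det_g
    # Eigenvalues of a 2x2 matrix from its trace and determinant.
    trace = a11 + a22
    det_a = a11 * a22 - a12 * a21
    root = cmath.sqrt(trace * trace - 4.0 * det_a)
    eigenvalues = [(trace + root) / 2.0, (trace - root) / 2.0]
    frequencies = [cmath.phase(lam) / (2.0 * math.pi * dt) for lam in eigenvalues]
    growth_rates = [math.log(abs(lam)) / dt for lam in eigenvalues]
    return frequencies, growth_rates


def synthetic_snapshots(frequency, dt, count):
    """Undamped rotation in the plane at the given frequency (synthetic data)."""
    omega = 2.0 * math.pi * frequency
    return [(math.cos(omega * k * dt), math.sin(omega * k * dt)) for k in range(count)]


if __name__ == "__main__":
    freqs, rates = toy_dmd(synthetic_snapshots(0.55, 0.1, 50), 0.1)
    print("frequencies:", freqs)
    print("growth rates:", rates)
```

The eigenvalues come as a complex-conjugate pair, so the oscillation shows up as a positive and a negative frequency of equal magnitude, with growth rates close to zero for this undamped signal.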

Scientific impact of this result:

The Data Exchange & Workflow Portal is a mature solution that gives end users seamless and secure access to high-performance computing resources. Its innovation lies in combining know-how about secure data exchange with an HPC platform; this combination of know-how provision and secure data exchange between HPC centres and SMEs is unique. In particular, the web interface is very easy to use and many tasks are automated, which simplifies the HPC workflow as a whole and gives engineers an easier entry into HPC.

In addition, the data reduction technology ensures a more intelligent, faster transfer of files. Transfer performance increases considerably when the same data sets are transferred repeatedly: if a file is already known to the system, there is no need to transfer it again, and only the changed parts need to be exchanged.
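The idea of exchanging only the changed parts can be sketched with chunk hashing. The source does not describe the mechanism the platform actually uses, so the following is an assumption for illustration: data is split into fixed-size chunks, the receiver's chunk digests are compared with those of the new version, and only chunks with differing digests are sent. All function names and the tiny chunk size are hypothetical.

```python
import hashlib

CHUNK_SIZE = 4  # deliberately tiny for the demo; real systems use KiB to MiB


def chunk_digests(data, chunk_size=CHUNK_SIZE):
    """SHA-256 digest of every fixed-size chunk of `data`."""
    return [
        hashlib.sha256(data[i:i + chunk_size]).hexdigest()
        for i in range(0, len(data), chunk_size)
    ]


def plan_transfer(new_data, known_digests, chunk_size=CHUNK_SIZE):
    """Return {chunk_index: bytes} for chunks the receiver lacks or
    holds in an outdated version."""
    to_send = {}
    for index, digest in enumerate(chunk_digests(new_data, chunk_size)):
        if index >= len(known_digests) or known_digests[index] != digest:
            start = index * chunk_size
            to_send[index] = new_data[start:start + chunk_size]
    return to_send


def apply_transfer(old_data, to_send, new_length, chunk_size=CHUNK_SIZE):
    """Rebuild the new version on the receiver from its old copy
    plus the chunks that were actually transferred."""
    chunks = [old_data[i:i + chunk_size] for i in range(0, new_length, chunk_size)]
    for index, payload in to_send.items():
        chunks[index] = payload
    return b"".join(chunks)[:new_length]


if __name__ == "__main__":
    old = b"AAAABBBBCCCCDDDD"
    new = b"AAAABBBBXXXXDDDD"
    delta = plan_transfer(new, chunk_digests(old))
    print("chunks to send:", sorted(delta))
    print("rebuilt correctly:", apply_transfer(old, delta, len(new)) == new)
```

Fixed-size chunks keep the sketch short but miss changes that shift data, such as an insertion near the start of a file; tools like rsync address this with rolling checksums.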

The developed data analytics toolkit for modal decomposition provides an efficient and user-friendly way to analyse simulation data and extract energetically and dynamically important features, or modes, from complex, high-dimensional flows. To address a broad user community with different backgrounds and levels of expertise in HPC applications, a server/client structure has been implemented, allowing an efficient workflow. With this structure, the actual modal decomposition is performed on the server, which runs in parallel on the HPC cluster and is connected via TCP to the graphical user interface (client) running on the local machine. To efficiently visualise the extracted modes and reconstructed flow fields without writing large amounts of data to disk, the modal decomposition server can be connected to a ParaView server/client configuration via Catalyst, enabling in-situ visualisation.
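The server/client split over TCP can be illustrated with a minimal sketch. It assumes a hypothetical line-based JSON protocol and a placeholder analysis (the mean of a signal) standing in for the modal decomposition; none of the names or the message format are taken from the toolkit. Both ends run in one process on the loopback interface purely to keep the example self-contained.

```python
import json
import socket
import threading


def analyse(request):
    """Placeholder for the server-side analysis: mean of a signal."""
    signal = request["signal"]
    return {"job": request["job"], "mean": sum(signal) / len(signal)}


def serve_one_request(listener):
    """Accept one client, read one JSON line, reply with one JSON line."""
    conn, _addr = listener.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        request = json.loads(reader.readline())
        conn.sendall((json.dumps(analyse(request)) + "\n").encode("utf-8"))


def request_analysis(host, port, payload):
    """Client side: send a job description and wait for the result."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall((json.dumps(payload) + "\n").encode("utf-8"))
        with sock.makefile("r", encoding="utf-8") as reader:
            return json.loads(reader.readline())


def run_demo():
    """Start the server on a free loopback port and issue one request."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        listener.listen(1)
        port = listener.getsockname()[1]
        server = threading.Thread(
            target=serve_one_request, args=(listener,), daemon=True
        )
        server.start()
        reply = request_analysis(
            "127.0.0.1", port, {"job": "demo", "signal": [1.0, 2.0, 3.0]}
        )
        server.join(timeout=5)
    return reply


if __name__ == "__main__":
    print(run_demo())
```

Only the small request and the reduced result cross the connection, which mirrors the motivation given above: the heavy computation stays next to the data on the cluster, and the local client handles interaction only.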

Finally, this demonstrator shows an integrated HPC-based solution that can be used for burner design and optimisation using high-fidelity simulations and data analytics through an interactive workflow portal with an efficient data exchange and data transfer strategy.

Benefits for further research:

- Higher HPC customer retention due to less complex HPC environment

- Reduction of HPC complexity due to web frontend

- Shorter training phases for inexperienced users and reduced support effort for HPC centres

- Calculations can be started from anywhere with a secure connection

- Time and cost savings due to a high degree of automation that streamlines the process chain

- Efficient and user-friendly post-processing and data analytics