FocusCoE at EuroHPC Summit Week 2022

With the support of the FocusCoE project, almost all European HPC Centres of Excellence (CoEs) participated once again in the EuroHPC Summit Week (EHPCSW) this year in Paris, France: the first in-person EHPCSW since the 2019 event in Poland. Hosted by the French HPC agency Grand équipement national de calcul intensif (GENCI), the conference was organised by the Partnership for Advanced Computing in Europe (PRACE), the European Technology Platform for High-Performance Computing (ETP4HPC), the EuroHPC Joint Undertaking (EuroHPC JU), and the European Commission (EC). As usual, this year’s event gathered the main European HPC stakeholders, from technology suppliers and HPC infrastructures to scientific and industrial HPC users in Europe.

At the workshop on the European HPC ecosystem on Tuesday 22 March at 14:45, where the diversity of the ecosystem was presented around the Infrastructure, Applications, and Technology pillars, project coordinator Dr. Guy Lonsdale from Scapos talked about FocusCoE and the CoEs’ common goal.

Later that day, from 16:30 until 18:00, the FocusCoE project hosted a session titled “European HPC CoEs: perspectives for a healthy HPC application eco-system and Exascale” involving most of the EU CoEs. The session discussed the key role of CoEs in the EuroHPC application pillar, focussing on their impact on building a vibrant, healthy HPC application eco-system and on perspectives for Exascale applications. As described by Dr. Andreas Wierse on behalf of EXCELLERAT, “The development is continuous. To prepare companies to make good use of this technology, it’s important to start early. Our task is to ensure continuity from using small systems up to the Exascale, regardless of whether the user comes from a big company or from an SME”.

Keen interest in the agenda was also demonstrated by attendees from HPC-related academia and industry, who filled the hall to standing room only. In light of the call for new EU HPC Centres of Excellence and the increasing return to in-person events like EHPCSW, the strong interest in preparing the EU for Exascale bodes well for the future.

FocusCoE Hosts Intel oneAPI Workshop for the EU HPC CoEs

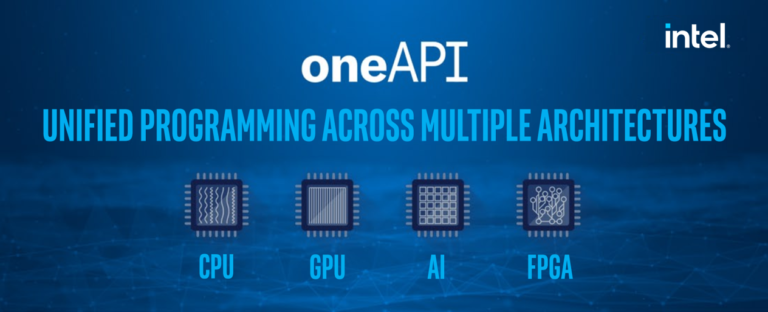

On March 2, 2022, FocusCoE hosted Intel for a workshop introducing the oneAPI development environment. In all, over 40 researchers representing the EU HPC Centres of Excellence (CoEs) were able to attend the single-day workshop to gain an overview of oneAPI. The 8 presenters from Intel gave presentations throughout the day covering the oneAPI vision, design, toolkits, a use case with GROMACS (which is already used by some of the EU HPC CoEs), and specific tools for migration and debugging.

Launched in 2019, the Intel oneAPI cross-industry, open, standards-based unified programming model is being designed to deliver a common developer experience across accelerator architectures. By saving the time otherwise spent designing for specific accelerators, oneAPI is intended to enable faster application performance, more productivity, and greater innovation. As summarized on Intel’s oneAPI website, “Apply your skills to the next innovation, and not to rewriting software for the next hardware platform.” Given the work that EU HPC CoEs are currently doing to optimise codes for Exascale HPC systems, any tools that make this process faster and more efficient can only boost the CoEs’ capacity for innovation and preparedness for future heterogeneous systems.

The oneAPI industry initiative is also encouraging collaboration on the oneAPI specification and compatible oneAPI implementations. To that end, Intel is investing time and expertise into events like this workshop to give researchers the knowledge they need not only to use but also to help improve oneAPI. The presenters also make themselves available after the workshop to answer questions from attendees on an ongoing basis. Throughout our event, participants were able to ask questions and get real-time answers, as well as offers of further support from software architects, technical consulting engineers, and the researcher who presented a use case. Lastly, the full video and slides from the presentations are available below for any CoEs who were unable to attend or would like a second look at the detailed presentations.

SIMAI 2021

The 2020 edition of the biennial congress of the Italian Society of Applied and Industrial Mathematics (SIMAI) was held in Parma, hosted by the University of Parma, from August 30 to September 3, 2021, in a hybrid format (physical and online). The conference aimed to bring together researchers and professionals from academia and industry who are active in the study of mathematical and numerical models and their application to industry and general real-life problems, to stimulate interdisciplinary research in applied mathematics, and to foster interactions between the scientific community and industry. Six plenary lectures covered a wide range of topics. In this edition, a poster session was also organized to broaden the opportunity to disseminate the results of interesting research. A large part of the conference was dedicated to minisymposia, autonomously organized around specific topics by their respective promoters. Furthermore, an Industrial Session bringing together academic and industrial researchers was organized, with more than 70 industry representatives focusing on mathematical problems encountered in R&D areas.

Within the SIMAI conference, the minisymposium on HPC, “European High-Performance Scientific Computing: Opportunities and Challenges for Applied Mathematics”, was organized by ENEA within the framework of project FocusCoE, and a contribution to the aforementioned Industrial Session was given through a talk on hydropower production modelling results obtained by project EoCoE. In the minisymposium agenda of 3 September (9:30-12:00), five presentations (20 min + 5 min Q&A) were given by CoE representatives: Ignacio Pagonabarraga (CoE: E-CAM), Alfredo Buttari (CoE: EoCoE), Francesco Buonocore (CoE: EoCoE), Pasqua D’Ambra (CoE: EoCoE; EuroHPC project: TEXTAROSSA), and Tomaso Esposti Ongaro (CoE: ChEESE). Around 25 participants attended throughout the session.

The FocusCoE contribution to the industrial session of September 1 (17:50-18:15) was a talk by Prof. Bruno Majone (CoE: EoCoE), presented by Andrea Galletti (CoE: EoCoE), titled “Detailed hydropower production modelling over large-scale domains: the HYPERstreamHS framework”.

CoEs at Teratec Forum 2021 and ISC21

With the support of FocusCoE, a number of HPC CoEs will give short presentations at the virtual PRACE booth at the following two HPC-related events, which will take place towards the end of this month: Teratec Forum 2021 and ISC2021. See the schedule below for more details. Please reserve the slots in your calendars; registration details will be provided on the PRACE website soon!

“We are happy to see that FocusCoE was able to help the HPC CoEs to have a significant presence at this year’s editions of ISC and Teratec Forum, two major HPC events, enabled through our good synergies with PRACE”, says Guy Lonsdale, FocusCoE coordinator.

Teratec Forum 2021 schedule

| Date / Event | Time slot (CEST) | Title | Speaker | Organisation |
| --- | --- | --- | --- | --- |
| Tue 22 June | 11:00 – 11:15 | EoCoE-II: Towards exascale for Energy | Edouard Audit, EoCoE-II coordinator | CEA (France) |
| | 14:30 – 14:45 | POP CoE: Free Performance Assessments for the HPC Community | Bernd Mohr | Jülich Supercomputing Centre |
| Thu 24 June | 13:45 – 14:00 | EXCELLERAT – paving the way for the evolution towards Exascale | Amgad Dessoky / Sophia Honisch | HLRS |

ISC 2021 schedule

| Date / Event | Time slot (CEST) | Title | Speaker | Organisation |
| --- | --- | --- | --- | --- |
| Thu 24 June | 13:45 – 14:00 | EXCELLERAT – paving the way for the evolution towards Exascale | Amgad Dessoky / Sophia Honisch | HLRS |
| Fri 25 June | 11:00 – 11:15 | The Center of Excellence for Exascale in Solid Earth (ChEESE) | Alice-Agnes Gabriel | Geophysik, University of Munich |
| | 15:30 – 15:45 | EoCoE-II: Towards exascale for Energy | Edouard Audit, EoCoE-II coordinator | CEA (France) |
| Tue 29 June | 11:00 – 11:15 | Towards a maximum utilization of synergies of HPC Competences in Europe | Bastian Koller, HLRS | HLRS |
| Wed 30 June | 10:45 – 11:00 | CoE | Dr.-Ing. Andreas Lintermann | Jülich Supercomputing Centre, Forschungszentrum Jülich GmbH |
| Thu 1 July | 11:00 – 11:15 | POP CoE: Free Performance Assessments for the HPC Community | Bernd Mohr | Jülich Supercomputing Centre |
| | 14:30 – 14:45 | TREX: an innovative view of HPC usage applied to Quantum Monte Carlo simulations | Anthony Scemama (1), William Jalby (2), Cedric Valensi (2), Pablo de Oliveira Castro (2) | (1) Laboratoire de Chimie et Physique Quantiques, CNRS-Université Paul Sabatier, Toulouse, France (2) Université de Versailles St-Quentin-en-Yvelines, Université Paris Saclay, France |

Please register for the short presentations through the PRACE event pages here:

| PRACE Virtual booth at Teratec Forum 2021 | PRACE Virtual booth at ISC2021 |
| --- | --- |
| prace-ri.eu/event/teratec-forum-2021/ | prace-ri.eu/event/praceisc-2021/ |

List of innovations by the CoEs, spotted by the EU innovation radar

| Title: Biobb, biomolecular modelling building Blocks |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF MANCHESTER – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| FUNDACIO INSTITUT DE RECERCA BIOMEDICA (IRB BARCELONA) – SPAIN |

| Title: GROMACS, a versatile package to perform molecular dynamics |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: HADDOCK, a versatile information-driven flexible docking approach for the modelling of biomolecular complexes |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| UNIVERSITEIT UTRECHT – NETHERLANDS |

| Title: PMX, a versatile (bio-) molecular structure manipulation package |

| Market maturity: Exploring |

| Project: BioExcel |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| Title: Faster Than Real Time (FTRT) environment for high-resolution simulations of earthquake generated tsunamis |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| UNIVERSIDAD DE MALAGA – SPAIN |

| TECHNISCHE UNIVERSITAET MUENCHEN – GERMANY |

| ISTITUTO NAZIONALE DI GEOFISICA E VULCANOLOGIA – ITALY |

| Title: Probabilistic Seismic Hazard Assessment (PSHA) |

| Market maturity: Exploring |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| LUDWIG-MAXIMILIANS-UNIVERSITAET MUENCHEN – GERMANY |

| TECHNISCHE UNIVERSITAET MUENCHEN – GERMANY |

| ISTITUTO NAZIONALE DI GEOFISICA E VULCANOLOGIA – ITALY |

| Title: Urgent Computing services for the impact assessment in the immediate aftermath of an earthquake |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: ChEESE |

| Innovation Topic: Excellent Science |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| BULL SAS – FRANCE |

| Title: Alya, HemeLB, HemoCell, OpenBF, Palabos-Vertebroplasty simulator, Palabos – Flow Diverter Simulator, BAC, HTMD, Playmolecule, Virtual Assay, CT2S, Insigneo Bone Tissue Suit |

| Market maturity: Exploring |

| Project: CompBioMed |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| UNIVERSITY COLLEGE LONDON – UNITED KINGDOM |

| ACELLERA LABS SL – SPAIN |

| Title: E-CAM software repository |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE – SWITZERLAND |

| UNIVERSITY COLLEGE DUBLIN, NATIONAL UNIVERSITY OF IRELAND, DUBLIN – IRELAND |

| UNITED KINGDOM RESEARCH AND INNOVATION – UNITED KINGDOM |

| Title: E-CAM Training offer on effective use of HPC simulations in quantum chemistry |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE – SWITZERLAND |

| UNIVERSITY COLLEGE DUBLIN, NATIONAL UNIVERSITY OF IRELAND, DUBLIN – IRELAND |

| FREIE UNIVERSITAET BERLIN – GERMANY |

| Title: Improved Simulation Software Packages for Molecular Dynamics |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| SCIENCE AND TECHNOLOGY FACILITIES COUNCIL – UNITED KINGDOM |

| TECHNISCHE UNIVERSITAET WIEN – AUSTRIA |

| UNIVERSITEIT VAN AMSTERDAM – NETHERLANDS |

| Title: Improved software modules for Meso– and multi–scale modelling |

| Market maturity: Exploring |

| Project: E-CAM |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| FREIE UNIVERSITAET BERLIN – GERMANY |

| Title: Code auditing, optimization and performance assessment services for energy-oriented HPC simulations |

| Market maturity: Exploring |

| Project: EoCoE |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Consultancy Services for using HPC Simulations for Energy related applications |

| Market maturity: Exploring |

| Project: EoCoE |

| Innovation Topic: Excellent Science |

| COMMISSARIAT A L ENERGIE ATOMIQUE ET AUX ENERGIES ALTERNATIVES – FRANCE |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: New coupled earth system model |

| Market maturity: Tech Ready |

| Project: ESiWACE |

| Innovation Topic: Excellent Science |

| BULL SAS – FRANCE |

| MET OFFICE – UNITED KINGDOM |

| EUROPEAN CENTRE FOR MEDIUM-RANGE WEATHER FORECASTS – UNITED KINGDOM |

| Title: AMR capability and Accelerated Computing in AVBP code |

| Market maturity: Exploring |

| Project: EXCELLERAT |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| CERFACS CENTRE EUROPEEN DE RECHERCHE ET DE FORMATION AVANCEE EN CALCUL SCIENTIFIQUE SOCIETE CIVILE – FRANCE |

| Title: AMR capability in Alya code to enable advanced simulation services for engineering |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: Data Exchange & Workflow Portal: secure, fast and traceable online data transfer between data generators and HPC centers |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: FPGA Acceleration for Exascale Applications based on ALYA and AVBP codes |

| Market maturity: Tech Ready |

| Market creation potential: Noteworthy |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: Open source mode decomposition toolkit for exascale data analysis |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| RHEINISCH-WESTFAELISCHE TECHNISCHE HOCHSCHULE AACHEN – GERMANY |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| SSC SERVICES GMBH – GERMANY |

| Title: Use of GASPI to improve performance of CODA |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| Title: GPU acceleration in TPLS to exploit the GPU-based architectures for Exascale |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: In-Situ Analysis of CFD Simulations |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Interactive in situ visualization in VR |

| Market maturity: Tech Ready |

| Market creation potential: High |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| UNIVERSITY OF STUTTGART – GERMANY |

| Title: Machine Learning Methods for Computational Fluid Dynamics (CFD) Data |

| Market maturity: Tech Ready |

| Market creation potential: Noteworthy |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. – GERMANY |

| Title: Parallel I/O in TPLS using PETSc library |

| Market maturity: Tech Ready |

| Project: Excellerat |

| Innovation Topic: Excellent Science |

| THE UNIVERSITY OF EDINBURGH – UNITED KINGDOM |

| RHEINISCH-WESTFAELISCHE TECHNISCHE HOCHSCHULE AACHEN – GERMANY |

| KUNGLIGA TEKNISKA HOEGSKOLAN – SWEDEN |

| Title: Highly scalable Material Science Simulation Codes |

| Market maturity: Exploring |

| Project: MaX |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| E4 COMPUTER ENGINEERING SPA – ITALY |

| Title: Quantum Simulation as a Service |

| Market maturity: Exploring |

| Market creation potential: Noteworthy |

| Project: MaX |

| Innovation Topic: Excellent Science |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| CINECA CONSORZIO INTERUNIVERSITARIO – ITALY |

| Title: Simulation Code Optimisation and Scaling |

| Market maturity: Exploring |

| Project: MaX |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| EIDGENOESSISCHE TECHNISCHE HOCHSCHULE ZUERICH – SWITZERLAND |

| Title: Novel Materials Discovery (NOMAD) Repository |

| Market maturity: Business Ready |

| Project: NoMaD |

| Innovation Topic: Excellent Science |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| HUMBOLDT-UNIVERSITAET ZU BERLIN – GERMANY |

| BAYERISCHE AKADEMIE DER WISSENSCHAFTEN – GERMANY |

| Title: NOMAD Encyclopedia Service: allows users to see, compare, explore, and understand computed materials data |

| Market maturity: Tech Ready |

| Project: NoMaD |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| MAX-PLANCK-GESELLSCHAFT ZUR FORDERUNG DER WISSENSCHAFTEN EV – GERMANY |

| HUMBOLDT-UNIVERSITAET ZU BERLIN – GERMANY |

| Title: An ICT platform prototype for systematically tracing public sentiment, its evolution towards a policy, its components and arguments linked to them. |

| Market maturity: Tech Ready |

| Project: NOMAD |

| Innovation Topic: Smart & Sustainable Society |

| ATHENS TECHNOLOGY CENTER ANONYMI BIOMICHANIKI EMPORIKI KAI TECHNIKI ETAIREIA EFARMOGON YPSILIS TECHNOLOGIAS – GREECE |

| NATIONAL CENTER FOR SCIENTIFIC RESEARCH “DEMOKRITOS” – GREECE |

| CRITICAL PUBLICS LTD – UNITED KINGDOM |

| Title: Customer-Specific Performance Analysis for Parallel Codes |

| Market maturity: Exploring |

| Project: POP |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| UNIVERSITAET STUTTGART – GERMANY |

| Title: Repository of powerful tools (codes, pattern/best-practice descriptions, experimental results) for the HPC community |

| Market maturity: Exploring |

| Project: POP |

| Innovation Topic: Excellent Science |

| BARCELONA SUPERCOMPUTING CENTER – CENTRO NACIONAL DE SUPERCOMPUTACION – SPAIN |

| TERATEC – FRANCE |

Watch The Presentations Of The First CoE Joint Technical Workshop

Watch the recordings of the presentations from the first technical CoE workshop. The virtual event was organized by the three HPC Centres of Excellence ChEESE, EXCELLERAT and HiDALGO. The agenda for the workshop was structured in these four sessions:

Session 1: Load balancing

Session 2: In situ and remote visualisation

Session 3: Co-Design

Session 4: GPU Porting

You can also download a PDF version for each of the recorded presentations. The workshop took place on January 27 – 29, 2021.

Session 1: Load balancing

Title: Introduction by chairperson

Speaker: Ricard Borell (BSC)

Title: Load balancing strategies used in AVBP

Speaker: Gabriel Staffelbach (CERFACS)

Title: Addressing load balancing challenges due to fluctuating performance and non-uniform workload in SeisSol and ExaHyPE

Speaker: Michael Bader (TUM)

Title: On Discrete Load Balancing with Diffusion Type Algorithms

Speaker: Robert Elsäßer (PLUS)

Session 2: In situ and remote visualisation

Title: Introduction by chairperson

Speaker: Lorenzo Zanon & Anna Mack (HLRS)

Title: An introduction to the use of in-situ analysis in HPC

Speaker: Miguel Zavala (KTH)

Title: In situ visualisation service in Prace6IP

Speaker: Simone Bnà (CINECA)

Title: Web-based Visualisation of air pollution simulation with COVISE

Speaker: Anna Mack (HLRS)

Title: Virtual Twins, Smart Cities and Smart Citizens

Speaker: Leyla Kern, Uwe Wössner, Fabian Dembski (HLRS)

Session 3: Co-Design

Title: Introduction by chairperson, and Excellerat’s Co-Design Methodology

Speaker: Gavin Pringle (EPCC)

Title: Accelerating codes on reconfigurable architectures

Speaker: Nick Brown (EPCC)

Title: Benchmarking of Current Architectures for Improvements

Speaker: Nikela Papadopoulou (ICCS)

Title: Example Co-design Approach with the Seissol and Specfem3D Practical cases

Speaker: Georges-Emmanuel Moulard (ATOS)

Title: Exploitation of Exascale Systems for Open-Source Computational Fluid Dynamics by Mainstream Industry

Speaker: Ivan Spisso (CINECA)

Session 4: GPU Porting

Title: Introduction

Speaker: Giorgio Amati (CINECA)

Title: GPU Porting and strategies by ChEESE

Speaker: Piero Lanucara (CINECA)

Title: GPU porting by third party library

Speaker: Simone Bnà (CINECA)

Video of the Week: ChEESE Women in Science

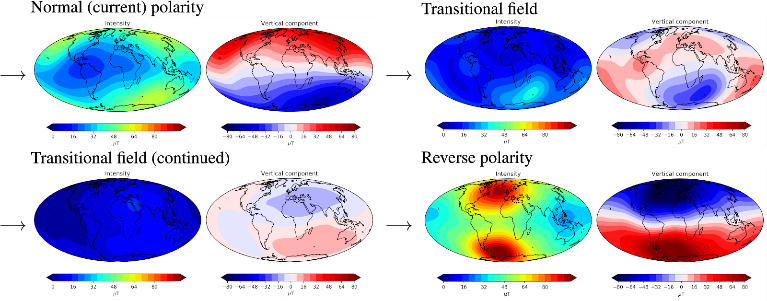

Geomagnetic forecasts

Workflow

XSHELLS produces simulated reversals, which are subsequently analysed and assessed using the parallel Python processing chain. Through ChEESE we are working to orchestrate this workflow using the WMS_light software developed within the ChEESE consortium.

Software involved

Post-processing: Python 3

External library: SHTns

Collaborating Institutions

IPGP, CNRS

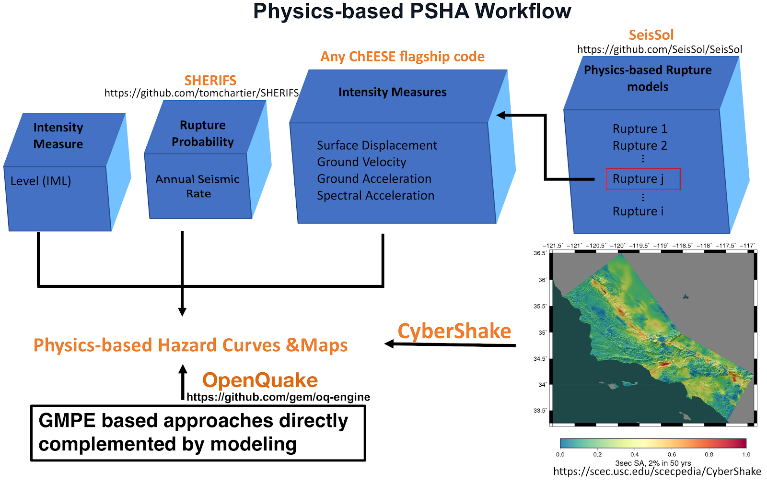

Physics-Based Probabilistic Seismic Hazard Assessment (PSHA)

Workflow

The workflow of this pilot is shown in Figure 1.

First, the SeisSol code is used to run fully non-linear dynamic rupture simulations, accounting for various fault geometries, 3D velocity structures, off-fault plasticity, and model parameter uncertainties, to build a fully physics-based dynamic rupture database of mechanically plausible scenarios.

Then the linked post-processing Python codes are used to extract ground shaking measures (PGD, PGV, PGA and SA at different periods) from the surface output of the SeisSol simulations to build a ground shaking database.

SHERIFS uses a logic tree method, taking the fault-to-fault ruptures from the dynamic rupture database as input, and converts the slip rate to the annual seismic rate given the geometry of the fault system.

With the rupture probability estimation from SHERIFS and the ground shaking from the SeisSol simulations, we can generate hazard curves for selected site locations and hazard maps for the study region.

In addition, OpenQuake, using the physics-based ground motion models/prediction equations established from the ground shaking database of the fully dynamic rupture simulations, and CyberShake, which is based on kinematic simulations, can be used to perform PSHA and complement the fully physics-based PSHA.
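The hazard-curve step described above can be illustrated with a minimal sketch. It is not the pilot's actual code: the function names and the scenario values are hypothetical, each scenario is reduced to one annual rate and one PGA value at a single site, and earthquake occurrence is assumed to be Poissonian so that the probability of exceedance over a time window T is 1 − exp(−λT).

```python
import math

def annual_exceedance_rate(scenarios, threshold):
    """Sum the annual rates of all scenarios whose ground motion exceeds the threshold."""
    return sum(rate for rate, ground_motion in scenarios if ground_motion > threshold)

def hazard_curve(scenarios, thresholds, years=50.0):
    """Return (threshold, annual rate, probability of exceedance in `years`) tuples.

    Assumes Poissonian occurrence: PoE = 1 - exp(-rate * years).
    """
    curve = []
    for threshold in thresholds:
        rate = annual_exceedance_rate(scenarios, threshold)
        poe = 1.0 - math.exp(-rate * years)
        curve.append((threshold, rate, poe))
    return curve

# Hypothetical scenarios at one site: (annual rate, PGA in g).
# In the workflow above, rates would come from SHERIFS and PGA from SeisSol output.
scenarios = [(0.01, 0.10), (0.004, 0.25), (0.001, 0.60)]
curve = hazard_curve(scenarios, thresholds=[0.05, 0.2, 0.5])

for threshold, rate, poe in curve:
    print(f"PGA > {threshold:.2f} g: rate = {rate:.4f}/yr, PoE(50 yr) = {poe:.3f}")
```

Repeating the same calculation for every site in the study region, at a fixed probability level, is one way a hazard map could be assembled from such curves.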

Software involved

SeisSol (LMU)

ExaHyPE (TUM)

AWP-ODC (SCEC)

SHERIFS (Fault2SHA and GEM)

sam(oa)² (TUM)

OpenQuake (GEM): https://github.com/gem/oq-engine

Pre-processing:

Mesh generation tools: Gmsh (open source), Simmetrix/SimModeler (free for academic institutions), PUMGen

Post-processing & Visualization: Paraview, Python tools

Collaborating Institutions

IMO, BSC, TUM, INGV, SCEC, GEM, FAULT2SHA, Icelandic Civil Protection, Italian Civil Protection

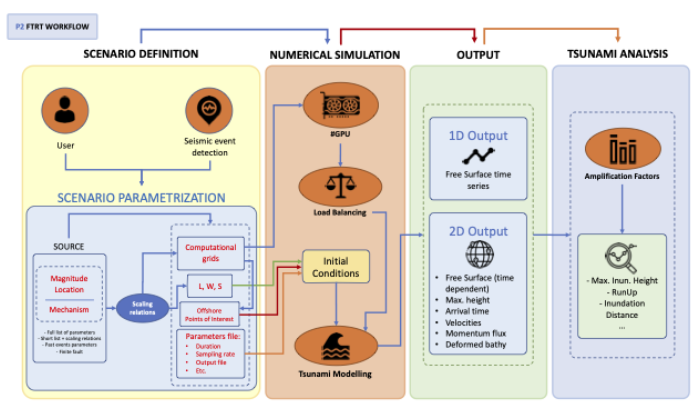

Faster Than Real-Time Tsunami Simulations

Workflow

The Faster-Than-Real-Time (FTRT) prototype for extremely fast and robust tsunami simulations is based on GPU/multi-GPU (NVIDIA) architectures and is able to use earthquake information from different locations and with heterogeneous content (full Okada parameter set, or hypocenter and magnitude plus Wells and Coppersmith (1994)). Using these inhomogeneous inputs, and following the FTRT workflow (see Fig. 1), tsunami computations are launched for a single scenario or a set of related scenarios for the same event. Earthquake information is retrieved automatically and sent to the system, which launches on-the-fly simulations automatically, so several scenarios are computed at the same time. As updated information about the source is provided, new simulations should be launched. As output, several options are available, tailored to end-user needs: sea surface height and its maximum, simulated isochrones and arrival times at coastal areas, estimated tsunami coastal wave height, and time series at Points of Interest (POIs) and oceanographic sensors.

A first successful implementation has been carried out for the Emergency Response Coordination Centre (ERCC), a service provided by the ARISTOTLE-ENHSP Project. The system implemented for ARISTOTLE follows the general workflow presented in Figure 1. Currently, in this system, a single source is used to assess the hazard and the computational grids are predefined. The computed wall-clock time is provided for each experiment, and the outputs of the simulation are the maximum water height and arrival times over the whole domain, and water height time series at a set of selected POIs, predefined for each domain.
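As an illustration of the two summary outputs mentioned above, the following sketch derives a maximum water height and an arrival time from a water height time series at one POI. It is not taken from Tsunami-HySEA: the function name, the sample series and the 1 cm arrival threshold are assumptions made for this example, and an operational system may define arrival differently.

```python
def summarize_poi(times_s, heights_m, arrival_threshold_m=0.01):
    """Return (maximum water height, arrival time) for one POI time series.

    The arrival time is taken as the first time at which the absolute water
    height exceeds `arrival_threshold_m` (an assumed criterion); it is None
    if the threshold is never exceeded.
    """
    if not heights_m or len(times_s) != len(heights_m):
        raise ValueError("times and heights must be non-empty and of equal length")
    max_height = max(heights_m)
    arrival = next(
        (t for t, h in zip(times_s, heights_m) if abs(h) > arrival_threshold_m),
        None,
    )
    return max_height, arrival

# Hypothetical series: time in seconds since the earthquake, height in metres.
times_s = [0, 60, 120, 180, 240]
heights_m = [0.0, 0.002, -0.03, 0.45, 0.20]

max_h, arrival = summarize_poi(times_s, heights_m)
print(f"maximum height: {max_h} m, arrival time: {arrival} s")
```

Using the absolute value for the arrival test means a leading trough (an initial drop in sea level) also counts as the arrival of the wave.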

A library of Python codes is used to generate the input data required to run the HySEA codes, and to extract the topo-bathymetric data and construct the grids used by the HySEA codes.

Software involved

Tsunami-HySEA has been successfully tested with the following tools and versions:

Compilers: GNU C++ compiler 7.3.0 or 8.4.0, OpenMPI 4.0.1, Spectrum MPI 10.3.1, CUDA 10.1 or 10.2

Management tools: CMake 3.9.6 or 3.11.4

External/third party libraries: NetCDF 4.6.1 or 4.7.3, PnetCDF 1.11.2 or 1.12.0

Pre-processing:

Nesting mesh generation tools.

In-house developed Python tools for pre-processing purposes.

Visualization tools:

In-house developed Python tools.

Collaborating Institutions

UMA, INGV, NGI, IGN, PMEL/NOAA (with a role in pilot’s development).

Other institutions benefiting from use case results with which we collaborate:

IEO, IHC, IGME, IHM, CSIC, CCS, Junta de Andalucía (all Spain); Italian Civil Protection, Seismic network of Puerto Rico (US), SINAMOT (Costa Rica), SHOA and UTFSM (Chile), GEUS (Denmark), JRC (EC), University of Malta, INCOIS (India), SGN (Dominican Republic), UNESCO, NCEI/NOAA (US), ICG/NEAMTWS, ICG/CARIBE-EWS, among others.