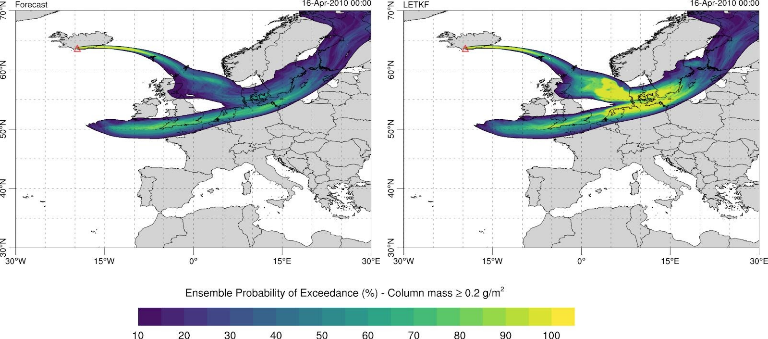

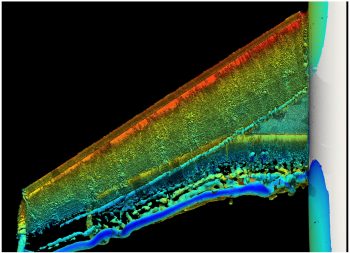

High-Resolution Volcanic Ash Dispersal Forecast

Workflow

The use case workflow includes the following components:

- Download and pre-processing of the required meteorological data.
- Download of raw satellite data and quantitative retrieval of the cloud mass (SEVIRI retrievals at 0.1° resolution and 1-hour frequency).
- Execution of the ensemble forecast with the FALL3D model (the HPC component of the workflow).

No WMS (Web Map Service) is available yet (work in progress).

Use Case Owner

Arnau Folch

Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS)

Collaborating Institutions

BSC

INGV

IMO

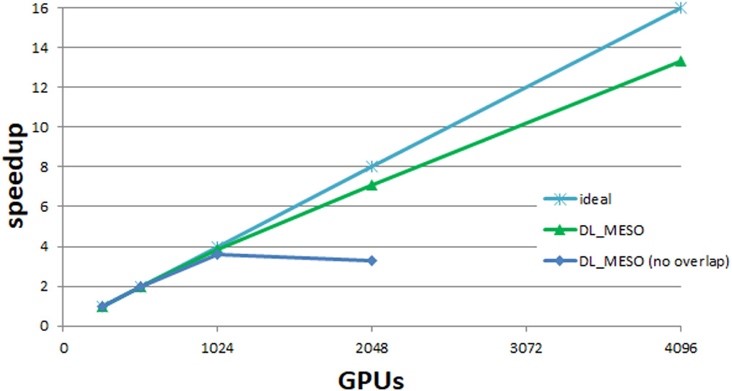

Mesoscale simulation of billion-atom complex systems using thousands of GPGPUs

Use Case Owner

Dr. Jony Castagna, computer scientist at UKRI STFC Daresbury Laboratory, E-CAM programmer

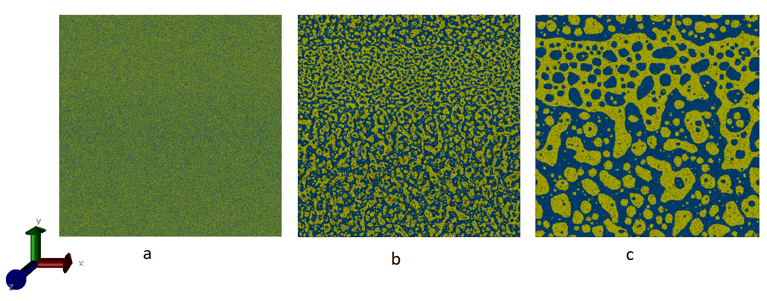

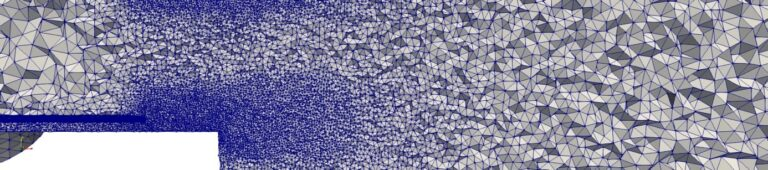

EXCELLERAT: Enabling parallel mesh adaptation with Treeadapt

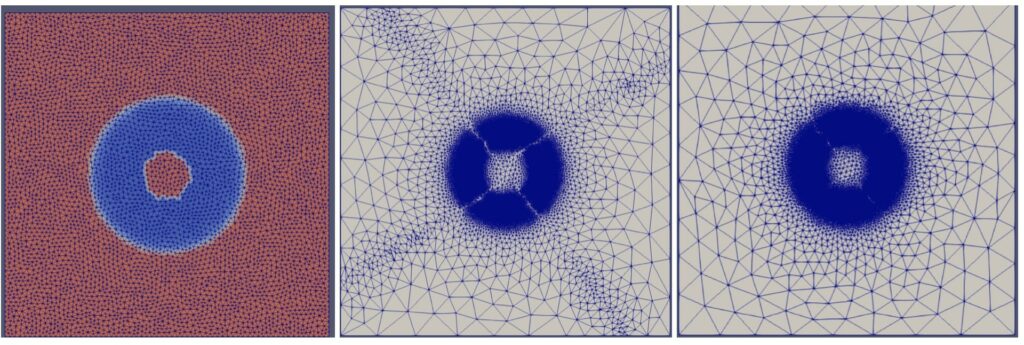

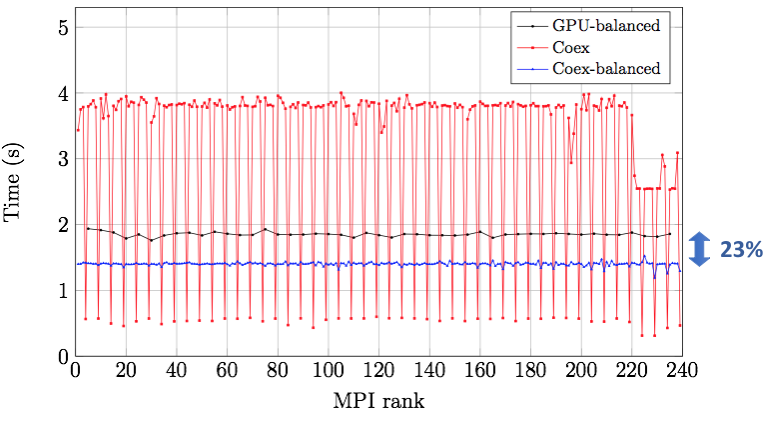

Full airplane simulations on Heterogeneous Architectures

A solution based on Dynamic Load Balancing

Technologies

Alya CFD code

Use Case Owner

Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS)

Collaborating Institutions

Barcelona Supercomputing Center (BSC)