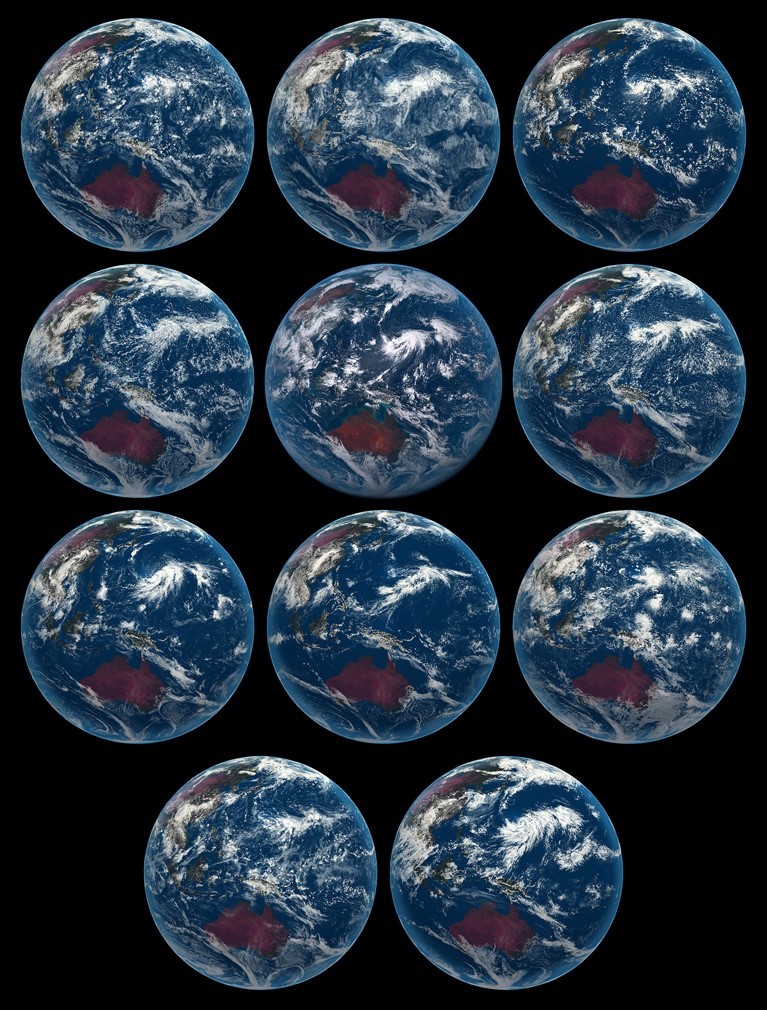

DYAMOND intercomparison project for storm-resolving global weather and climate models

Short description

Results & Achievements

Objectives

Technologies

CDO, ParaView, Jupyter, server-side processing

Collaborating Institutions

DKRZ, MPI-M (and many others)

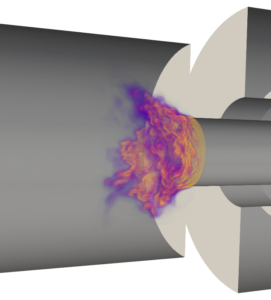

Prediction of pollutants and design of low-emission burners

Short description

Objectives

Technologies

CLIO, Alya, Nek5000, OpenFOAM, PRECISE_UNS

Use Case Owner

Barcelona Supercomputing Center (BSC)

Collaborating Institutions

BSC, RWTH, TUE, UCAM, TUD, ETHZ, AUTH

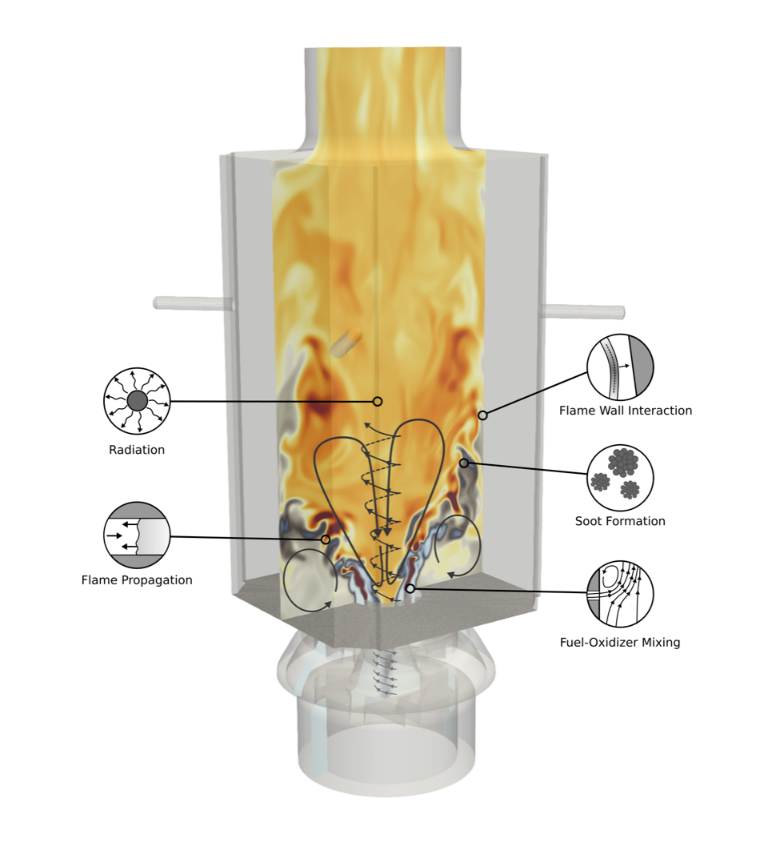

Prediction of soot formation in practical applications

Short description

Objectives

Technologies

CLIO, OpenFOAM, PRECISE_UNS, Alya, Nek5000

Use Case Owner

Barcelona Supercomputing Center (BSC)

Collaborating Institutions

UCAM, TUD, TUE, AUTH, ETHZ

Optimization of Earth System Models on the path to the new generation of Exascale high-performance computing systems

A Use Case by

Short description

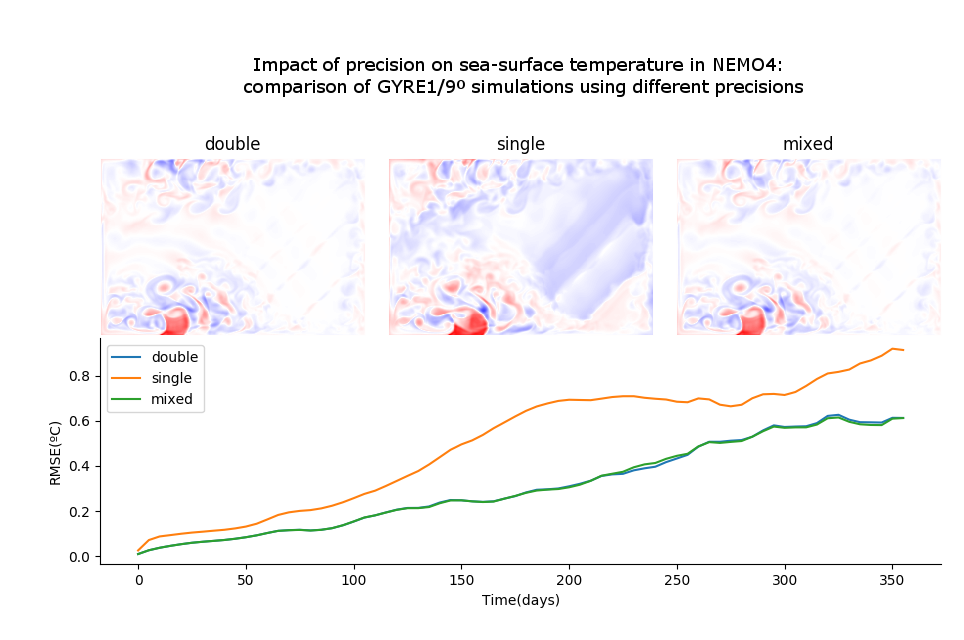

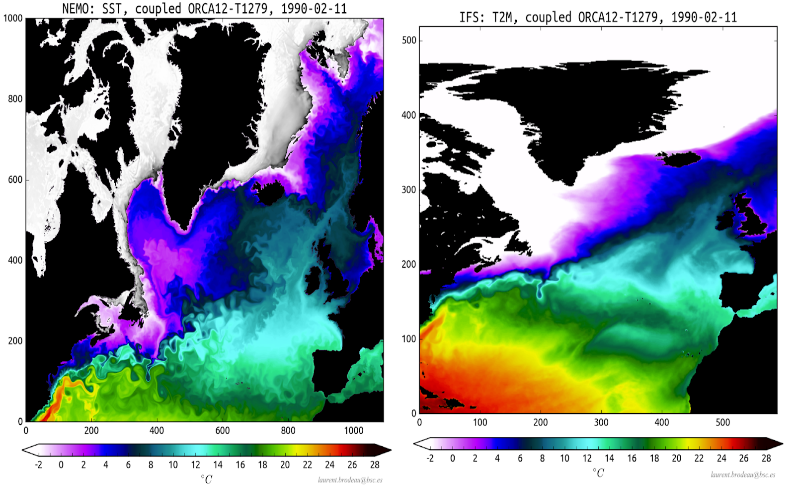

Results & Achievements

Objectives

Use Case Owner

Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS)

Mario Acosta: mario.acosta@bsc.es

Kim Serradell: kim.serradell@bsc.es

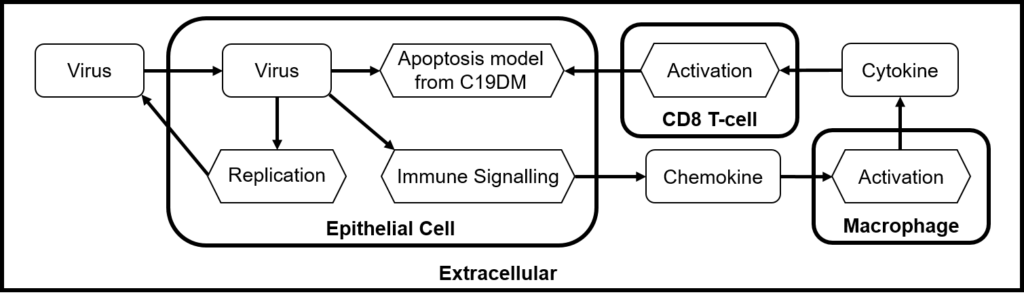

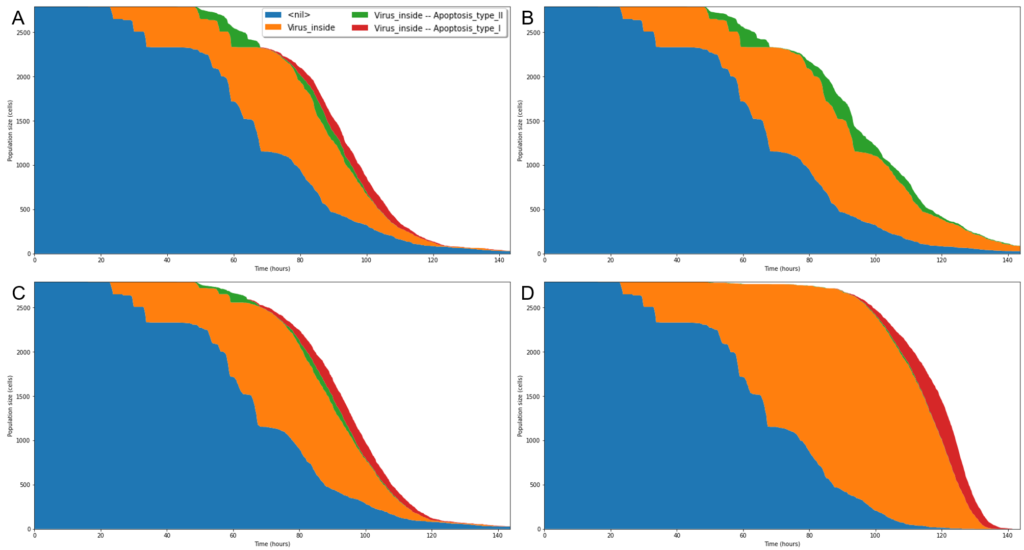

HPC-enabled multiscale simulation helps uncover mechanistic insights of the SARS-CoV-2 infection

Short description

Results & Achievements

Objectives

Use Case Owner

Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS)

Increasing accuracy in the automotive field simulations

Short description

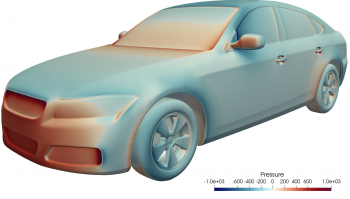

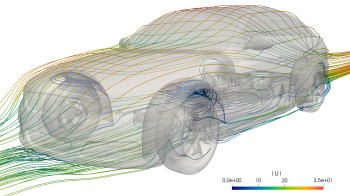

Results & Achievements

Objectives

Collaborating Institutions

–

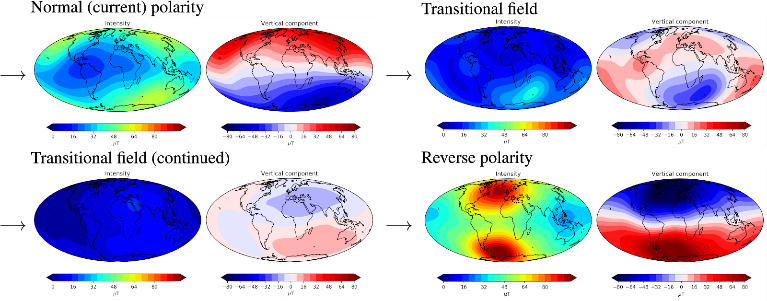

Geomagnetic forecasts

A Use Case by

Short description

Objectives

Technologies

Workflow

XSHELLS produces simulated reversals, which are then analysed and assessed using a parallel Python processing chain. Within ChEESE, we are working to orchestrate this workflow using the WMS_light software developed by the ChEESE consortium.
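As an illustration of the kind of post-processing step such a chain might contain, the sketch below counts polarity reversals in a time series of the axial dipole. It is not taken from the XSHELLS or ChEESE code: the function name `count_reversals`, the `min_amplitude` parameter, and the idea of ignoring weak-field samples are all assumptions made for this example.

```python
def count_reversals(axial_dipole, min_amplitude=0.0):
    """Count sign changes of the axial dipole in a simulated time series.

    axial_dipole:  sequence of axial dipole values, one per output step
    min_amplitude: samples with |value| <= min_amplitude are ignored, so that
                   brief excursions through a weak field are not counted
                   (hypothetical criterion, chosen for illustration only)
    """
    polarity = 0      # 0 means "no stable polarity seen yet"
    reversals = 0
    for value in axial_dipole:
        if abs(value) <= min_amplitude:
            continue  # field too weak to assign a polarity
        sign = 1 if value > 0 else -1
        if polarity != 0 and sign != polarity:
            reversals += 1
        polarity = sign
    return reversals


# Synthetic series for demonstration, not simulation output.
series = [1.0, 0.8, 0.05, -0.6, -0.9, -0.02, 0.03, -0.7, 0.5]
stable_reversals = count_reversals(series, min_amplitude=0.1)
all_sign_changes = count_reversals(series)
```

Raising `min_amplitude` trades sensitivity for robustness: small oscillations around zero are skipped and only transitions between two well-established polarities are counted.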

Software involved

Post-processing: Python 3

External library: SHTns

Collaborating Institutions

IPGP, CNRS

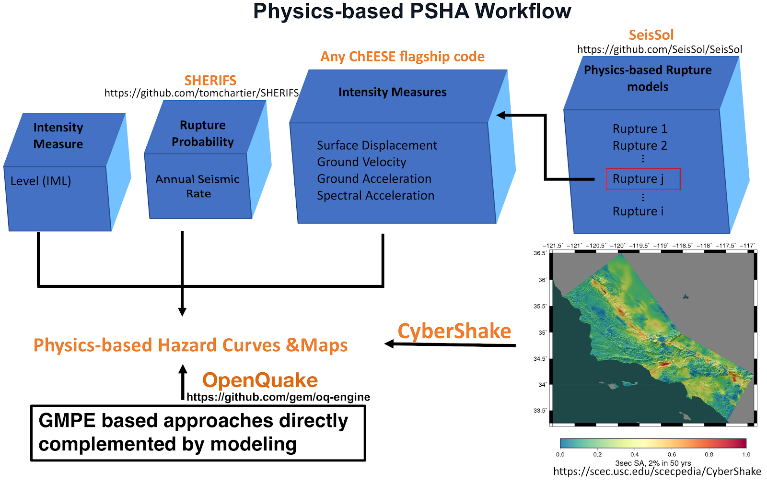

Physics-Based Probabilistic Seismic Hazard Assessment (PSHA)

Short description

Results & Achievements

Objectives

Technologies

Workflow

The workflow of this pilot is shown in Figure 1.

First, the SeisSol code is used to run fully non-linear dynamic rupture simulations that account for various fault geometries, 3D velocity structures, off-fault plasticity, and model parameter uncertainties. The result is a fully physics-based dynamic rupture database of mechanically plausible scenarios.

The linked post-processing Python codes then extract ground shaking measures (PGD, PGV, PGA, and SA at different periods) from the surface output of the SeisSol simulations to build a ground shaking database.

SHERIFS uses a logic-tree method that takes the fault-to-fault ruptures from the dynamic rupture database as input and converts fault slip rates into annual seismic rates, given the geometry of the fault system.

With the rupture probability estimates from SHERIFS and the ground shaking from the SeisSol simulations, we can generate hazard curves for selected site locations and hazard maps for the study region.

In addition, OpenQuake can use physics-based ground motion models (prediction equations) derived from the ground shaking database of fully dynamic rupture simulations. Together with CyberShake, which is based on kinematic simulations, it can be used to perform PSHA and complement the fully physics-based PSHA.
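The step that combines annual rupture rates with simulated ground shaking into a hazard curve can be sketched as follows. This is a minimal illustration of the standard rate-of-exceedance sum under a Poisson assumption, not the pilot's actual code: the function names, the input layout (one rate and one ground-motion value per scenario at a single site), and the numbers are assumptions made for this example.

```python
import math


def hazard_curve(rupture_rates, ground_motions, thresholds):
    """Annual rate of exceedance at one site for each ground-motion level.

    rupture_rates:  annual occurrence rate of each rupture scenario (1/yr)
    ground_motions: simulated ground motion (e.g. PGA in g) that each
                    scenario produces at the site
    thresholds:     ground-motion levels at which the curve is evaluated
    """
    if len(rupture_rates) != len(ground_motions):
        raise ValueError("need one ground-motion value per rupture rate")
    curve = []
    for level in thresholds:
        # Sum the rates of all scenarios whose shaking exceeds this level.
        rate = sum(r for r, gm in zip(rupture_rates, ground_motions) if gm > level)
        curve.append(rate)
    return curve


def poisson_exceedance_probability(annual_rate, years):
    """Probability of at least one exceedance in `years` (Poisson model)."""
    return 1.0 - math.exp(-annual_rate * years)


# Illustrative values only, not results from the pilot.
rates = [0.01, 0.002, 0.0005]   # annual rate per scenario
pga = [0.1, 0.3, 0.6]           # PGA at the site per scenario (g)
levels = [0.05, 0.2, 0.5]       # thresholds (g)

curve = hazard_curve(rates, pga, levels)
p50 = poisson_exceedance_probability(curve[1], 50)
```

Evaluating the same function for every point of a grid, at a fixed probability level, would give the values needed for a hazard map.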

Software involved

SeisSol (LMU)

ExaHyPE (TUM)

AWP-ODC (SCEC)

SHERIFS (Fault2SHA and GEM)

sam(oa)² (TUM)

OpenQuake (GEM): https://github.com/gem/oq-engine

Pre-processing:

Mesh generation tools: Gmsh (open source), Simmetrix/SimModeler (free for academic institutions), PUMGen

Post-processing & Visualization: ParaView, Python tools

Collaborating Institutions

IMO, BSC, TUM, INGV, SCEC, GEM, FAULT2SHA, Icelandic Civil Protection, Italian Civil Protection

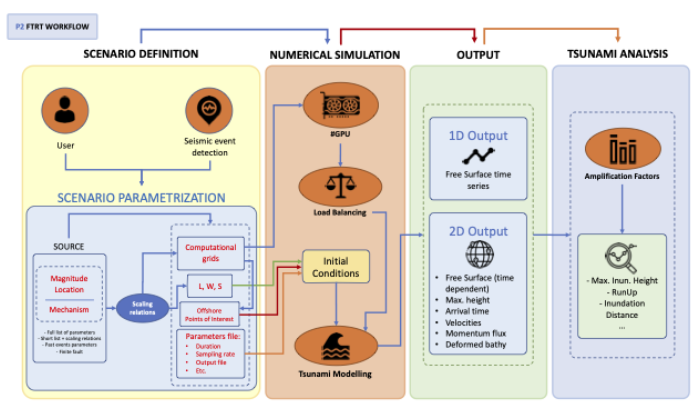

Faster Than Real-Time Tsunami Simulations

Short description

Results & Achievements

Objectives

Technologies

Workflow

The Faster-Than-Real-Time (FTRT) prototype for extremely fast and robust tsunami simulations is based on GPU/multi-GPU (NVIDIA) architectures. It can use earthquake information from different sources and with heterogeneous content (a full Okada parameter set, or hypocenter and magnitude combined with Wells and Coppersmith (1994)).

Using these inhomogeneous inputs, and following the FTRT workflow (see Fig. 1), tsunami computations are launched for a single scenario or for a set of related scenarios for the same event. Earthquake information is retrieved automatically and sent to the system, and on-the-fly simulations are launched automatically, so several scenarios are computed at the same time. As updated information about the source becomes available, new simulations are launched.

Several output options are available and can be tailored to end-user needs: sea surface height and its maximum, simulated isochrones and arrival times at coastal areas, estimated tsunami coastal wave height, and time series at Points of Interest (POIs) and oceanographic sensors.

A first successful implementation has been completed for the Emergency Response Coordination Centre (ERCC), a service provided by the ARISTOTLE-ENHSP Project. The system implemented for ARISTOTLE follows the general workflow presented in Figure 1. Currently, this system uses a single source to assess the hazard, and the computational grids are predefined. The wall-clock time is reported for each experiment. The simulation outputs are the maximum water height and arrival times over the whole domain, and water height time series at a set of selected POIs that are predefined for each domain.

A library of Python codes is used to generate the input data required to run the HySEA codes, to extract the topo-bathymetric data, and to construct the grids used by the HySEA codes.
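To make the POI outputs concrete, the sketch below derives a maximum water height and an arrival time from a single water height time series. It is not part of Tsunami-HySEA or the ARISTOTLE system: the function name `summarize_poi`, the 1 cm arrival threshold, and the sample values are assumptions chosen for illustration.

```python
def summarize_poi(times_s, heights_m, arrival_threshold_m=0.01):
    """Return (maximum water height, arrival time) for one Point of Interest.

    times_s:             sample times in seconds since the event origin
    heights_m:           water height anomaly at the POI in metres
    arrival_threshold_m: the wave is considered to have arrived at the first
                         sample whose absolute height reaches this value
                         (hypothetical criterion; the real system may differ)
    """
    if len(times_s) != len(heights_m):
        raise ValueError("times and heights must have the same length")
    if not heights_m:
        raise ValueError("time series is empty")

    max_height = max(heights_m)

    arrival_time = None  # stays None if the threshold is never reached
    for t, h in zip(times_s, heights_m):
        if abs(h) >= arrival_threshold_m:
            arrival_time = t
            break
    return max_height, arrival_time


# Synthetic series for demonstration, not simulation output.
times = [0, 60, 120, 180, 240]
heights = [0.0, 0.002, -0.03, 0.45, 0.20]
max_height, arrival_time = summarize_poi(times, heights)
```

Using the absolute value for the arrival test means an initial withdrawal of the sea (a negative anomaly) also counts as the tsunami's arrival.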

Software involved

Tsunami-HySEA has been successfully tested with the following tools and versions:

Compilers: GNU C++ compiler 7.3.0 or 8.4.0, OpenMPI 4.0.1, Spectrum MPI 10.3.1, CUDA 10.1 or 10.2

Management tools: CMake 3.9.6 or 3.11.4

External/third party libraries: NetCDF 4.6.1 or 4.7.3, PnetCDF 1.11.2 or 1.12.0

Pre-processing:

Nesting mesh generation tools.

In-house developed Python tools for pre-processing purposes.

Visualization tools:

In-house developed Python tools.

Collaborating Institutions

UMA, INGV, NGI, IGN, PMEL/NOAA (with a role in pilot’s development).

Other institutions benefiting from use case results with which we collaborate:

IEO, IHC, IGME, IHM, CSIC, CCS, Junta de Andalucía (all Spain); Italian Civil Protection, Seismic network of Puerto Rico (US), SINAMOT (Costa Rica), SHOA and UTFSM (Chile), GEUS (Denmark), JRC (EC), University of Malta, INCOIS (India), SGN (Dominican Republic), UNESCO, NCEI/NOAA (US), ICG/NEAMTWS, ICG/CARIBE-EWS, among others.

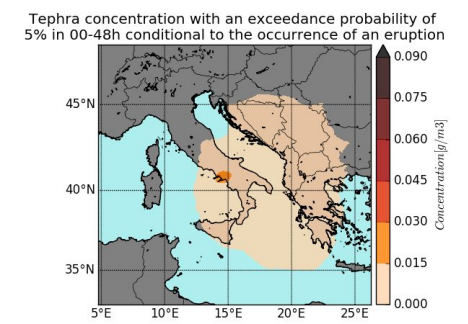

Probabilistic Volcanic Hazard Assessment (PVHA)

Short description

Results & Achievements

Objectives

Technologies

Workflow

PVHA_WF_st fetches the monitoring data (seismic and deformation) and, together with the configuration file of the volcano, calculates the eruptive forecasting (probability curves and vent opening positions) and uses the output file from alphabeta_MPI.py to create the volcanic hazard probabilities and maps.

PVHA_WF_lt uses the configuration file of the volcano to calculate the eruptive forecasting and, together with the output file from alphabeta_MPI.py, creates the volcanic hazard probabilities and maps.

The meteorological data download is fully automated. PVHA_WF_st and PVHA_WF_lt connect to the Climate Data Store (the Copernicus data server) and download the meteorological data associated with a specified analysis grid. FALL3D later uses these data to compute tephra deposition.
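Both workflows above combine an eruptive forecast (eruption probability and vent opening positions) with conditional hazard results to obtain hazard probabilities. A minimal sketch of such a combination at one grid point, using the law of total probability, is given below. It does not reproduce PVHA_WF_st, PVHA_WF_lt, or the output format of alphabeta_MPI.py: the function name, its arguments, and the numbers are assumptions made for this example.

```python
def hazard_probability(p_eruption, vent_probs, exceed_given_vent):
    """Probability that a hazard threshold is exceeded at one grid point.

    p_eruption:        probability of an eruption in the forecast window
    vent_probs:        probability that the vent opens at each candidate
                       position, given an eruption (must sum to 1)
    exceed_given_vent: probability that the threshold (e.g. a tephra load)
                       is exceeded at the grid point, given an eruption
                       from each vent position
    """
    if len(vent_probs) != len(exceed_given_vent):
        raise ValueError("need one conditional probability per vent position")
    if abs(sum(vent_probs) - 1.0) > 1e-6:
        raise ValueError("vent opening probabilities must sum to 1")

    # Average the conditional exceedance over vent positions ...
    conditional = sum(pv * pe for pv, pe in zip(vent_probs, exceed_given_vent))
    # ... and weight by the probability that an eruption occurs at all.
    return p_eruption * conditional


# Illustrative values only, not results from the pilot.
p_total = hazard_probability(
    p_eruption=0.1,
    vent_probs=[0.5, 0.3, 0.2],
    exceed_given_vent=[0.4, 0.1, 0.0],
)
```

Repeating the calculation for every grid point and threshold would give the values from which hazard maps and probability curves can be drawn.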

Software involved

Use Case Owner

Laura Sandri

INGV Bologna

Collaborating Institutions

INGV

BSC

IMO